Towards a Personal Health LLM

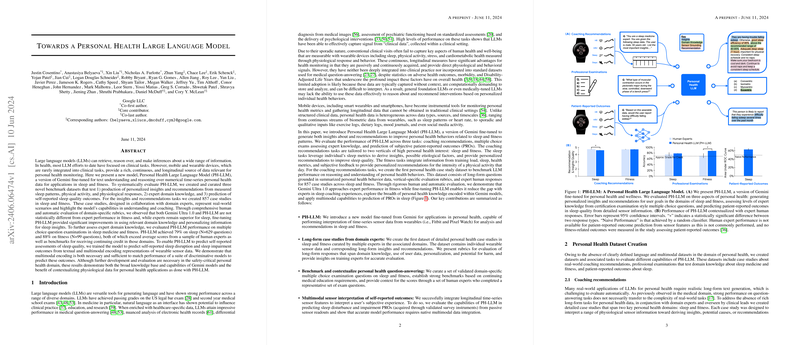

The paper "Towards a Personal Health LLM" introduces PH-LLM, a fine-tuned version of the Gemini model designed to interpret and provide insights from numerical time-series personal health data. This model is specifically tailored for applications in sleep and fitness, emphasizing the integration of data from mobile and wearable devices. The authors argue that such continuous, longitudinal personal health data have been underutilized in clinical practice but hold significant potential for personalized health monitoring.

Model Development and Evaluation

PH-LLM was fine-tuned to generate personalized insights and recommendations based on measured sleep patterns, physical activity, and physiological responses. To evaluate its performance, the authors curated three novel benchmark datasets that address:

- Production of personalized insights and recommendations

- Expert domain knowledge

- Prediction of self-reported sleep quality outcomes

The first of these datasets comprised 857 case studies in sleep and fitness, co-designed with domain experts to represent real-world scenarios. Model outputs were compared against responses from human experts and scored on multiple metrics, including domain-specific rubrics.

Performance and Results

Insights and Recommendations:

- PH-LLM performed close to expert level in generating sleep and fitness insights. In fitness, the base model, Gemini Ultra 1.0, was already comparable to human experts; in sleep, fine-tuning produced statistically significant improvements over the base model.

- Expert evaluations rated the model's outputs highly, particularly noting its ability to reference important data and use domain-relevant knowledge effectively.

Expert Knowledge:

- On professional multiple-choice examinations, PH-LLM scored 79% on sleep knowledge questions (N=629) and 88% on fitness questions (N=99). Both scores surpassed the average scores of human experts and exceeded the thresholds required for continuing education credits in these domains.
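To give a sense of how much weight each exam score can bear, the following is a minimal sketch that puts a 95% Wilson score interval around the two reported accuracies. The correct-answer counts are an assumption, back-computed by rounding the reported percentages against the reported N, and the `wilson_interval` helper is illustrative rather than anything taken from the paper.

```python
import math


def wilson_interval(correct: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    p = correct / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half, centre + half


# ASSUMPTION: counts are reconstructed from the rounded percentages in the text,
# so they may differ slightly from the true number of correct answers.
exams = {
    "sleep": (round(0.79 * 629), 629),
    "fitness": (round(0.88 * 99), 99),
}

for name, (correct, total) in exams.items():
    low, high = wilson_interval(correct, total)
    print(f"{name}: {correct}/{total} = {correct / total:.1%}, 95% CI [{low:.1%}, {high:.1%}]")
```

Because the fitness exam has far fewer questions, its interval is roughly twice as wide as the sleep one, which is worth keeping in mind when comparing the two headline numbers.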

Prediction of Self-Reported Outcomes:

- The paper evaluated PH-LLM's predictive power for self-reported sleep disruption and impairment outcomes based on textual and multimodal sensor data encodings. Results indicated that multimodal encoding was both necessary and sufficient to achieve performance comparable to specialized discriminative models.

- This result underscores PH-LLM's potential to bridge objective measurements and subjective well-being assessments, a critical step toward comprehensive personal health management.
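As an illustration of what a textual encoding of sensor data might look like, here is a minimal sketch that serializes nightly wearable summaries into plain text suitable for a prompt. The `NightSummary` fields, the `encode_as_text` function, and the sample values are all hypothetical; the paper's actual features and prompt format are not reproduced here, and the multimodal route (feeding sensor data to the model through a learned encoder rather than as text) is not shown.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class NightSummary:
    """Hypothetical per-night aggregate from a wearable device."""
    date: str
    sleep_minutes: int
    resting_hr_bpm: float
    hrv_ms: float


def encode_as_text(nights: list[NightSummary]) -> str:
    """Render one line per night plus a simple aggregate line."""
    lines = [
        f"{n.date}: slept {n.sleep_minutes // 60}h {n.sleep_minutes % 60:02d}m, "
        f"resting HR {n.resting_hr_bpm:.0f} bpm, HRV {n.hrv_ms:.0f} ms"
        for n in nights
    ]
    avg_hours = mean(n.sleep_minutes for n in nights) / 60
    lines.append(f"Average sleep: {avg_hours:.1f} h over {len(nights)} nights")
    return "\n".join(lines)


# Made-up sample values, for illustration only.
sample = [
    NightSummary("2024-01-01", 425, 58.0, 42.0),
    NightSummary("2024-01-02", 380, 61.0, 37.0),
]
print(encode_as_text(sample))
```

A text rendering like this is easy to inspect but compresses the underlying time series heavily, which is one plausible reason the paper found text alone insufficient for predicting self-reported outcomes.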

Implications and Future Directions

The research demonstrates the utility of LLMs in personal health domains, highlighting several key contributions:

- Model Fine-tuning: The paper underlines the importance of fine-tuning general LLMs with domain-specific data to enhance performance in generating personalized health insights.

- Use of Wearable Data: By incorporating time-series data from wearable devices, PH-LLM can support ongoing health monitoring, offering continuous, individualized insights that traditional clinical settings rarely capture.

- Evaluation Methodology: The creation of case studies and rigorous evaluation rubrics provides a robust framework for assessing LLMs in health applications, with implications for future model development.

Challenges and Considerations

The paper also discusses challenges such as:

- Understanding Context: Experts noted difficulties in providing precise recommendations due to the lack of comprehensive contextual information about the users' lifestyles.

- Consistency: Some model-generated responses lacked internal consistency across sections, which affected their overall quality despite individual sections being well-rated.

- Addressing Confabulations: Ensuring factual accuracy and preventing confabulations remains an ongoing challenge for LLMs, particularly in the safety-critical domain of personal health.

Conclusion

The findings from this paper represent a significant advance in applying LLMs to personal health domains. By integrating numerical time-series data with domain-specific expertise, PH-LLM approaches expert human performance in providing personalized health insights and recommendations, and it exceeds average expert scores on domain knowledge examinations. This work sets the stage for future developments in AI-driven personal health monitoring, emphasizing the potential of LLMs to enhance individualized healthcare. Further research will be necessary to address the challenges identified and to ensure the safe and effective deployment of these models in real-world applications.