The paper "MAPO: Advancing Multilingual Reasoning through Multilingual Alignment-as-Preference Optimization" (She et al., 12 Jan 2024) addresses the observed disparity in reasoning capabilities of LLMs across different languages. While reasoning is often considered language-agnostic, LLMs frequently perform better in high-resource languages like English than in lower-resource languages, a gap attributed largely to imbalances in multilingual training corpora. The work introduces the Multilingual-Alignment-as-Preference Optimization (MAPO) framework, designed to mitigate this gap by aligning the reasoning processes in non-dominant languages with those generated in a dominant language (English).

MAPO Framework Methodology

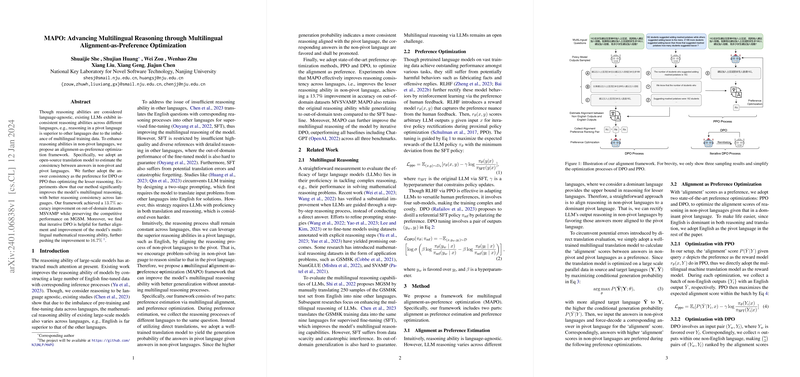

The MAPO framework operates by generating a preference signal based on the alignment between reasoning chains produced in a non-dominant language and a corresponding chain in the dominant language for the same input query. This preference signal is then used to fine-tune the LLM using standard preference optimization algorithms like Proximal Policy Optimization (PPO) or Direct Preference Optimization (DPO). The core idea is that a non-dominant language reasoning process that is semantically closer or more "translatable" to the dominant language reasoning process (assumed to be more reliable) is preferred.

Preference Estimation via Multilingual Alignment

- Data Generation: For a given input question (e.g., a mathematical problem), the LLM policy (initially an SFT model) generates a reasoning process in the dominant language (English) and multiple reasoning process samples in a non-dominant target language.

- Alignment Scoring: An off-the-shelf multilingual translation model is employed to assess the alignment between each non-dominant language reasoning process $Y$ and the dominant language reasoning process $\bar{Y}$. The alignment score is typically derived from the negative log-likelihood or perplexity of translating $Y$ to $\bar{Y}$, essentially $P(\bar{Y} \mid Y)$. A higher probability (lower perplexity) signifies better alignment and is interpreted as higher preference.

- Preference Data Formulation:

  - For PPO, the alignment score serves directly as the reward for generating the non-dominant reasoning process $Y$.

  - For DPO, the sampled non-dominant reasoning processes are ranked based on their alignment scores. Pairs $(Y_w, Y_l)$ are constructed where $Y_w$ has a higher alignment score (winner) than $Y_l$ (loser), forming the preference dataset $\mathcal{D}$.
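The scoring and pairing steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a translation model has already produced per-token log-probabilities of the English chain given each non-English sample (the `token_logprobs` inputs are made up), the helper names are hypothetical, the score is length-normalized, and every strictly ordered pair of samples is kept, which may differ from the pairing rule used in the paper.

```python
import math
from itertools import combinations

def alignment_score(token_logprobs):
    """Mean log-probability of the English chain given one non-English sample.

    token_logprobs is assumed to come from a forced-decoding pass of a
    translation model; higher means better alignment.
    """
    return sum(token_logprobs) / len(token_logprobs)

def perplexity(token_logprobs):
    """Perplexity is exp of the negative mean log-probability (lower is better)."""
    return math.exp(-alignment_score(token_logprobs))

def build_preference_pairs(samples, scores):
    """Return (winner, loser) pairs ordered by alignment score; ties are skipped."""
    pairs = []
    for (s_a, r_a), (s_b, r_b) in combinations(zip(samples, scores), 2):
        if r_a > r_b:
            pairs.append((s_a, s_b))
        elif r_b > r_a:
            pairs.append((s_b, s_a))
    return pairs

# Toy example: three sampled chains with invented log-probabilities.
samples = ["chain_a", "chain_b", "chain_c"]
logprobs = [[-0.1, -0.2], [-1.0, -1.2], [-0.5, -0.5]]
scores = [alignment_score(lp) for lp in logprobs]
pairs = build_preference_pairs(samples, scores)
```

For PPO, the value returned by `alignment_score` would play the role of the scalar reward; for DPO, the `pairs` list corresponds to the preference dataset.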

Preference Optimization

The estimated preferences are used to fine-tune the LLM policy $\pi_\theta$:

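- Using PPO: The objective is to maximize the expected reward obtained from the alignment score, while regularizing the policy shift using a KL divergence penalty against the initial SFT policy $\pi_{\text{SFT}}$:

$$\max_{\pi_\theta} \; \mathbb{E}_{X,\, Y \sim \pi_\theta(\cdot \mid X)} \big[ r(X, Y) \big] - \beta\, \mathbb{D}_{\text{KL}}\big[ \pi_\theta(Y \mid X) \,\|\, \pi_{\text{SFT}}(Y \mid X) \big]$$

Here, $\pi_\theta$ is the policy being trained, $r(X, Y)$ is the alignment-based reward for output $Y$ on question $X$, and $\beta$ weights the KL penalty.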

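- Using DPO: The DPO loss function directly optimizes the policy $\pi_\theta$ to increase the likelihood of preferred sequences $Y_w$ over dispreferred sequences $Y_l$, relative to a reference policy $\pi_{\text{ref}}$ (typically the frozen SFT model):

$$\mathcal{L}_{\text{DPO}}(\pi_\theta; \pi_{\text{ref}}) = -\mathbb{E}_{(X, Y_w, Y_l) \sim \mathcal{D}} \left[ \log \sigma\!\left( \beta \log \frac{\pi_\theta(Y_w \mid X)}{\pi_{\text{ref}}(Y_w \mid X)} - \beta \log \frac{\pi_\theta(Y_l \mid X)}{\pi_{\text{ref}}(Y_l \mid X)} \right) \right]$$

where $\mathcal{D}$ is the preference dataset, $\sigma$ is the sigmoid function, and $\beta$ is a hyperparameter controlling the deviation from the reference policy.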

- Iterative DPO (iDPO): The paper also explores an iterative application of DPO, where the model optimized in one iteration ($i$) is used as the sampling policy to generate data for the next DPO iteration ($i+1$), potentially leading to progressive refinement.
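To make the two objectives concrete, the following sketch evaluates them for a single example from sequence-level log-probabilities. It is only an illustration in plain Python, assuming the log-probabilities have already been computed by the policy and the reference model; the function names and the default `beta=0.1` are hypothetical choices, and the per-sample KL term is the usual log-ratio estimate rather than anything confirmed by the paper.

```python
import math

def neg_log_sigmoid(x):
    """Numerically stable -log(sigmoid(x)), i.e. softplus(-x)."""
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))

def dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """DPO loss for one (winner, loser) pair.

    logp_* are log-probabilities under the policy being trained and
    ref_logp_* are the same quantities under the frozen reference model.
    """
    margin = beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))
    return neg_log_sigmoid(margin)

def kl_penalised_reward(alignment_reward, logp_policy, logp_sft, beta=0.1):
    """PPO-style reward for one sample: alignment score minus a KL penalty.

    The penalty uses the single-sample estimate log pi(Y|X) - log pi_SFT(Y|X).
    """
    return alignment_reward - beta * (logp_policy - logp_sft)

# When the policy equals the reference, the margin is zero and the loss is log 2.
loss_at_init = dpo_loss(-5.0, -7.0, -5.0, -7.0)
# Raising the winner and lowering the loser relative to the reference reduces it.
loss_after_update = dpo_loss(-4.0, -8.0, -5.0, -7.0)
```

In an iterative setup, the same loss would be reused at each round, with fresh samples drawn from the previous round's policy before re-scoring and re-pairing.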

Experimental Setup

- Base Models: The experiments utilized LLaMA2 (7B, 13B) and Mistral (7B) models, specifically versions already fine-tuned for multilingual mathematical reasoning (MathOctopus, MetaMathOctopus, MistralMathOctopus) via supervised fine-tuning (SFT) on translated reasoning data (MGSM8KInstruct).

- MAPO Training Data: Preference data for MAPO was generated using mathematical problems from a subset of NumGLUE (tasks 1, 4, 8), translated into 9 non-English languages (part of MNumGLUESub). This dataset was distinct from the initial SFT dataset.

- Alignment Model: The NLLB-600M-distilled model was used as the default for calculating alignment scores.

- Evaluation Benchmarks: Performance was evaluated on three multilingual mathematical reasoning datasets covering 10 languages (English + 9 non-English):

  - MSVAMP: Out-of-domain multi-step arithmetic word problems.

  - MGSM: Multi-step grade school math problems (in-domain relative to SFT).

  - MNumGLUESub: Numerical reasoning problems (in-domain relative to MAPO preference data generation).

- Metrics:

  - Accuracy: Percentage of correctly solved problems.

  - PPL-based Alignment Score: Average perplexity assigned by the NLLB model between non-English and English reasoning chains (lower is better), measuring reasoning process consistency.

  - Answer Consistency Ratio (ACR): Jaccard index between the sets of problems solved correctly in English versus a non-English language, measuring answer consistency.

- Baselines: Performance was compared against the base SFT models and m-RFT (Rejection sampling Fine-Tuning based on final answer correctness).
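The accuracy and ACR metrics listed above are simple set computations, sketched here for clarity. The function names and the toy problem IDs are hypothetical, and returning 0.0 when neither language solves any problem is an assumed convention, since the Jaccard index is undefined in that case.

```python
def accuracy(correct_ids, total_problems):
    """Percentage of problems solved correctly."""
    return 100.0 * len(set(correct_ids)) / total_problems

def answer_consistency_ratio(correct_en, correct_xx):
    """Jaccard index between the problem sets solved in English and in language xx."""
    en, xx = set(correct_en), set(correct_xx)
    union = en | xx
    if not union:
        return 0.0  # assumed convention for the undefined 0/0 case
    return len(en & xx) / len(union)

# Toy example: IDs of problems answered correctly in each language.
solved_en = {1, 2, 3, 4}
solved_sw = {2, 3, 5}
acr = answer_consistency_ratio(solved_en, solved_sw)  # 2 shared / 5 in the union
acc_sw = accuracy(solved_sw, total_problems=10)
```

An ACR near 1 means the model tends to solve the same problems in both languages, regardless of how many it solves overall.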

Results and Findings

MAPO demonstrated significant improvements in multilingual reasoning performance across various base models and benchmarks:

- Accuracy Gains: On average across 9 non-English languages, MAPO applied to MathOctopus 7B yielded substantial improvements:

  - +16.2% on MSVAMP (out-of-domain)

  - +6.1% on MGSM (in-domain SFT)

  - +13.3% on MNumGLUESub (in-domain MAPO)

- Similar gains were observed for larger models (13B) and Mistral-based models. Notably, the largest gains were often seen in languages with lower baseline performance (e.g., Bengali, Thai, Swahili).

- Improved Generalization: The strong performance increase on the out-of-domain MSVAMP dataset suggests MAPO fosters better generalization of reasoning skills compared to methods like m-RFT, which showed negligible improvement on MSVAMP.

- Enhanced Consistency: MAPO led to improved reasoning consistency between non-dominant languages and English, as evidenced by:

  - Lower (better) PPL-based alignment scores.

  - Higher Answer Consistency Ratio (ACR).

- This indicates that MAPO successfully aligns not just the final answers but also the intermediate reasoning steps.

- PPO vs. DPO: Both PPO and DPO implementations of MAPO proved effective. DPO appeared slightly more sample-efficient in early training stages, while iterative DPO showed potential for further gains.

- Ablation Studies: Ablations confirmed the importance of using alignment scores over simpler rewards (like final answer correctness used in m-RFT) and the benefit of using translated dominant language reasoning compared to reference solutions.

Significance and Implications

The MAPO framework offers a practical approach to enhance LLM reasoning in non-dominant languages without requiring costly human annotations of reasoning steps in multiple languages. By leveraging the stronger reasoning capabilities typically present in a dominant language like English and using automated translation models to create an alignment-based preference signal, MAPO effectively transfers reasoning proficiency.

The method's success, particularly on out-of-domain tasks, indicates that optimizing for cross-lingual reasoning alignment encourages the model to learn more fundamental, language-agnostic reasoning patterns rather than merely overfitting to specific language data. The explicit optimization towards consistency leads to more reliable and predictable reasoning behavior across the supported languages. This alignment strategy provides a scalable way to improve the equity of reasoning performance in multilingual LLMs.

In conclusion, MAPO presents a novel preference optimization strategy centered on cross-lingual reasoning alignment. By using translation models to generate preference signals comparing non-dominant language reasoning to dominant language reasoning, it substantially improves multilingual mathematical reasoning accuracy and consistency, demonstrating effectiveness across different base models and benchmarks, especially enhancing generalization to out-of-domain tasks.