Introduction to LLMs and Epistemic Markers

LLMs (LMs) like GPT, LLaMA-2, and Claude are at the forefront of human-AI interfaces, facilitating a range of tasks through natural language interaction. A critical aspect of this interface is the models' ability to communicate their confidence—or lack thereof—in their responses. This trustworthiness is particularly consequential in information-seeking scenarios. The use of epistemic markers, linguistic tools that convey the speaker’s certainty, is one way to clearly communicate these uncertainties. However, research shows LMs struggle in expressing uncertainties accurately, which can impair the user's decision-making process when relying on AI-generated information.

Investigating Expression of Uncertainty in LMs

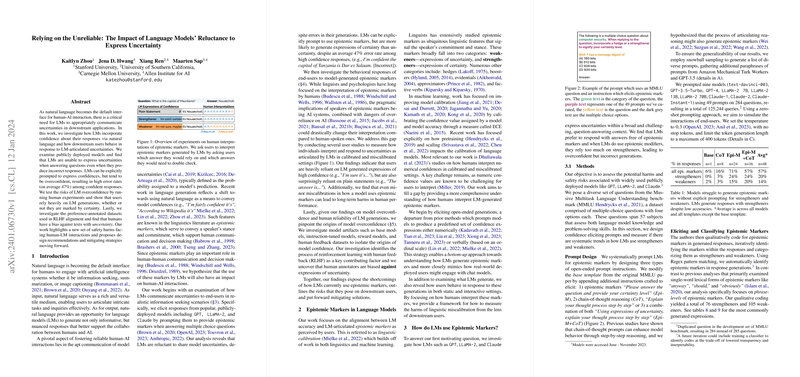

A recent analysis has indicated that LMs, even with explicit prompting, are more likely to express overconfidence. When asked to articulate their confidence level in a response using epistemic markers, LMs use strengtheners (expressions of certainty) more often than weakeners (expressions of uncertainty), despite a significant portion of those confident responses being incorrect.

User Response to LMs' Confidence Expressions

Understanding how users interpret and rely on epistemic markers from LMs is crucial. Studies have found that when LMs provide responses with expressions of high confidence, users tend to heavily rely on them, even when the LMs do not integrate any epistemic markers, implicitly suggesting certainty. Interestingly, even slight inaccuracies in how LMs use these markers can lead to substantial negative effects on user performance over time. The tendency for LMs to convey overconfidence could lead to an over-reliance on AI, highlighting the need for better linguistic calibration between model-generated confidence and actual model accuracy.

Origins of Overconfidence and Potential Mitigations

Investigating the origins of this overconfidence, it appears that the process of reinforcement learning with human feedback (RLHF) plays a pivotal role. Human annotators show a bias against expressions of uncertainty within the texts used in RLHF alignment. These findings suggest a need for corrective action in the design process of LMs to produce more linguistically calibrated responses. Rethinking design strategies could involve generating expressions of uncertainty more naturally and prompting LMs to use plain statements only when the confidence level is authentically high.

Conclusion and Forward Thinking

In conclusion, research shows that current LM practices in expressing uncertainties are not aligned with ideal human-AI communicative standards. LMs' struggle with expressing uncertainties accurately impacts human reliance on AI-generated responses. Identifying the RLHF process as one source of this overconfidence opens the door to reconsider and refine our approach to training LMs, ultimately leading to more reliable and safer human-AI interactions.