Rank-Calibration: A Framework for Assessing Uncertainty in LLMs

Introduction

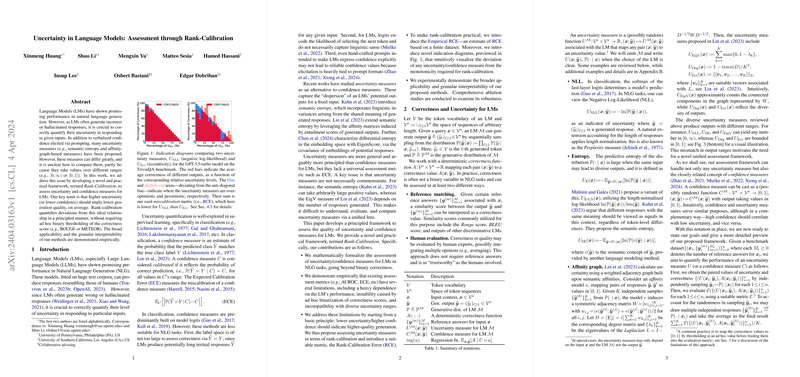

Language models (LMs), and large language models (LLMs) in particular, have significantly advanced natural language generation (NLG). Despite their potential, these models often produce incorrect or hallucinated responses, so it is crucial to quantify the uncertainty in their outputs accurately. This work introduces Rank-Calibration, a framework for assessing uncertainty and confidence measures for LMs in NLG tasks. The framework rests on the principle that lower uncertainty (or higher confidence) should, on average, correspond to higher generation quality. Its Rank-Calibration Error (RCE) metric offers a principled way to quantify deviations from this ideal relationship between uncertainty levels and generation quality.

Uncertainty Measures for LLMs

Existing uncertainty measures for LMs focus on capturing the dispersion of potential outputs for a given input. Notable among these are semantic entropy, which accounts for linguistic invariances among generated responses, and affinity-graph-based measures, which leverage the structural properties of response similarities. Because these measures differ in both their output ranges and their conceptual bases, comparing them requires a universal assessment framework that can accommodate those differences.

The Rank-Calibration Framework

The Rank-Calibration framework assesses the quality of uncertainty measures based on the principle that higher-quality generations should correspond to lower uncertainty levels. This is encapsulated in the Rank-Calibration Error (RCE) metric, which quantifies the deviation from the desired monotonic relationship between uncertainty levels and expected generation quality. The framework also extends to confidence measures, where higher confidence should correspond to higher expected generation quality, and deviations from that relationship are measured in the same way.
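
One way to write this principle down is sketched below. The notation is introduced here for illustration and is an assumption: U is the uncertainty value of a generation, A is its correctness score, reg(u) is the expected correctness at uncertainty level u, and U' is an independent copy of U. The use of an absolute difference is also an assumption, chosen by analogy with the expected calibration error used in classification.

```latex
\mathrm{reg}(u) = \mathbb{E}\left[ A \mid U = u \right],
\qquad
\mathrm{RCE} = \mathbb{E}_{U}\left[\,
  \left|\,
    \mathbb{P}_{U'}\!\left( \mathrm{reg}(U') \ge \mathrm{reg}(U) \right)
    - \mathbb{P}_{U'}\!\left( U' \le U \right)
  \right|
\,\right]
```

Under this reading, the first probability is the rank of a generation's expected quality counted from the top, and the second is the rank of its uncertainty counted from the bottom. The two agree at every uncertainty level, giving an RCE of zero, exactly when expected quality decreases monotonically as uncertainty increases.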

Empirical RCE and Indication Diagrams

To implement the Rank-Calibration framework in practice, the empirical RCE is introduced, using a piecewise constant regression strategy. This involves binning uncertainty values and calculating the average correctness within each bin, which estimates expected correctness as a function of uncertainty; the estimate is then compared against the ideal monotonic relationship. Additionally, indication diagrams provide visual insight into the performance of uncertainty measures, highlighting regions where uncertainty estimates are over-optimistic or over-pessimistic.
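
The binning procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `empirical_rce`, the equal-mass binning, and the leave-one-out rank comparison across bins are all choices made here for the example.

```python
def empirical_rce(uncertainty, correctness, num_bins=10):
    """Binned estimate of a rank-calibration error (illustrative sketch).

    Assumptions made for this example: bins hold (nearly) equal numbers
    of samples, and each bin is ranked against all other bins.
    """
    n = len(uncertainty)
    if n != len(correctness):
        raise ValueError("uncertainty and correctness must have equal length")
    if not 2 <= num_bins <= n:
        raise ValueError("num_bins must be between 2 and the sample count")

    # Sort samples by uncertainty and cut them into equal-mass bins.
    pairs = sorted(zip(uncertainty, correctness), key=lambda p: p[0])
    edges = [i * n // num_bins for i in range(num_bins + 1)]
    bins = [pairs[edges[i]:edges[i + 1]] for i in range(num_bins)]

    # Piecewise constant regression: one mean per bin.
    bin_unc = [sum(u for u, _ in b) / len(b) for b in bins]
    bin_cor = [sum(a for _, a in b) / len(b) for b in bins]

    total = 0.0
    for b in range(num_bins):
        others = [j for j in range(num_bins) if j != b]
        # Fraction of other bins with uncertainty no higher than this bin.
        unc_rank = sum(bin_unc[j] <= bin_unc[b] for j in others) / len(others)
        # Fraction of other bins with correctness at least as high.
        cor_rank = sum(bin_cor[j] >= bin_cor[b] for j in others) / len(others)
        # Weight each bin's rank mismatch by its sample count.
        total += len(bins[b]) * abs(cor_rank - unc_rank)
    return total / n


# Toy data: correctness falls as uncertainty rises, the ideal case.
u = [i / 100 for i in range(100)]
ideal = [1 - x for x in u]
print(empirical_rce(u, ideal, num_bins=5))
```

Because only ranks across bins enter the computation, the result does not change if the uncertainty values are passed through any strictly increasing transformation, which is what lets measures with different output ranges be compared on the same scale.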

Experimental Demonstration

Comprehensive experiments showcase the broad applicability and interpretability of the Rank-Calibration framework, and its robustness is further validated across different LMs, datasets, and correctness measures. Notably, the empirical RCE enables a detailed analysis of how uncertainty measures perform, identifying those that consistently meet the expectation that lower uncertainty corresponds to higher generation quality.

Theoretical Insights

The notion of Rank-Calibration extends beyond current calibration concepts in classification tasks, offering a more generalized perspective on measuring uncertainty, especially in NLG tasks. This work demonstrates that good rank-calibration in uncertainty measures can be achieved through post-hoc recalibration, improving alignment with generation quality expectations.
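
As a rough illustration of what post-hoc recalibration could look like, the sketch below fits a binned map on held-out data and replaces each uncertainty value with one minus the average correctness of its bin. This is a simple histogram-binning approach chosen for the example and is not necessarily the procedure used in this work; the function name `fit_binned_recalibrator` is hypothetical, and correctness scores are assumed to lie in [0, 1].

```python
from bisect import bisect_right


def fit_binned_recalibrator(uncertainty, correctness, num_bins=10):
    """Fit a piecewise constant map from raw to recalibrated uncertainty.

    Illustrative histogram-binning sketch; assumes correctness in [0, 1].
    """
    n = len(uncertainty)
    if n != len(correctness):
        raise ValueError("uncertainty and correctness must have equal length")
    if not 1 <= num_bins <= n:
        raise ValueError("num_bins must be between 1 and the sample count")

    # Sort calibration samples by uncertainty and form equal-mass bins.
    pairs = sorted(zip(uncertainty, correctness), key=lambda p: p[0])
    edges = [i * n // num_bins for i in range(num_bins + 1)]

    # Smallest uncertainty value in each bin after the first one.
    cutoffs = [pairs[edges[i]][0] for i in range(1, num_bins)]
    # Average correctness observed in each bin.
    bin_quality = [
        sum(a for _, a in pairs[edges[i]:edges[i + 1]]) / (edges[i + 1] - edges[i])
        for i in range(num_bins)
    ]

    def recalibrated(u):
        # Locate the bin for u, then report one minus its average quality.
        return 1.0 - bin_quality[bisect_right(cutoffs, u)]

    return recalibrated


# Toy data where the raw measure is backwards: quality rises with uncertainty.
raw = [i / 100 for i in range(100)]
quality = list(raw)
recal = fit_binned_recalibrator(raw, quality, num_bins=5)
print(recal(0.05), recal(0.95))
```

In this toy case the recalibrated value is high where observed quality was low and low where it was high, so the ordering of the recalibrated measure follows observed quality on the calibration data. In practice the map would be fit on one split and evaluated on another.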

Conclusion and Future Directions

The Rank-Calibration framework introduces a novel and effective approach to assessing uncertainty and confidence in LMs. By focusing on the rank-order of uncertainty levels relative to generation quality, this framework provides a more interpretable and adaptable method for evaluating LM outputs. Future research directions include developing inherently rank-calibrated uncertainty measures and integrating rank-calibration into generative pipelines for LMs, aiming to enhance the reliability and usefulness of generated responses in practical applications.