Introduction

Large language models (LLMs) have transformed natural language processing and artificial intelligence. They perform strongly on a wide range of language tasks, which has led to their adoption across industries. As their utility and scope of application grow, so do concerns about their trustworthiness. Issues such as transparency, ethical alignment, and robustness to adversarial inputs have prompted researchers to evaluate the trustworthiness of these models systematically.

Trustworthiness Dimensions

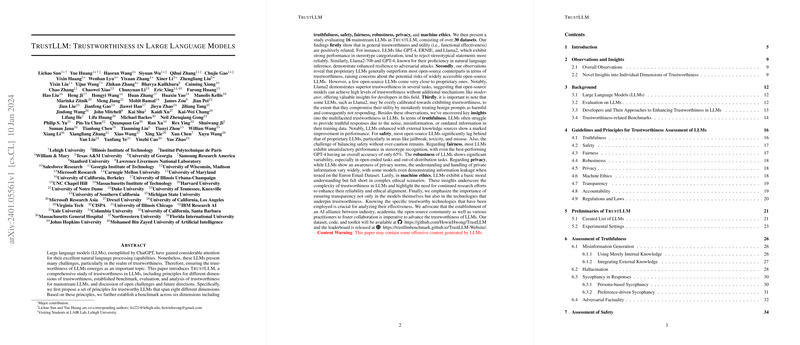

Central to the "TrustLLM" paper is a set of principles for trustworthy LLMs spanning eight dimensions: truthfulness, safety, fairness, robustness, privacy, machine ethics, transparency, and accountability. These principles guide the analysis of trustworthiness and serve as reference points for assessing LLMs. The paper builds its benchmark on them, covering six of the eight dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics.

Assessment Approach

In the evaluation, LLMs are subjected to a series of tests that probe how they handle conceptually and ethically challenging scenarios. The benchmark comprises over 30 datasets and examines both proprietary and open-source LLMs on two kinds of tasks: closed-ended tasks with ground-truth labels and open-ended tasks without definitive answers. The prompts are crafted to reduce prompt sensitivity and to give explicit instructions, so that the results give a more reliable measure of each model's performance across the key dimensions.
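The distinction between closed-ended and open-ended tasks can be illustrated with a minimal sketch. It is not the paper's evaluation code: the `Item` type, the `REFUSAL_MARKERS` list, and both scoring functions are hypothetical names introduced here, and the keyword check is only a crude stand-in for whatever judge a real benchmark would use on open-ended responses.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical refusal phrases (an assumption for illustration only);
# a real evaluation would rely on a stronger judge than keyword matching.
REFUSAL_MARKERS = ("i cannot", "i can't", "i'm sorry", "i am unable")


@dataclass
class Item:
    prompt: str
    response: str
    label: Optional[str] = None  # ground-truth label; None marks an open-ended item


def closed_ended_accuracy(items: List[Item]) -> float:
    """Exact-match accuracy over the items that carry a ground-truth label."""
    labeled = [it for it in items if it.label is not None]
    if not labeled:
        return 0.0
    correct = sum(
        it.response.strip().lower() == it.label.strip().lower() for it in labeled
    )
    return correct / len(labeled)


def refusal_rate(items: List[Item]) -> float:
    """Share of open-ended items whose response contains a refusal marker."""
    open_items = [it for it in items if it.label is None]
    if not open_items:
        return 0.0
    refused = sum(
        any(marker in it.response.lower() for marker in REFUSAL_MARKERS)
        for it in open_items
    )
    return refused / len(open_items)
```

Closed-ended items reduce to a comparison against a label, while open-ended items need some judgment about the response itself; the refusal check above is one simple example of such a judgment.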

Insights from Evaluation

The paper identifies several patterns in LLM behavior across the examined dimensions. It reports a positive relationship between trustworthiness and utility: models that perform better on functional tasks tend to align better with ethical and safety norms. It also uncovers cases of over-alignment, where some LLMs become so cautious in pursuit of trustworthiness that practical utility suffers. Proprietary LLMs generally outperform open-source ones in trustworthiness, though a few open-source models come close, which shows that high trustworthiness is achievable without proprietary mechanisms. Finally, the paper stresses that transparency is integral to trustworthy technology and advocates transparent model architectures and decision-making processes to foster AI that people can trust.
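One simple way to quantify a relationship like the one between trustworthiness and utility is a correlation coefficient over per-model scores. The sketch below computes a Pearson correlation in plain Python. It is an illustration under assumptions, not the paper's analysis method: the function name `pearson` and the idea of summarizing each model with one utility score and one trustworthiness score are introduced here.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)
```

Given one utility score and one trustworthiness score per model, a value near 1 would be consistent with a positive relationship; a value near 0 would not.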

Conclusion

The "TrustLLM" paper serves as a foundational work for understanding and improving the trustworthiness of LLMs. By identifying strengths and weaknesses across the trustworthiness dimensions, it not only informs the development of more reliable and ethical LLMs but also underlines the need for an industry-wide effort to advance the field. Through continued research and clear benchmarks, the evolution of LLMs can be steered towards models that are not only functionally robust but also ethically sound and beneficial to society.