The paper "Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models" introduces a linear attention mechanism, Lightning Attention-2, designed to address the computational cost of handling long sequences in LLMs. Linear attention offers a theoretical complexity of $O(n)$ in the sequence length $n$, avoiding the $O(n^2)$ time complexity of traditional softmax attention. In practice, however, causal linear attention has struggled to realize this advantage, mainly because it requires a cumulative summation (cumsum) along the sequence, which limits parallel computation on hardware.
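To make the cumsum issue concrete, here is a minimal NumPy sketch (not the paper's code) of unnormalized causal linear attention computed two ways: the masked quadratic form and the linear-time recurrence over a running key-value state. The function names are hypothetical, and the sketch assumes no feature map, decay, or normalization.

```python
import numpy as np


def quadratic_causal_linear_attention(q, k, v):
    """Masked left-product form (Q K^T * M) V: O(n^2 d) time."""
    n = q.shape[0]
    mask = np.tril(np.ones((n, n)))  # causal mask: position t sees s <= t
    return ((q @ k.T) * mask) @ v


def recurrent_causal_linear_attention(q, k, v):
    """Right-product form with a running sum of k_t^T v_t: O(n d^2) time.

    The loop over t is the cumulative sum that is hard to parallelize.
    """
    n, d = q.shape
    e = v.shape[1]
    kv = np.zeros((d, e))  # running state: sum over s <= t of outer(k_s, v_s)
    out = np.empty((n, e))
    for t in range(n):
        kv += np.outer(k[t], v[t])
        out[t] = q[t] @ kv
    return out


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((16, 4)) for _ in range(3))
assert np.allclose(
    quadratic_causal_linear_attention(q, k, v),
    recurrent_causal_linear_attention(q, k, v),
)
```

Both functions return the same values; the second scales linearly with sequence length but is strictly sequential, which is the bottleneck the summary refers to.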

Key Innovations and Mechanisms

Lightning Attention-2 presents a solution to these challenges by leveraging a "divide and conquer" approach with tiling, facilitating efficient intra-block and inter-block computations:

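- Intra-block: Conventional attention computation (the masked product of queries and keys, then values) is applied within each small block of the sequence, which keeps the causal result exact.

- Inter-block: The linear attention kernel trick, which relies on the associativity of matrix multiplication, is applied across blocks, so the contribution of all earlier blocks is carried in a fixed-size state and longer sequences can be processed efficiently.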

The implementation is written in Triton and is IO-aware: it tiles the computation so that work is divided efficiently between high-bandwidth memory (HBM) and on-chip SRAM. By separating intra-block (within a block) and inter-block (between blocks) processing, the method takes full advantage of the associativity of matrix products, which earlier causal linear attention implementations could not do because of memory-bound cumsum operations.
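The intra-block/inter-block split described above can be sketched in NumPy as follows. This is an illustrative simplification, not the paper's Triton kernel: it runs on the CPU, omits any decay factor or normalization the paper may use, and the function names and `block_size` parameter are hypothetical.

```python
import numpy as np


def blockwise_causal_linear_attention(q, k, v, block_size=4):
    """Tiled causal linear attention (unnormalized, no decay)."""
    n, d = q.shape
    e = v.shape[1]
    out = np.empty((n, e))
    kv = np.zeros((d, e))  # K^T V accumulated over all previous blocks
    for start in range(0, n, block_size):
        end = min(start + block_size, n)
        qb, kb, vb = q[start:end], k[start:end], v[start:end]
        b = end - start
        # Intra-block: conventional masked attention inside the block.
        mask = np.tril(np.ones((b, b)))
        intra = ((qb @ kb.T) * mask) @ vb
        # Inter-block: right product with the state of earlier blocks.
        inter = qb @ kv
        out[start:end] = intra + inter
        # Fold this block into the state for the blocks that follow.
        kv += kb.T @ vb
    return out


def reference_causal_linear_attention(q, k, v):
    """Full masked quadratic form, used only to check the tiled version."""
    n = q.shape[0]
    return ((q @ k.T) * np.tril(np.ones((n, n)))) @ v


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((10, 3)) for _ in range(3))
assert np.allclose(
    blockwise_causal_linear_attention(q, k, v, block_size=4),
    reference_causal_linear_attention(q, k, v),
)
```

In this sketch the sequential dependency is reduced from one step per token to one step per block, and each step consists of dense matrix products, which is the kind of work GPUs handle well.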

Experimental Results

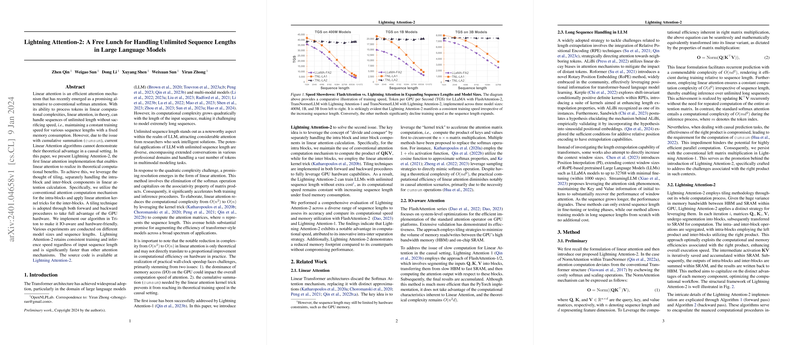

The paper's extensive experimental evaluation compares Lightning Attention-2 to existing attention mechanisms such as FlashAttention-2, demonstrating significant improvements in speed and memory usage, particularly for longer sequences. The empirical evidence shows that:

- Speed: Lightning Attention-2 maintains a high throughput (tokens per second) as sequence length increases, unlike conventional attention mechanisms, whose throughput drops with longer sequences.

- Memory Efficiency: It reduces memory consumption during both training and inference, leading to better hardware utilization without a loss in accuracy.

- Training Performance: Integrating Lightning Attention-2 into models like TransNormerLLM yields marginal improvements in predictive accuracy on standard language modeling benchmarks, maintaining competitive performance against state-of-the-art models.

Benchmark Performance

The enhanced efficiency of Lightning Attention-2 allows it to handle extensive datasets with millions of tokens while maintaining robust performance across empirical NLP benchmarks such as Commonsense Reasoning (CSR) tasks and other language understanding challenges. It achieves noteworthy improvements compared to models constrained by conventional attention methods.

Conclusion

Lightning Attention-2 exploits the computational efficiency of linear matrix operations in transformer models, offering a scalable solution to sequence modeling in machine learning environments demanding high-speed data processing across long sequences, with consistent improvements over existing baseline methods. This advancement broadens the practical applicability of LLMs for contexts requiring extensive sequence length handling, thereby unlocking potential capabilities for real-world applications with high demand on data throughput and efficiency.