Efficient LLM Inference on FPGAs: An Examination of FlightLLM

The paper under review, "FlightLLM: Efficient LLM Inference with a Complete Mapping Flow on FPGAs," addresses the computational cost of deploying Transformer-based large language models (LLMs) by mapping inference onto Field-Programmable Gate Arrays (FPGAs). Its primary contribution is FlightLLM, a framework that improves the efficiency of LLM inference by exploiting FPGA-specific resources together with model compression techniques.

Technical Approach

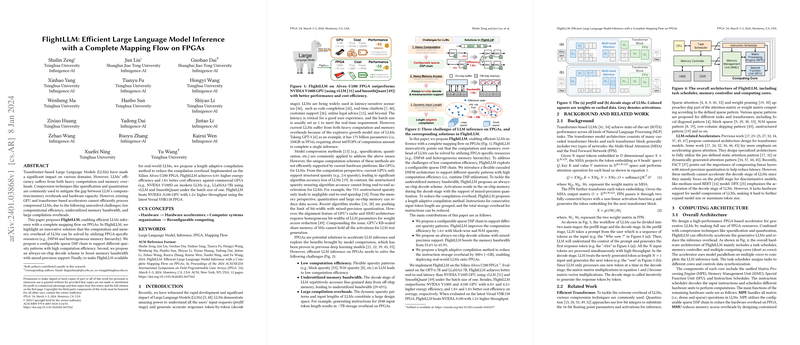

The authors highlight three critical challenges for LLM acceleration: low computational efficiency, underutilized memory bandwidth, and large compilation overheads. The FlightLLM framework addresses these issues through a combination of hardware and software innovations:

- Configurable Sparse DSP Chain: To combat low computational efficiency, FlightLLM introduces a configurable sparse DSP chain that supports various sparsity patterns. This design allows FPGAs to handle flexible block-wise and N:M sparsity, enhancing computation efficiency by increasing DSP utilization rates.

- Always-on-Chip Decode Scheme: To mitigate memory bandwidth underutilization, FlightLLM features an always-on-chip decoding mechanism. This scheme keeps activation data in on-chip memory during the decode stage, reducing reliance on slower off-chip memory accesses, and it supports mixed-precision quantization.

- Length Adaptive Compilation: To reduce the compilation overhead that arises from handling many dynamic input token lengths, the authors propose a length-adaptive compilation method. It groups similar token lengths so that they can reuse the same instructions, substantially reducing the storage required for instructions.
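
Two of the ideas above can be illustrated in software. The following is a minimal sketch, not the paper's hardware design or compiler: `prune_n_of_m` shows what an N:M sparsity pattern looks like on a flat weight list (keep the N largest-magnitude values in every group of M), and `bucket_for_length` shows one plausible way to group token lengths so that every length in a bucket shares a single precompiled instruction stream. The function names, the magnitude-based selection rule, and the bucket edges are all assumptions made for illustration.

```python
from bisect import bisect_left


def prune_n_of_m(weights, n=2, m=4):
    """Keep the n largest-magnitude values in each group of m; zero the rest.

    Illustrative only: magnitude-based selection is an assumption,
    not necessarily the criterion used in the paper.
    """
    if len(weights) % m != 0:
        raise ValueError("number of weights must be a multiple of m")
    pruned = []
    for start in range(0, len(weights), m):
        group = weights[start:start + m]
        # Rank positions by descending magnitude; ties go to the lower index.
        ranked = sorted(range(m), key=lambda i: (-abs(group[i]), i))
        keep = set(ranked[:n])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def bucket_for_length(token_len, bucket_edges):
    """Return the smallest bucket edge that is >= token_len.

    bucket_edges must be sorted ascending. All token lengths that map to
    the same edge would reuse one instruction stream (hypothetical scheme).
    """
    idx = bisect_left(bucket_edges, token_len)
    if idx == len(bucket_edges):
        raise ValueError("token length exceeds the largest bucket")
    return bucket_edges[idx]


# Example: 2:4 sparsity on eight weights, then length bucketing.
sparse = prune_n_of_m([0.1, -0.9, 0.5, 0.2, 1.0, 0.0, -0.3, 0.4])
edges = [64, 128, 256]  # hypothetical bucket boundaries
buckets = [bucket_for_length(t, edges) for t in (1, 100, 128, 200)]
```

With three buckets instead of one instruction stream per possible length, the number of stored instruction streams in this toy setup drops from 256 to 3, at the cost of padding shorter inputs up to the bucket edge.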

Evaluation and Results

The empirical evaluation of FlightLLM demonstrates significant improvements in both energy and cost efficiency when compared to leading GPUs (e.g., NVIDIA V100S and A100). Specifically, FlightLLM, implemented on a Xilinx Alveo U280 FPGA, achieves up to 6.0 times higher energy efficiency and 1.8 times better cost efficiency for models such as LLaMA2-7B. The system also shows 1.2 times higher throughput than the NVIDIA A100 GPU when deployed on the latest Versal VHK158 FPGA.
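
To make the two efficiency metrics concrete, here is a small sketch that assumes the common definitions: energy efficiency as tokens per joule (throughput divided by power) and cost efficiency as throughput per dollar of hardware price. Every number below is a made-up placeholder chosen for easy arithmetic; none of them is a measurement from the paper or from any real device.

```python
def energy_efficiency(tokens_per_s, power_w):
    """Tokens generated per joule: (tokens/s) / (J/s)."""
    return tokens_per_s / power_w


def cost_efficiency(tokens_per_s, price_usd):
    """Throughput per dollar of hardware price: (tokens/s) / USD."""
    return tokens_per_s / price_usd


def relative_gain(candidate, baseline):
    """How many times better the candidate is than the baseline."""
    return candidate / baseline


# Placeholder values only, NOT measurements from the paper.
fpga = {"tokens_per_s": 60.0, "power_w": 40.0, "price_usd": 8000.0}
gpu = {"tokens_per_s": 100.0, "power_w": 250.0, "price_usd": 12000.0}

energy_gain = relative_gain(
    energy_efficiency(fpga["tokens_per_s"], fpga["power_w"]),
    energy_efficiency(gpu["tokens_per_s"], gpu["power_w"]),
)
cost_gain = relative_gain(
    cost_efficiency(fpga["tokens_per_s"], fpga["price_usd"]),
    cost_efficiency(gpu["tokens_per_s"], gpu["price_usd"]),
)
```

The point of the sketch is that a device with lower raw throughput can still come out ahead on energy efficiency if its power draw is proportionally lower, and that the two ratios need not move together.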

Beyond pure performance metrics, the paper also assesses how well the framework retains model accuracy after compression. Using state-of-the-art compression techniques (sparse attention, weight pruning, and mixed-precision quantization), FlightLLM incurs only a minimal increase in model perplexity, which supports the feasibility of these approaches in real-world applications.
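
For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood a model assigns to the reference tokens, so lower is better and a compressed model can be compared with its uncompressed baseline on the same text. The sketch below assumes natural-log probabilities per token are already available; the function name and inputs are illustrative and not taken from the paper.

```python
import math


def perplexity(token_log_probs):
    """exp(-mean(log p)) over the per-token natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 0.25 to every reference token
# is as uncertain as a uniform choice among four options.
uniform_four = perplexity([math.log(0.25)] * 4)
```

Comparing `perplexity(...)` for the original and the compressed model on identical evaluation text gives the kind of accuracy check the paragraph above describes.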

Implications and Future Directions

The proposed methods indicate a promising direction for efficient real-time LLM inference, especially in scenarios where latency and computational resources are constrained. By leveraging the reconfigurable nature of FPGAs and combining it with advanced compression techniques, FlightLLM addresses the bottlenecks associated with traditional GPU acceleration of LLMs.

The implications of this work extend to the many domains where LLMs are increasingly deployed, including edge computing environments where power efficiency is paramount. As FPGAs become more accessible and their integration with machine learning frameworks improves, practical adoption of solutions like FlightLLM could grow.

Future research could explore further optimizations, such as more granular sparsity patterns or advanced scheduling algorithms, to push the boundaries of LLM performance on FPGAs. Additionally, the expansion of FlightLLM to support multi-batch processing and broader model varieties could increase its applicability to a wider range of computational tasks in artificial intelligence.