Overview of Self-Contrast Strategy

Large language models (LLMs) perform well across a range of tasks, particularly when combined with post-hoc prompting techniques that ask the model to reflect on and refine its own responses. Without external feedback, however, this self-reflection is unreliable, because the feedback an LLM generates about its own output tends to be inconsistent and overconfident. To address these limitations, researchers have proposed a new approach, termed "Self-Contrast," aimed at improving the self-reflection mechanism in LLMs.

Enhancing LLM Self-Reflection

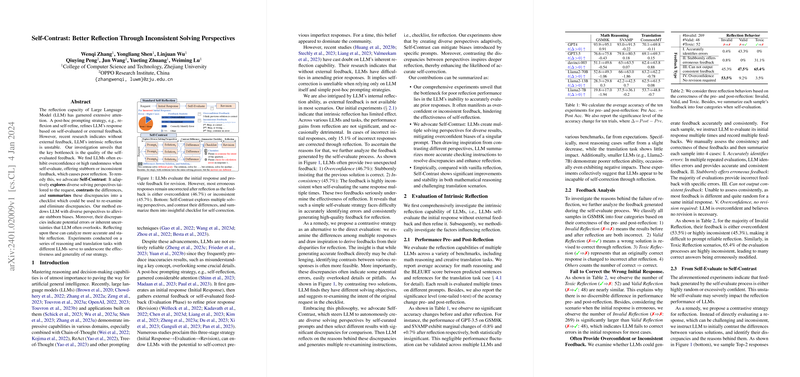

The proposed Self-Contrast method seeks to improve response quality by having the model generate several diverse solving perspectives for a given problem. These perspectives are then contrasted with one another to identify discrepancies, and the discrepancies are summarized into a checklist. The LLM uses this checklist to re-examine and revise its previous responses, which helps it catch biases and errors it would otherwise overlook.
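The steps described above can be sketched as a short pipeline. This is a minimal illustration under stated assumptions, not the researchers' implementation: `llm` is a hypothetical callable that takes a prompt string and returns text, the function name `self_contrast`, the `n_perspectives` parameter, and all prompt wording are invented for this example, and comparing every pair of responses is one possible way to contrast them.

```python
from itertools import combinations
from typing import Callable

# Hypothetical interface: any function mapping a prompt string to a text reply.
LLM = Callable[[str], str]


def self_contrast(problem: str, llm: LLM, n_perspectives: int = 3) -> str:
    """Sketch of a Self-Contrast style loop (prompts are illustrative only)."""
    # 1. Ask the model for several distinct solving perspectives.
    perspectives = [
        llm(
            f"Propose solving perspective #{i + 1} for the problem below, "
            f"distinct from other perspectives.\nProblem: {problem}"
        )
        for i in range(n_perspectives)
    ]

    # 2. Produce one candidate response per perspective.
    responses = [
        llm(f"Problem: {problem}\nPerspective: {p}\nSolve the problem.")
        for p in perspectives
    ]

    # 3. Contrast the candidates pairwise to surface discrepancies
    #    (pairwise comparison is an assumption of this sketch).
    discrepancies = [
        llm(f"List the differences between:\nA: {a}\nB: {b}")
        for a, b in combinations(responses, 2)
    ]

    # 4. Summarize the discrepancies into a checklist.
    checklist = llm(
        "Summarize these discrepancies into a checklist of points to verify:\n"
        + "\n".join(discrepancies)
    )

    # 5. Revisit the candidates with the checklist and return a revised answer.
    return llm(
        f"Problem: {problem}\nCandidate responses:\n"
        + "\n".join(responses)
        + f"\nChecklist:\n{checklist}\n"
        "Re-examine the candidates against the checklist and give a revised answer."
    )
```

With `n_perspectives=3`, this sketch makes 11 model calls: three to propose perspectives, three to solve, three pairwise comparisons, one to build the checklist, and one to revise. Passing the model in as a plain callable keeps the sketch independent of any particular LLM client.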

Methodology and Findings

The research reports systematic experiments that compare Self-Contrast with traditional self-reflection strategies on reasoning and translation tasks. The findings indicate that Self-Contrast delivers significant improvements in both performance and stability: the LLM is directed to produce varied responses, and the discrepancies between those responses serve as a catalyst for more accurate reflection.

Conclusions and Future Directions

Overall, the Self-Contrast approach significantly reduces the occurrence of invalid or toxic reflections, in which the LLM either fails to correct its mistakes or wrongly modifies a correct answer. The method is less effective, however, with smaller-scale models that lack strong instruction-following capabilities. Future work may explore external tools for comparing perspectives, which could offer a more precise and flexible way to improve LLM reflection.
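To make the distinction between invalid and toxic reflections concrete, the small helper below labels a single reflection step from the correctness of the answer before and after it. It is an illustrative sketch: the function name is hypothetical, and the labels "valid" and "unchanged" are assumptions added here to cover the two remaining cases.

```python
def classify_reflection(correct_before: bool, correct_after: bool) -> str:
    """Label one reflection step by answer correctness before and after it."""
    if correct_before and not correct_after:
        return "toxic"      # a correct answer was wrongly modified
    if not correct_before and not correct_after:
        return "invalid"    # the mistake was not corrected
    if not correct_before and correct_after:
        return "valid"      # assumed label: the mistake was fixed
    return "unchanged"      # assumed label: correct answer stayed correct
```

Counting these labels over a set of evaluated examples gives a simple way to see how often reflection helps, does nothing, or does harm.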