Introduction to LLM Evaluation

Evaluating large language models (LLMs) has become a critical part of understanding their capabilities and limitations. Benchmarks typically assess an LLM's performance on each task using a single instruction template. However, natural language offers many ways to phrase the same request, and even semantically equivalent instructions can change an LLM's response. This raises concerns about the reliability of such evaluations.

Delving into Multi-Prompt Evaluation

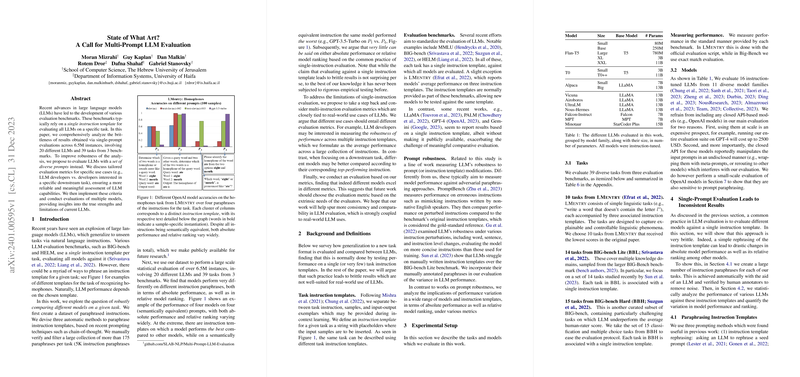

A new paper examines how paraphrasing instructions affects LLM performance. The researchers evaluated 6.5 million instances covering 20 LLMs and 39 tasks drawn from three established benchmarks. They found that a model's performance can change significantly from one paraphrase to another, which challenges conclusions previously drawn from single-prompt evaluations.

The paper also introduces a dataset of over 5,000 varied instruction paraphrases, compiled through a mix of automated methods and manual validation. With it, the authors aim to measure LLM performance more holistically, by considering how a model responds to a range of possible instructions for each task rather than to a single, fixed prompt.
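To make the idea concrete, here is a minimal Python sketch of multi-prompt evaluation. It assumes a model can be treated as a plain function from prompt text to output text and that each task is scored by exact-match accuracy. The function `evaluate_multi_prompt`, the `{input}` placeholder convention, and the toy model are hypothetical illustrations, not the paper's code or data. The sketch scores every instruction template separately, so the same model yields one accuracy per paraphrase instead of a single number.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical type: a "model" is any function mapping a prompt string to an output string.
Model = Callable[[str], str]


def evaluate_multi_prompt(
    model: Model,
    templates: List[str],
    examples: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Return exact-match accuracy for each instruction template.

    Each template is assumed to contain an "{input}" placeholder
    (a convention chosen only for this sketch).
    """
    scores: Dict[str, float] = {}
    for template in templates:
        correct = 0
        for text, gold in examples:
            prompt = template.format(input=text)
            prediction = model(prompt).strip().lower()
            if prediction == gold.strip().lower():
                correct += 1
        scores[template] = correct / len(examples)
    return scores


def toy_model(prompt: str) -> str:
    # A deliberately brittle stand-in: it only "understands" prompts that
    # contain the word "sentiment"; otherwise it always answers "positive".
    text = prompt.lower()
    if "sentiment" in text:
        return "negative" if "bad" in text else "positive"
    return "positive"


# Two semantically equivalent instructions for the same task.
templates = [
    "Classify the sentiment of this review: {input}",
    "Is the following review positive or negative? {input}",
]
examples = [("A great film.", "positive"), ("A bad film.", "negative")]

scores = evaluate_multi_prompt(toy_model, templates, examples)
print(list(scores.values()))  # → [1.0, 0.5]
```

The toy model handles the first phrasing correctly but not the second, so two equivalent instructions produce different scores. A single-template benchmark would report only one of these numbers and hide the sensitivity.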

Rethinking Evaluation Metrics

The authors argue that a one-size-fits-all evaluation metric cannot capture the different ways in which LLMs are used. Instead, they propose metrics tailored to specific use cases, such as an LLM developer aiming for robust performance across many prompts, or a product team looking for the best LLM for a specific downstream task.

They suggest four metrics: a maximum performance metric, which captures the best achievable result for a specific application; an average performance metric, which assesses a model's robustness across paraphrases; a saturation metric, intended for open-ended applications; and a combined performance score that accounts for both peak capability and consistency.
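Given a list of per-paraphrase scores for one model on one task, the four metrics can be sketched as simple aggregates. Maximum and average performance follow directly from their names. The formulas used here for saturation (one minus the gap between peak and average score) and for the combined score (peak score scaled by saturation) are assumptions made for illustration, so the paper should be consulted for its exact definitions.

```python
from statistics import mean
from typing import Dict, Sequence


def max_performance(scores: Sequence[float]) -> float:
    # Best score over all paraphrases: what a user who can pick the best prompt might get.
    return max(scores)


def average_performance(scores: Sequence[float]) -> float:
    # Mean score over all paraphrases: a robustness-oriented view.
    return mean(scores)


def saturation(scores: Sequence[float]) -> float:
    # ASSUMPTION: 1 minus the gap between peak and average performance,
    # so 1.0 means every paraphrase scores the same.
    return 1.0 - (max_performance(scores) - average_performance(scores))


def combined_performance(scores: Sequence[float]) -> float:
    # ASSUMPTION: peak performance scaled by consistency (saturation).
    return max_performance(scores) * saturation(scores)


def summarize(scores: Sequence[float]) -> Dict[str, float]:
    # Collect all four aggregates for one model on one task.
    return {
        "max": max_performance(scores),
        "avg": average_performance(scores),
        "saturation": saturation(scores),
        "combined": combined_performance(scores),
    }


# Hypothetical accuracies of one model under three paraphrases.
print({k: round(v, 3) for k, v in summarize([0.9, 0.7, 0.5]).items()})
# → {'max': 0.9, 'avg': 0.7, 'saturation': 0.8, 'combined': 0.72}
```

Under this formulation, a model whose paraphrase scores are all equal has saturation 1.0 and a combined score equal to its peak, while a large gap between peak and average pulls the combined score down.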

The Real-world Implications

Applying these metrics, the researchers found differences in both absolute performance and model rankings, which sometimes diverged from the results of conventional single-prompt evaluations. This suggests that the choice of evaluation metric should match the specific goals and practical needs of the end users.
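The following sketch illustrates how the choice of metric can reorder models. The two models and their per-paraphrase accuracies are invented for illustration and are not results from the paper: one model excels on a single phrasing but is brittle, while the other is consistently good. Scoring only the first template, or taking the maximum, ranks the brittle model first; averaging across paraphrases reverses the order.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence

# Hypothetical per-paraphrase accuracies for two hypothetical models (not data from the paper).
SCORES: Dict[str, List[float]] = {
    "model_a": [0.95, 0.40, 0.45],  # strong on one phrasing, brittle on others
    "model_b": [0.75, 0.70, 0.72],  # consistently good across phrasings
}


def rank(
    scores: Dict[str, List[float]],
    metric: Callable[[Sequence[float]], float],
) -> List[str]:
    # Sort model names from best to worst under the given aggregate metric.
    return sorted(scores, key=lambda name: metric(scores[name]), reverse=True)


def first_template(values: Sequence[float]) -> float:
    # Mimics a single-prompt benchmark: only the first template counts.
    return values[0]


print(rank(SCORES, first_template))  # → ['model_a', 'model_b']
print(rank(SCORES, max))             # → ['model_a', 'model_b']
print(rank(SCORES, mean))            # → ['model_b', 'model_a']
```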

Developers and researchers may therefore need to adopt multi-prompt evaluations to better understand LLM strengths and weaknesses. Such an assessment could inform model selection for different applications and provide a more nuanced, reliable basis for integrating LLMs into real-world systems.

Conclusion

The paper presents a strong case for multi-prompt evaluation as a more robust and meaningful way to assess LLM performance. By acknowledging the variability that comes with natural language instructions, it encourages evaluation practices that account for this diversity, bringing LLM assessment closer to real-world usage and needs. As the field progresses, this approach may prove crucial for accurately gauging LLMs' strengths and deploying them effectively across a broad range of tasks and applications.