The paper "Towards a Unified Multimodal Reasoning Framework" examines how the reasoning capabilities of language models (LMs) can be improved by integrating multimodal data, focusing specifically on combining Chain-of-Thought (CoT) reasoning with Visual Question Answering (VQA) techniques.
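
To make the CoT + VQA combination more concrete, here is a minimal sketch of one common way to pose a multiple-choice visual question to a text-only LM. The image is described in text, the options are listed, and the model is asked to reason step by step and finish with a fixed answer format that can be parsed. The function names `build_cot_prompt` and `parse_choice`, the `image_context` parameter, and the prompt wording are hypothetical illustrations, not the prompts or pipeline used in the paper.

```python
import re
import string
from typing import Optional, Sequence


def build_cot_prompt(question: str, options: Sequence[str],
                     image_context: Optional[str] = None) -> str:
    """Assemble a chain-of-thought prompt for a multiple-choice visual question.

    Hypothetical: `image_context` stands in for any textual description of the
    image (e.g. a caption or OCR tokens); it is an assumption, not the paper's method.
    """
    letters = string.ascii_uppercase
    lines = []
    if image_context:
        lines.append(f"Image context: {image_context}")
    lines.append(f"Question: {question}")
    # Label options (A), (B), ... so the answer can be parsed reliably.
    lines.append("Options: " + " ".join(
        f"({letters[i]}) {opt}" for i, opt in enumerate(options)))
    # "Let's think step by step" is a widely used zero-shot CoT cue.
    lines.append("Let's think step by step, then finish with 'Answer: (X)'.")
    return "\n".join(lines)


def parse_choice(response: str, n_options: int) -> Optional[int]:
    """Return the 0-based index from the last 'Answer: (X)' in the output, or None."""
    matches = re.findall(r"Answer:\s*\(([A-Z])\)", response)
    if not matches:
        return None
    index = string.ascii_uppercase.index(matches[-1])
    # Reject letters that fall outside the offered options.
    return index if index < n_options else None
```

The parsed index can then be compared with the gold label to score each question; for example, `parse_choice("Water boils at 100 C. Answer: (B)", 2)` returns `1`.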

Key Highlights and Contributions

- Objective and Motivation:

- The paper aims to fill a gap in current LM research by investigating how combining CoT reasoning with VQA can improve the accuracy and reasoning abilities of state-of-the-art models such as GPT-4.

- The motivation stems from the limitations of existing LMs, which, while powerful, still leave considerable room for improvement on complex reasoning tasks, especially those that span multiple modalities.

- Methodology:

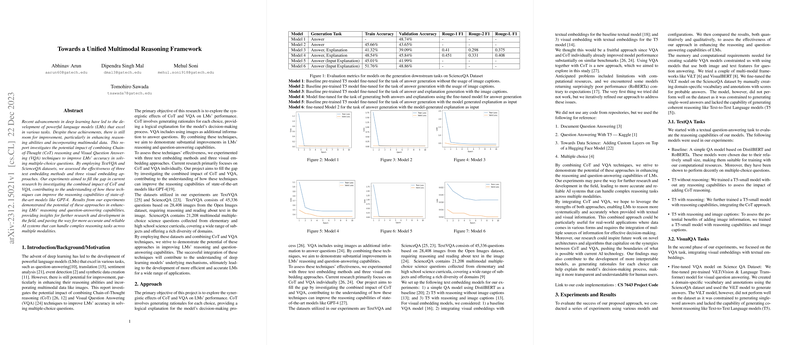

- The authors used two datasets designed for evaluating multimodal reasoning: TextVQA and ScienceQA. Together they allow the combined strategies to be assessed in realistic and diverse contexts.

- The study evaluated three text embedding methods and three visual embedding approaches. Comparing them sheds light on how text and visual data can be combined effectively to improve model performance.

- Experiments and Results:

- The experiments indicated that combining CoT reasoning with VQA techniques can improve performance on reasoning and question-answering tasks.

- Notably, the combined approach showed promising results on multiple-choice questions, a standard format for testing the reasoning ability of LMs.

- The findings suggest that such a unified framework can make AI systems both more accurate and more reliable when handling intricate reasoning tasks.

- Impact and Future Directions:

- The results offer insights for further research and development in AI, particularly toward more holistic and robust models that can address complex multimodal challenges.

- It also sets the stage for future work on optimizing embedding methods and refining how reasoning frameworks are integrated to further improve LM capabilities.
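
The methodology and results bullets mention combining text and visual embeddings and evaluating on multiple-choice questions. The sketch below shows one simple, assumed way to wire those pieces together: fuse a question embedding with an image embedding (element-wise mean or product), choose the answer option whose embedding is most cosine-similar to the fused query, and report accuracy. It does not reproduce the paper's three text and three visual embedding methods; `fuse`, `answer`, `accuracy`, and both fusion choices are hypothetical, and the vectors are assumed to come from encoders that map text and images into a shared space of the same dimension.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def fuse(text_vec: Vector, image_vec: Vector, method: str = "mean") -> list[float]:
    """Combine a question embedding and an image embedding of the same dimension.

    Hypothetical fusion choices: 'mean' averages element-wise,
    'product' multiplies element-wise (a Hadamard product).
    """
    if len(text_vec) != len(image_vec):
        raise ValueError("text and image embeddings must share a dimension")
    if method == "mean":
        return [(t + v) / 2 for t, v in zip(text_vec, image_vec)]
    if method == "product":
        return [t * v for t, v in zip(text_vec, image_vec)]
    raise ValueError(f"unknown fusion method: {method}")


def answer(text_vec: Vector, image_vec: Vector,
           option_vecs: Sequence[Vector], method: str = "mean") -> int:
    """Return the index of the option closest (by cosine) to the fused query."""
    query = fuse(text_vec, image_vec, method)
    scores = [cosine(query, opt) for opt in option_vecs]
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(predictions: Sequence[int], gold: Sequence[int]) -> float:
    """Fraction of multiple-choice predictions that match the gold labels."""
    if not gold:
        raise ValueError("gold labels must be non-empty")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)
```

With toy 2-D vectors, `answer([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [1.0, 0.0]])` selects option `1`; in a real experiment each fusion method would be paired with each text and visual encoder and compared by `accuracy` on the same question set.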

Conclusion

"Towards a Unified Multimodal Reasoning Framework" advances the understanding of how multimodal reasoning and question-answering strategies can be combined. By showing how integrating CoT and VQA can improve reasoning capabilities, the paper points the way toward next-generation AI systems that are more accurate and reliable on complex tasks spanning different data modalities.