Introduction

Large language models (LLMs) stand at the forefront of AI, demonstrating human-like proficiency in understanding and generating language. They serve a range of purposes, from aiding creative writing to summarizing long texts and planning activities, and may even pave the way towards artificial general intelligence (AGI). However, LLMs typically require vast amounts of data and extensive computing resources. While proprietary models like ChatGPT have made headlines, there is an ongoing effort to create open-source alternatives that could democratize access to this technology. One significant limitation of existing LLMs is their focus on English, which leaves a performance gap in other languages such as Chinese.

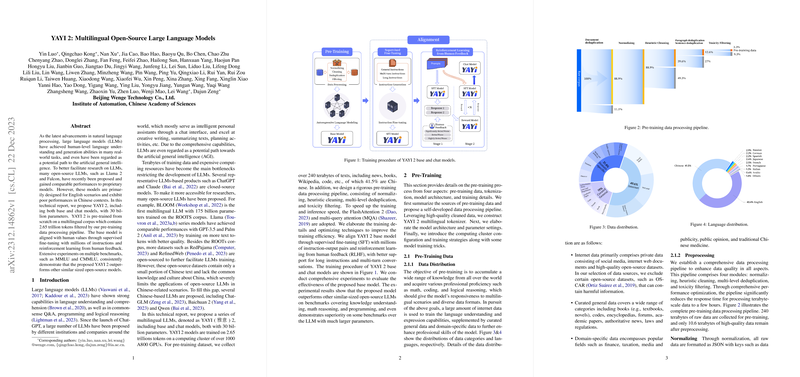

Pre-Training

YAYI 2, the model under consideration, comes as a base model and a chat model, each with 30 billion parameters. Both are pre-trained on a multilingual corpus, which particularly improves performance in Chinese contexts. The developers curated a dataset of over 240 terabytes, 41.5% of which is Chinese, sourced from diverse content such as news and Wikipedia. They designed a rigorous processing pipeline that applies normalization, heuristic cleaning, multi-level deduplication, and toxicity filtering to ensure data quality and safe model outputs. Techniques such as FlashAttention 2 and multi-query attention were used to speed up training and inference.
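The four pipeline stages named above can be illustrated with a toy sketch. This is not the YAYI 2 pipeline: the function names, thresholds, the `TOXIC_TERMS` blocklist, and the two deduplication levels (exact match, then a looser match that ignores case and punctuation) are all assumptions chosen for illustration. A production system would likely use a trained toxicity classifier and near-duplicate detection at scale.

```python
import hashlib
import re
import unicodedata
from typing import Iterable, List

# Hypothetical blocklist standing in for a real toxicity classifier.
TOXIC_TERMS = {"badword"}


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def passes_heuristics(text: str, min_chars: int = 20,
                      max_symbol_ratio: float = 0.3) -> bool:
    """Reject very short documents and documents dominated by symbols.

    Both thresholds are illustrative assumptions.
    """
    if len(text) < min_chars:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / len(text) <= max_symbol_ratio


def fingerprint(text: str, loose: bool = False) -> str:
    """Hash the text; the loose variant ignores case and punctuation."""
    if loose:
        text = re.sub(r"[^\w\s]", "", text.lower())
        text = re.sub(r"\s+", " ", text).strip()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def is_toxic(text: str) -> bool:
    """Flag documents containing any blocklisted term (toy filter)."""
    lowered = text.lower()
    return any(term in lowered for term in TOXIC_TERMS)


def clean_corpus(docs: Iterable[str]) -> List[str]:
    """Run normalization, heuristics, two-level dedup, and toxicity filtering."""
    seen_exact, seen_loose = set(), set()
    kept = []
    for raw in docs:
        text = normalize(raw)
        if not passes_heuristics(text):
            continue
        # Level 1: cheap exact-match check on the normalized text.
        exact = fingerprint(text)
        if exact in seen_exact:
            continue
        # Level 2: looser check that ignores case and punctuation.
        loose = fingerprint(text, loose=True)
        if loose in seen_loose:
            continue
        seen_exact.add(exact)
        seen_loose.add(loose)
        if is_toxic(text):
            continue
        kept.append(text)
    return kept


if __name__ == "__main__":
    sample = [
        "Hello,   world! This is a clean document.",
        "Hello, world! This is a clean document.",
        "hello world this is a clean document",
        "too short",
    ]
    print(clean_corpus(sample))
```

Ordering the stages from cheapest to most expensive (string checks before hashing, hashing before any classifier) is a common design choice, since each stage shrinks the input to the next.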

Alignment

To align the base model, the developers fine-tuned it on millions of instruction-output pairs and applied reinforcement learning from human feedback (RLHF). This step gives the model the ability to handle long instructions and multi-turn conversations. The fine-tuning data covers a wide array of tasks and was assessed along several dimensions, with an emphasis on balance and high quality. The model is also trained on tasks from various domains, which improves its usefulness in real-world business scenarios.
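As a rough illustration of how a multi-turn conversation can be turned into instruction-output pairs for supervised fine-tuning, consider the sketch below. The role markers in `ROLE_TAGS` and the `build_examples` helper are hypothetical; the actual prompt template and data format used for YAYI 2 are not described here.

```python
from typing import Dict, List, Tuple

# Hypothetical role markers; not the template YAYI 2 actually uses.
ROLE_TAGS = {"system": "<system>", "user": "<user>", "assistant": "<assistant>"}


def build_examples(turns: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Convert one multi-turn conversation into (prompt, target) pairs.

    Every assistant turn becomes a target. Its prompt is the full history
    before it, so later examples carry the earlier turns as context.
    """
    examples = []
    history = ""
    for turn in turns:
        tag = ROLE_TAGS[turn["role"]]
        if turn["role"] == "assistant":
            # The prompt ends with the assistant tag, cueing the model to reply.
            examples.append((history + tag, turn["content"]))
        history += f"{tag}{turn['content']}\n"
    return examples


if __name__ == "__main__":
    conversation = [
        {"role": "system", "content": "You are helpful."},
        {"role": "user", "content": "Hi"},
        {"role": "assistant", "content": "Hello!"},
        {"role": "user", "content": "Summarize X"},
        {"role": "assistant", "content": "X is ..."},
    ]
    for prompt, target in build_examples(conversation):
        print(repr(prompt), "->", repr(target))
```

Because each later example includes all earlier turns in its prompt, a model trained on such pairs sees the conversation history as context, which is one plausible way to teach multi-turn behavior.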

Evaluations

Benchmarking shows that the YAYI 2 base model outperforms several open-source models of similar size on standard benchmarks for knowledge and language understanding, mathematical reasoning, and programming. Its performance on benchmarks that test multilingual capabilities and the understanding of contextually relevant information is particularly noteworthy. Although YAYI 2 demonstrates strong capabilities in generating and using language, users are cautioned to review its outputs, especially in sensitive scenarios, to avoid propagating potentially harmful content.

In conclusion, YAYI 2 is a multilingual, open-source LLM that offers significant advancements over its open-source counterparts, especially in Chinese-language contexts. The model was trained with techniques aimed at efficiency and aligned with human feedback, and it performed impressively on benchmarks that test a variety of capabilities considered relevant to AGI.