An Overview of the YuLan Open-source LLM

The paper "YuLan: An Open-source LLM" presents the development and evaluation of YuLan, a series of open-source LLMs with 12 billion parameters. The work was conducted by a team at Renmin University of China and focuses on improving the model's capabilities through a staged pre-training and fine-tuning strategy.

Key Contributions

1. Pre-training on Multilingual Data

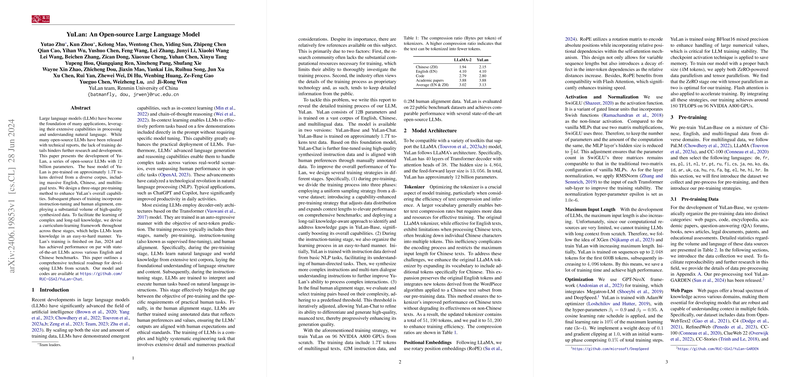

YuLan's foundational model is pre-trained on a vast dataset comprising approximately 1.7 trillion tokens. These tokens are derived from a diverse corpus that includes English, Chinese, and other multilingual texts. The data sources are extensive, including web pages, code repositories, encyclopedias, academic papers, and various domain-specific documents.

2. Three-stage Pre-training Method

The pre-training process is structured into three distinct stages:

- Standard Pre-training: Utilizes a next-token prediction approach with a diverse dataset mix.

- Capability-Enhanced Pre-training: Adds educational assessment data to the training mix to improve performance on complex benchmarks such as MMLU and C-Eval.

- Long-tail Knowledge-Aware Pre-training: Focuses on identifying and filling knowledge gaps by synthesizing targeted question-answer pairs.
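
The next-token prediction objective used in the standard pre-training stage can be illustrated with a minimal sketch. This is not YuLan's training code: the function names, the tiny vocabulary, and the plain-Python implementation are assumptions chosen for readability. The loss is the average cross-entropy between the model's predicted distribution at position t and the actual token at position t + 1.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token t+1 from the logits at position t.

    logits_per_position[t] holds one score per vocabulary entry for the token
    that follows position t. Both arguments are illustrative stand-ins for a
    real model's outputs and a real tokenized sequence.
    """
    if len(token_ids) < 2:
        raise ValueError("need at least two tokens to form a prediction target")
    targets = token_ids[1:]  # the target at position t is the token at t + 1
    losses = []
    for logits, target in zip(logits_per_position, targets):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# A model that scores every token equally over a 4-token vocabulary
# has a loss of log(4), the entropy of a uniform guess.
uniform_logits = [[0.0, 0.0, 0.0, 0.0] for _ in range(3)]
loss = next_token_loss(uniform_logits, [0, 1, 2])
```

In practice the same quantity is computed in batches by a deep-learning framework; the sketch only makes the shift between inputs and targets explicit.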

3. Comprehensive Model Architecture and Optimization

YuLan uses a Transformer-based architecture similar to LLaMA, with an optimized tokenizer, rotary position embeddings, and RMSNorm for training stability. Training combines data parallelism and tensor parallelism to make efficient use of 96 NVIDIA A800 GPUs.
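
To make two of the named components concrete, here is a minimal sketch of RMSNorm and rotary position embeddings in plain Python. It follows the commonly published formulations of both techniques; the `eps` and `base` values, the function names, and the adjacent-pair layout are assumptions, since this overview does not state YuLan's exact settings.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """Divide x by its root mean square, then apply a per-dimension gain.

    Unlike LayerNorm, no mean is subtracted. eps is an assumed default.
    """
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [g * v * inv_rms for g, v in zip(gain, x)]

def rotary_embed(x, position, base=10000.0):
    """Rotate each pair (x[i], x[i+1]) by an angle proportional to position.

    Earlier pairs rotate faster than later ones, so relative offsets between
    positions show up as relative rotations. base is an assumed default, and
    len(x) must be even.
    """
    d = len(x)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c])
    return out

normed = rms_norm([3.0, 4.0], gain=[1.0, 1.0])
rotated = rotary_embed([3.0, 4.0], position=5)
```

Two properties are worth noting: the output of `rms_norm` has a root mean square close to 1 when the gain is all ones, and `rotary_embed` preserves the vector's length because it only applies rotations.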

4. Instruction-Tuning and Human Alignment

After pre-training, YuLan undergoes supervised fine-tuning (instruction-tuning) followed by human alignment, so that it follows instructions and responds in line with human preferences:

- Curriculum Instruction-Tuning: Gradually transitions from simple to complex tasks using a synthesized dataset of over 41 million instructions.

- Human Alignment: Applies a difficulty-based curriculum that leverages a reward function to improve alignment with human preferences.
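
The easy-to-hard ordering behind both curricula can be sketched as follows. This is an illustration under stated assumptions, not the paper's procedure: `curriculum_stages` is a hypothetical helper, and the difficulty function is supplied by the caller because this overview does not specify how YuLan scores difficulty.

```python
def curriculum_stages(examples, difficulty, num_stages):
    """Sort examples from easy to hard and split them into consecutive stages.

    difficulty is any callable returning a sortable score (lower is easier).
    The scoring rule is a placeholder chosen by the caller.
    """
    if num_stages < 1:
        raise ValueError("num_stages must be at least 1")
    ordered = sorted(examples, key=difficulty)
    if not ordered:
        return []
    size = -(-len(ordered) // num_stages)  # ceiling division
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]

# Toy usage: instruction length stands in for difficulty (an assumption
# made only for this example).
instructions = [
    "Summarize this contract and list every obligation of each party.",
    "Translate 'hello' to French.",
    "Explain step by step why the sum of two odd numbers is even.",
    "What is 2 + 2?",
]
stages = curriculum_stages(instructions, difficulty=len, num_stages=2)
for stage_index, stage in enumerate(stages):
    print(stage_index, stage)
```

Training would then proceed stage by stage. For the alignment curriculum, the same helper could take a difficulty function derived from reward scores, though the exact definition is not given here.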

Evaluation and Results

YuLan is benchmarked across a range of tasks in areas such as commonsense reasoning, factual knowledge retrieval, reading comprehension, and mathematical reasoning. It demonstrates performance comparable to state-of-the-art LLMs on 22 public benchmarks.

- Reasoning Tasks: Reports competitive accuracy on datasets such as BoolQ and CommonsenseQA.

- Language Understanding: Performs competitively on challenging datasets like MMLU and C-Eval, with robust capabilities in handling both English and Chinese.

- Alignment: Performs well on alignment benchmarks, producing human-aligned responses in both English and Chinese.

Implications and Future Directions

YuLan's development offers a valuable roadmap for building and improving large-scale LLMs. Its open-source nature and comprehensive training details provide a foundation for further research, potentially aiding advancements in AI capabilities. Future research could explore refinements in long-tail knowledge integration and cross-lingual transfer learning to enhance performance further.

The release of YuLan and its accessible technical report contributes to the ongoing discourse around transparency and reproducibility in AI, encouraging collaborative progress in the field of natural language processing.