An Insightful Overview of WaveCoder: Enhanced Instruction Tuning with Refined Data Generation

The recent publication titled "WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation" presents a significant contribution to the domain of Code LLMs. Authored by researchers from Microsoft, the paper addresses two persistent issues in instruction tuning: data duplication and insufficient data quality control. The authors introduce an LLM-based Generator-Discriminator framework for generating high-quality, diverse instruction data designed specifically for code-related tasks. The work culminates in the WaveCoder model and the CodeOcean dataset.

Methodological Innovations

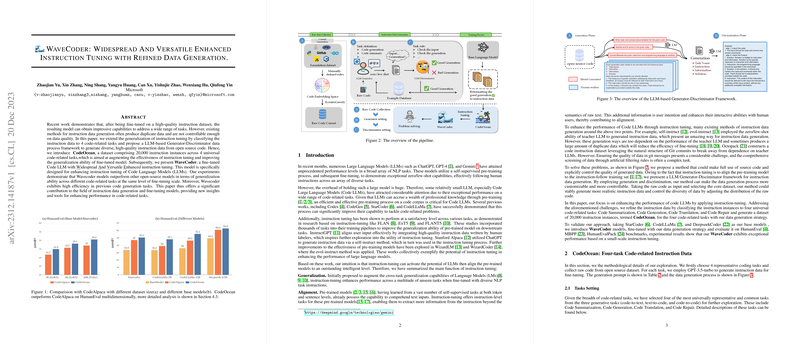

The authors extend existing methodologies with a multi-step data generation process. The instruction data is first categorized into four high-level code-related tasks: Code Summarization, Code Generation, Code Translation, and Code Repair. This classification covers diverse programming scenarios while maintaining the quality and specificity of the data.

Central to their innovation is the Generator-Discriminator framework. Here, an LLM generator produces instruction data, which is then scrutinized by a discriminator. This discriminator is not a conventional binary classifier; rather, it follows a step-by-step, rule-based examination to filter out low-quality data and refine high-quality data. This two-stage approach improves data quality without relying too heavily on the capabilities of the teacher LLM.
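
The generate-then-examine loop described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the `Instance` type, the rule names, `toy_generator`, and the use of plain Python predicates in place of LLM judgments are all assumptions made for the example, and only the filtering half of the discriminator is shown.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple

# The four task categories named in the paper summary.
TASKS = ("code summarization", "code generation", "code translation", "code repair")


@dataclass
class Instance:
    task: str
    instruction: str
    output: str


# A rule is a (name, check) pair. The checks here are plain predicates
# (an assumption for this sketch); in a real pipeline each check would
# more likely be a judgment returned by an LLM.
Rule = Tuple[str, Callable[[Instance], bool]]


def first_failed_rule(instance: Instance, rules: List[Rule]) -> Optional[str]:
    """Walk the rules in order and return the name of the first one that fails."""
    for name, check in rules:
        if not check(instance):
            return name
    return None


def generate_and_filter(
    snippets: Iterable[str],
    generator: Callable[[str], Instance],
    rules: List[Rule],
) -> List[Instance]:
    """Generate one candidate per raw snippet and keep those passing every rule."""
    kept = []
    for snippet in snippets:
        candidate = generator(snippet)
        if first_failed_rule(candidate, rules) is None:
            kept.append(candidate)
    return kept


def toy_generator(snippet: str) -> Instance:
    """Hypothetical stand-in for the LLM generator."""
    output = "Adds two numbers." if "add" in snippet else ""
    return Instance("code summarization", f"Summarize this code:\n{snippet}", output)


TOY_RULES: List[Rule] = [
    ("known_task", lambda inst: inst.task in TASKS),
    ("non_empty_output", lambda inst: bool(inst.output.strip())),
]
```

Calling `generate_and_filter(snippets, toy_generator, TOY_RULES)` keeps only candidates that clear every rule. Returning the name of the first failed rule, rather than a bare pass/fail flag, mirrors the step-by-step examination the summary describes and leaves a reason for each rejection.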

Dataset and Instruction Data

The paper introduces CodeOcean, a dataset comprising 20,000 refined instruction instances spanning the aforementioned four tasks. The dataset is derived from the CodeSearchNet corpus using a series of manual filtering rules and the KCenterGreedy algorithm to maximize diversity. This selection and curation process results in a diverse, high-quality set of instruction data, which is crucial for effective instruction tuning.
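
To make the diversity step concrete, here is a minimal sketch of greedy k-center selection, the general technique behind KCenterGreedy. It assumes each code snippet has already been mapped to a numeric embedding vector and uses Euclidean distance; the function name, the `first` parameter, and the choice of distance are illustrative assumptions, not details taken from the paper.

```python
import math
from typing import List, Sequence


def k_center_greedy(
    points: Sequence[Sequence[float]], k: int, first: int = 0
) -> List[int]:
    """Pick k indices so that each new pick is the point farthest from all picks so far."""
    n = len(points)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the number of points")
    if not 0 <= first < n:
        raise ValueError("first must be a valid index into points")

    selected = [first]
    # min_dist[i] is the distance from point i to its nearest selected center.
    min_dist = [math.dist(p, points[first]) for p in points]

    while len(selected) < k:
        # The next center is the point currently farthest from every center.
        nxt = max(range(n), key=min_dist.__getitem__)
        selected.append(nxt)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[nxt]))
    return selected


# Toy embeddings: two near-duplicates at the origin, one far point, one in between.
toy_points = [(0.0, 0.0), (0.1, 0.0), (10.0, 0.0), (5.0, 0.0)]
chosen = k_center_greedy(toy_points, k=3)
```

In the toy data the near-duplicate of the starting point is the one left out, which is the behavior that makes this kind of selection useful for reducing redundancy in a raw code corpus.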

Experimental Results

Experiments were performed using various base models, including StarCoder, CodeLLaMa, and DeepseekCoder. These models were fine-tuned with the CodeOcean dataset. The resulting WaveCoder variants demonstrated superior performance in generalization across multiple code-related tasks.

Key results reported in the paper include:

- WaveCoder models achieved strong pass@1 scores on the HumanEval benchmark, significantly outperforming other open-source code models.

- On the HumanEvalFix benchmark, which evaluates code repair tasks, WaveCoder-SC-15B achieved an average pass@1 of 33.0, while WaveCoder-DS-6.7B closely approached GPT-4's performance.

- For code summarization tasks evaluated through the HumanEvalExplain benchmark, WaveCoder models demonstrated superior performance, consistently outperforming other models such as WizardCoder and OctoCoder.

These results highlight the effectiveness of the refined instruction data in enhancing model performance across multiple dimensions.
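
For readers unfamiliar with the pass@1 metric used in these benchmarks, the sketch below shows the widely used unbiased pass@k estimator: given n sampled solutions to a problem, of which c pass the unit tests, it estimates the probability that at least one of k randomly drawn samples is correct. Whether the paper computes its scores with exactly this estimator is an assumption here, and the function names are illustrative.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: 1 - C(n - c, k) / C(n, k)."""
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples are incorrect,
    # so the estimate is 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, each given as (n samples, c correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For k = 1 the estimator reduces to c / n, the fraction of sampled solutions that pass. Benchmark scores such as those quoted above are typically this per-problem value averaged over all problems and expressed as a percentage.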

Implications and Future Work

The practical implications of this research are substantial. By refining the data generation process and employing a multi-task framework, the resulting models exhibit strong generalization capabilities without a significant tradeoff in performance for specific tasks. This positions WaveCoder as a highly versatile tool for a wide range of code-related applications, from automated code documentation to bug fixing and language translation.

Theoretically, this research underscores the importance of data quality and diversity in instruction tuning. The use of a discriminator to iteratively refine data provides a new avenue for researchers aiming to optimize training datasets for specialized LLM applications.

Future work could explore strengthening the interplay among different tasks, increasing the dataset size, and further improving the efficiency of instruction tuning. There is also scope for investigating more sophisticated filtering criteria and automated adaptation of the discriminator to different types of datasets or tasks.

Conclusion

In summary, the research on WaveCoder and the CodeOcean dataset provides new insights and tools for improving the efficacy and generalization of Code LLMs. The proposed LLM-based Generator-Discriminator framework marks a significant methodological advance in generating high-quality instruction data, which directly benefits the instruction tuning process. This work lays an important foundation for future research aimed at further enhancing the capabilities and applications of LLMs in coding and beyond.