An In-Depth Analysis of Empowering Code Instruction Tuning with High-Quality Data

The central question of "How Do Your Code LLMs Perform? Empowering Code Instruction Tuning with High-Quality Data" is how much data quality matters when instruction-tuning code models. Many existing code instruction datasets report strong results on benchmarks such as HumanEval, but the paper shows that data leakage calls these results into question. The authors systematically investigate which properties make a code instruction dataset high-quality, and they propose new ways to mitigate data leakage and improve model performance.

Key Findings and Contributions

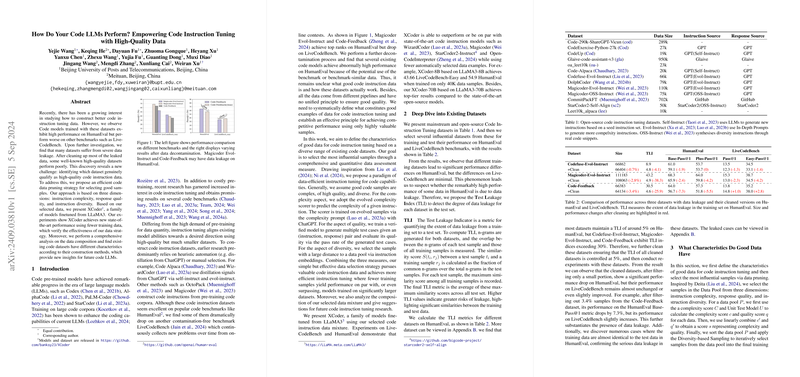

The paper shows that popular code instruction datasets, despite strong results on benchmarks such as HumanEval, often suffer from severe data leakage. Leakage undermines the validity of these benchmarks: high scores may reflect exposure to test problems during training rather than a genuine ability to solve novel problems. For example, models trained on Magicoder Evol-Instruct and Code-Feedback achieve top ranks on HumanEval but drop significantly on LiveCodeBench, a contamination-free benchmark, which points to data contamination.
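Besides comparing scores on HumanEval and LiveCodeBench, one can check for leakage directly by measuring textual overlap between training instructions and benchmark problems. The sketch below does this with word-level n-grams. The helper names, n = 5, and the 0.5 threshold are illustrative assumptions and are not the leakage measure used by the authors.

```python
import re


def ngrams(text: str, n: int) -> set:
    """Return the set of lower-cased, word-level n-grams in `text`."""
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(train_text: str, test_text: str, n: int = 5) -> float:
    """Fraction of the test problem's n-grams that also appear in a training text."""
    test_grams = ngrams(test_text, n)
    if not test_grams:
        return 0.0
    return len(test_grams & ngrams(train_text, n)) / len(test_grams)


def flag_leaked(train_set, test_set, n=5, threshold=0.5):
    """Return (train_idx, test_idx, ratio) for every pair at or above `threshold`."""
    flagged = []
    for i, train_text in enumerate(train_set):
        for j, test_text in enumerate(test_set):
            ratio = overlap_ratio(train_text, test_text, n)
            if ratio >= threshold:
                flagged.append((i, j, ratio))
    return flagged


# Toy data: the first training instruction copies the benchmark prompt verbatim.
train = [
    "Return the sum of all even numbers in the input list. Use a loop.",
    "Sort a list of strings by length.",
]
test = ["Return the sum of all even numbers in the input list."]
print(flag_leaked(train, test))  # [(0, 0, 1.0)]
```

The pairwise loop is quadratic in dataset size. For large instruction sets, a more practical approach is to build the benchmark's n-gram set once and look up each training sample against it.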

To address this, the authors propose a novel data pruning strategy grounded in three dimensions:

- Instruction Complexity: Measured by a complexity scorer trained on evolved samples, that is, instructions rewritten over successive rounds to become more demanding.

- Response Quality: Evaluated via a unit test model that generates multiple test cases to assess the quality of the provided solutions.

- Instruction Diversity: Ensured through embedding-based sampling to maintain a wide range of instruction types.
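The response-quality dimension can be illustrated with a minimal sketch. It runs a candidate solution against a set of test cases and uses the pass rate as the quality score. Here the test cases are hard-coded strings standing in for the output of the unit test model, and the function name `pass_rate` is hypothetical. Because `exec` on model-generated code is unsafe, any real use would need a sandbox and time limits.

```python
def pass_rate(solution_code: str, test_cases: list) -> float:
    """Run a candidate solution, then each assert-style test; return the fraction passed.

    WARNING: exec() runs arbitrary code. Use it only on trusted input or inside a sandbox.
    """
    namespace: dict = {}
    try:
        exec(solution_code, namespace)  # define the candidate function(s)
    except Exception:
        return 0.0  # a solution that fails to load passes no tests
    if not test_cases:
        return 0.0
    passed = 0
    for test in test_cases:
        try:
            exec(test, namespace)  # each test is a single assert statement
            passed += 1
        except Exception:
            pass  # a failed assertion or runtime error counts as a failure
    return passed / len(test_cases)


# Hypothetical stand-ins for test cases produced by a unit test model.
tests = [
    "assert add(2, 3) == 5",
    "assert add(-1, 1) == 0",
    "assert add(0, 0) == 0",
    "assert add(4, 0) == 4",
]
correct = "def add(a, b):\n    return a + b\n"
buggy = "def add(a, b):\n    return a - b\n"
print(pass_rate(correct, tests))  # 1.0
print(pass_rate(buggy, tests))    # 0.5
```

A graded score like this lets a pipeline rank responses rather than only accept or reject them. The buggy `add` still passes the two cases where subtraction happens to agree with addition.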

These principles culminate in XCoder, a family of models fine-tuned from LLaMA3 on the curated data. XCoder outperforms existing models while training on fewer examples, which is evidence that the data pruning strategy is effective.

Deep Dive into Data Pruning Strategy

The strategy combines complexity scoring, quality assessment, and diversity-based sampling to select the most impactful data samples:

- Complexity Scorer: This scorer estimates the complexity of instructions. It is trained on a dataset evolved through multiple rounds of prompting, each making the instructions more intricate, and provides a quantitative measure of instruction difficulty.

- Unit Test Model: This model validates the response quality by generating and executing test cases, thus ensuring the responses are not only syntactically but also functionally accurate.

- Diversity-Based Sampling: This step ensures that the selected data covers a wide spectrum of instruction types, improving the model's robustness and generalizability.
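The three components can be combined in a greedy selection loop. The loop keeps only samples that pass a quality bar, ranks them by complexity, and admits a candidate only if its embedding is sufficiently far from everything already selected. In this sketch, complexity scores, pass rates, and embeddings are assumed to be precomputed (in practice by the complexity scorer, the unit test model, and an embedding model). The `Sample` fields, the cosine-similarity cutoff of 0.9, and the ordering of the steps are illustrative assumptions, and the authors' exact procedure and thresholds may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    instruction: str
    complexity: float       # assumed output of a complexity scorer
    pass_rate: float        # assumed output of unit-test execution
    embedding: list         # assumed output of an embedding model


def cosine_similarity(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select(samples, budget, min_pass_rate=1.0, max_similarity=0.9):
    """Greedy selection: filter by quality, rank by complexity, keep diverse samples."""
    # 1) Quality filter: drop samples whose responses fail generated tests.
    candidates = [s for s in samples if s.pass_rate >= min_pass_rate]
    # 2) Consider the most complex instructions first.
    candidates.sort(key=lambda s: s.complexity, reverse=True)
    selected = []
    for s in candidates:
        if len(selected) >= budget:
            break
        # 3) Diversity: skip near-duplicates of anything already chosen.
        if all(cosine_similarity(s.embedding, t.embedding) < max_similarity
               for t in selected):
            selected.append(s)
    return selected


pool = [
    Sample("Implement an LRU cache with O(1) operations", 0.9, 1.0, [1.0, 0.0, 0.0]),
    Sample("Implement an LRU cache class", 0.8, 1.0, [0.99, 0.1, 0.0]),  # near-duplicate
    Sample("Parse a CSV line with quoted fields", 0.7, 1.0, [0.0, 1.0, 0.0]),
    Sample("Write a regex for ISO dates", 0.95, 0.5, [0.0, 0.0, 1.0]),   # fails some tests
    Sample("Add two numbers", 0.1, 1.0, [0.5, 0.5, 0.7]),
]
print([s.instruction for s in select(pool, budget=2)])
# ['Implement an LRU cache with O(1) operations', 'Parse a CSV line with quoted fields']
```

Sorting by complexity before the diversity check means that, among near-duplicates, the harder variant is the one kept. Running the quality filter first means no budget is spent on responses that fail their tests.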

Results and Implications

Experiments conducted on LLaMA3-8B-Base and LLaMA3-70B-Base models illustrate that XCoder consistently outperforms baseline datasets on both HumanEval and LiveCodeBench benchmarks. The data scaling experiments reveal that XCoder's method achieves comparable or superior performance using significantly fewer data samples, underscoring the effectiveness of the proposed data selection strategy.

This work has several important implications:

- Practicality: By demonstrating that fewer, high-quality data samples can achieve better performance, this approach reduces the computational burden and costs associated with training large models.

- Robustness and Fairness: By exposing and addressing data leakage, the research supports fairer and more robust evaluations and provides a clearer benchmark for future research.

Future Directions

This paper opens several avenues for further research:

- Generalizability Across Model Bases: While initial results using LLaMA3 are promising, future work should test whether these strategies carry over to other model architectures and base models.

- Broader Task Inclusion: Expanding the scope beyond code generation to include tasks like code debugging, refactoring, and interpretation can further validate and refine the proposed data selection strategy.

- Real-World Application and Ethical Considerations: Ensuring the models do not produce harmful or unethical outputs remains a substantial area for continued development.

In conclusion, the paper by Wang et al. presents a rigorous, analytical approach to refining code instruction tuning through high-quality data selection. It emphasizes the importance of addressing data leakage and lays out a clear framework for optimizing performance through strategic data pruning, thereby setting a new benchmark in the development and assessment of code-focused LLMs.