Overview of "EQ-Bench: An Emotional Intelligence Benchmark for LLMs"

The paper "EQ-Bench: An Emotional Intelligence Benchmark for LLMs," by Samuel J. Paech, introduces EQ-Bench, a benchmark designed specifically to evaluate emotional intelligence (EI) in large language models (LLMs). Its primary focus is emotional understanding (EU), a branch of EI defined as the ability to comprehend and interpret complex emotions in social interactions.

Motivation and Methodological Advancements

Existing LLM benchmarks mostly assess general knowledge or specific skills such as coding; few target emotional understanding, despite its relevance to human-like interaction. EQ-Bench aims to fill this gap with a targeted assessment of EU, using dialogues written to depict emotionally charged situations.

Key improvements over prior benchmarks, particularly SECEU, include:

- Reference Answers by the Author: Unlike SECEU, which derives its reference answers from crowd-sourced responses, EQ-Bench uses reference answers determined by the benchmark's author, with the aim of avoiding potential bias.

- Complex Dialogue Scenarios: The questions are based on dialogues generated by GPT-4 that depict scenes of tension or conflict, thus providing a nuanced basis for assessing EU.

- Removal of Summation Constraint: SECEU requires the emotion intensity ratings to sum to ten. EQ-Bench drops this requirement, so each emotion is rated independently, which is a more intuitive way to describe an emotional state.

- Diverse Emotion Selection: Rather than offering only plausible emotions, each question uses a more varied set of emotions to minimize ambiguity.
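To make the summation change concrete, the sketch below contrasts the two answer formats. It assumes integer ratings stored in a plain dict keyed by emotion name; the function names and the example emotions are hypothetical illustrations, not code from the paper.

```python
# Hypothetical validators contrasting SECEU-style and EQ-Bench-style answers.
# Assumption: ratings are integers keyed by emotion name.


def is_valid_seceu_answer(ratings: dict[str, int]) -> bool:
    """SECEU-style: ratings are non-negative, at most 10, and must sum to exactly 10."""
    in_range = all(0 <= r <= 10 for r in ratings.values())
    return in_range and sum(ratings.values()) == 10


def is_valid_eqbench_answer(ratings: dict[str, int], n_emotions: int = 4) -> bool:
    """EQ-Bench-style: four emotions, each rated independently on a 0-10 scale."""
    return len(ratings) == n_emotions and all(0 <= r <= 10 for r in ratings.values())


answer = {"Angry": 7, "Embarrassed": 2, "Relieved": 0, "Hurt": 6}
print(is_valid_seceu_answer(answer))    # False: the ratings sum to 15
print(is_valid_eqbench_answer(answer))  # True: no summation constraint
```

Without the constraint, raising the rating for one emotion no longer forces the others down, so a model can express that a character feels several strong emotions at once.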

Methodology

Each question presents a dialogue and asks the model to rate, on a 0-10 scale, how intensely a character is likely to feel each of four emotions at the end of the dialogue. Because the answers are numeric, they can be scored objectively by measuring their divergence from predetermined reference answers.
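A divergence-based scorer can be sketched as follows. This is NOT the paper's exact formula: it is an assumed scheme that takes the total absolute difference between predicted and reference ratings, normalizes it by the worst possible difference, and averages over questions on a 0-100 scale. The names `question_score` and `benchmark_score` and the example ratings are hypothetical.

```python
# Hypothetical divergence-based scorer; not the paper's exact formula.
# Assumption: each question rates the same emotions on a 0-10 scale.

MAX_RATING = 10


def question_score(predicted: dict[str, int], reference: dict[str, int]) -> float:
    """Return 1.0 for a perfect match and 0.0 for maximal divergence."""
    if predicted.keys() != reference.keys():
        raise ValueError("predicted and reference must rate the same emotions")
    total_diff = sum(abs(predicted[e] - reference[e]) for e in reference)
    max_diff = MAX_RATING * len(reference)  # worst case: every rating off by 10
    return 1.0 - total_diff / max_diff


def benchmark_score(pairs: list[tuple[dict[str, int], dict[str, int]]]) -> float:
    """Average the per-question scores and scale the result to 0-100."""
    scores = [question_score(pred, ref) for pred, ref in pairs]
    return 100.0 * sum(scores) / len(scores)


reference = {"Angry": 8, "Embarrassed": 3, "Relieved": 0, "Hurt": 6}
predicted = {"Angry": 7, "Embarrassed": 2, "Relieved": 0, "Hurt": 6}
print(round(question_score(predicted, reference), 3))  # 0.95 (difference 2 out of 40)
```

Using absolute rather than squared differences keeps each point of disagreement equally costly; other choices are possible and would change how harshly large misses are penalized.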

The paper also describes an automated testing pipeline for running the benchmark and scoring the results, released as open source so the community can reproduce and extend it.
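One step any such pipeline needs is extracting numeric ratings from a model's free-text reply. The sketch below assumes a hypothetical output format of one `Emotion: score` pair per line; the real pipeline's prompt and answer format may differ, and `parse_ratings` is an illustrative name.

```python
import re
from typing import Optional

# Hypothetical answer format: one "Emotion: score" pair per line.
# The actual EQ-Bench prompt and output format may differ.
RATING_LINE = re.compile(r"^\s*([A-Za-z][A-Za-z ]*?)\s*:\s*(\d+)\s*$")


def parse_ratings(text: str, expected: set[str]) -> Optional[dict[str, int]]:
    """Extract 0-10 ratings for the expected emotions, or None if the reply is unusable."""
    ratings: dict[str, int] = {}
    for line in text.splitlines():
        match = RATING_LINE.match(line)
        if match:
            name, value = match.group(1), int(match.group(2))
            if name in expected:
                ratings[name] = value
    # Treat missing emotions or out-of-range values as a failed parse.
    if set(ratings) != expected or any(not 0 <= v <= 10 for v in ratings.values()):
        return None
    return ratings


reply = "Sure, here are my ratings:\nAngry: 7\nEmbarrassed: 2\nRelieved: 0\nHurt: 6"
print(parse_ratings(reply, {"Angry", "Embarrassed", "Relieved", "Hurt"}))
```

Returning `None` rather than guessing keeps unparseable answers visible, so a pipeline can count them separately instead of silently scoring them.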

Results

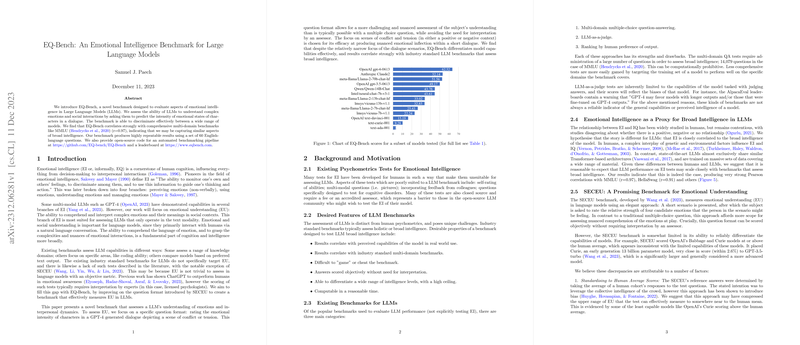

The results show that EQ-Bench discriminates well between models. OpenAI's GPT-4 achieves the highest score, ahead of prominent models such as Anthropic's Claude 2 and Meta's Llama variants. Open-source models such as SynthIA-70B also perform strongly, reflecting the growing capability of community-driven models.

EQ-Bench scores correlate strongly with established multi-domain benchmarks such as MMLU (r=0.97), suggesting that EU may serve as a proxy for broad intelligence in LLMs. Despite the two benchmarks' similar designs, EQ-Bench correlates more weakly with SECEU, which highlights EQ-Bench's distinct approach and its improved score distribution.
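Assuming r denotes the Pearson correlation coefficient, as is conventional, it is computed over paired per-model scores on the two benchmarks. A minimal sketch follows; the inputs in the usage line are toy values, not results from the paper.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length lists of per-model scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two entries")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, each without the 1/n factor (it cancels out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Toy values: a perfectly linear relationship gives r = 1.0.
print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))
```

On Python 3.10 and later, `statistics.correlation` computes the same coefficient. An r near 1 means models rank similarly on both benchmarks; it does not by itself show that the benchmarks measure the same underlying ability.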

Limitations and Future Directions

Emotional interpretation is inherently subjective, which limits how definitive any set of reference answers can be. Future work may include refining questions with expert input and establishing human baseline scores. Bias introduced by the GPT-4-generated dialogues could also be mitigated by using human-authored scenarios.

Because the benchmark is open, it can be adapted transparently as LLM capabilities evolve. Ongoing revisions are anticipated to keep it relevant and robust against models being fine-tuned specifically to score well on it.

Conclusion

EQ-Bench is a significant step towards more nuanced assessment of LLMs, addressing an essential aspect of interaction: emotional intelligence. By offering a candidate proxy for general intelligence evaluation, it provides practical and theoretical insight into how emotional understanding relates to broad intelligence in language models.