Insights into MVBench: A Benchmark for Multi-Modal Video Understanding

The paper "MVBench: A Comprehensive Multi-modal Video Understanding Benchmark" examines the limitations of current multi-modal large language models (MLLMs) in video understanding. It proposes a benchmark aimed at a gap in existing evaluations: temporal understanding.

Overview of MVBench

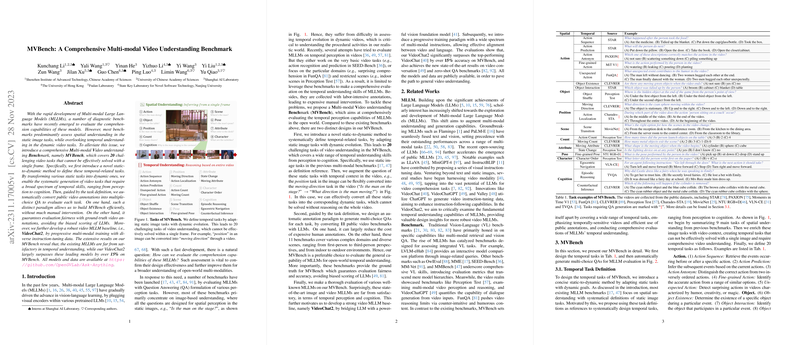

MVBench is motivated by the observation that existing diagnostic benchmarks do not adequately evaluate the temporal understanding of MLLMs. Traditional benchmarks focus mostly on static, image-based tasks. MVBench carries these tasks over to a dynamic video setting, producing 20 video tasks that cover a wide range of temporal skills, from perception to cognition. A static-to-dynamic method converts static image tasks into video tasks whose answers depend on how the scene changes over time, so they cannot be solved effectively from a single frame. For example, a question about where an object is becomes a question about which direction it moves.
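To make the size and shape of the task set concrete, the sketch below encodes the 20 tasks as a taxonomy grouped into evaluation dimensions. The task names and their grouping follow our reading of the paper's task overview and should be treated as an assumption; the variable `MVBENCH_TASKS` and the helper `task_to_dimension` are hypothetical names introduced for illustration.

```python
# Illustrative MVBench-style taxonomy. ASSUMPTION: task names and grouping
# reflect our reading of the paper's overview, not an official data file.
MVBENCH_TASKS: dict[str, list[str]] = {
    "Action": ["Action Sequence", "Action Prediction", "Action Antonym",
               "Fine-grained Action", "Unexpected Action"],
    "Object": ["Object Existence", "Object Interaction", "Object Shuffle"],
    "Position": ["Moving Direction", "Action Localization"],
    "Scene": ["Scene Transition"],
    "Count": ["Action Count", "Moving Count"],
    "Attribute": ["Moving Attribute", "State Change"],
    "Pose": ["Fine-grained Pose"],
    "Character": ["Character Order"],
    "Cognition": ["Egocentric Navigation", "Episodic Reasoning",
                  "Counterfactual Inference"],
}


def task_to_dimension(taxonomy: dict[str, list[str]]) -> dict[str, str]:
    """Invert the taxonomy so each task name maps to its dimension."""
    return {task: dim for dim, tasks in taxonomy.items() for task in tasks}


if __name__ == "__main__":
    lookup = task_to_dimension(MVBENCH_TASKS)
    print(len(lookup))                 # 20
    print(lookup["Moving Direction"])  # Position
```

Inverting the mapping makes it easy to attach a dimension label to each question when results are aggregated later.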

Automatic QA Conversion and Evaluation Paradigm

A central part of the MVBench methodology is the automatic conversion of existing public video annotations into multiple-choice questions. This requires little manual effort. Because each correct answer is derived from ground-truth annotations, scoring reduces to checking the selected option rather than judging free-form text. A system prompt that steers the model toward temporal cues, combined with a short answer prompt that constrains the reply to an option, makes responses easy to parse and the comparison across models fairer.
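The pipeline described above can be sketched end to end: turn an ordered action annotation into a multiple-choice question, wrap it in a system prompt and answer prompt, parse the option letter from the reply, and compute accuracy. This is a minimal sketch under stated assumptions. The annotation format, the question template, the prompt wording, and every function name (`make_mcq`, `build_prompt`, `parse_option`, `accuracy`) are hypothetical and not taken from the MVBench code.

```python
import random
import re

LETTERS = "ABCD"
# Hypothetical prompt wording in the spirit of the paper, not its exact text.
SYSTEM_PROMPT = ("Carefully watch the video and pay attention to the order of "
                 "events, then select the best option.")
ANSWER_PROMPT = "Best option: ("  # assumed prefix that nudges a one-letter reply


def make_mcq(segments, index, distractor_pool, num_options=4, seed=0):
    """Turn time-ordered (start_seconds, action_label) segments into one MCQ.

    The correct answer is the action that directly follows segments[index]."""
    rng = random.Random(seed)
    anchor = segments[index][1]
    answer = segments[index + 1][1]
    # Distractors are any pool labels other than the anchor and the answer.
    candidates = sorted({d for d in distractor_pool if d not in (anchor, answer)})
    options = rng.sample(candidates, num_options - 1) + [answer]
    rng.shuffle(options)
    return {
        "question": f"What did the person do right after '{anchor}'?",
        "options": options,
        "answer": LETTERS[options.index(answer)],  # ground-truth letter
    }


def build_prompt(mcq):
    """Assemble system prompt, question, lettered options, and answer prompt."""
    lines = [f"Question: {mcq['question']}", "Options:"]
    lines += [f"({LETTERS[i]}) {opt}" for i, opt in enumerate(mcq["options"])]
    return SYSTEM_PROMPT + "\n" + "\n".join(lines) + "\n" + ANSWER_PROMPT


def parse_option(reply, num_options=4):
    """Return the option letter at the start of the reply, or None."""
    m = re.match(rf"\s*\(?([{LETTERS[:num_options]}])\b", reply)
    return m.group(1) if m else None


def accuracy(predictions, answers):
    """Fraction of predicted letters that match the ground-truth letters."""
    if not answers:
        return 0.0
    return sum(p == a for p, a in zip(predictions, answers)) / len(answers)


if __name__ == "__main__":
    segs = [(0.0, "open the fridge"), (4.2, "take out a bottle"),
            (9.8, "close the fridge")]
    pool = ["open the fridge", "take out a bottle", "close the fridge",
            "wash a cup", "sit on the sofa", "turn on the light"]
    mcq = make_mcq(segs, 0, pool)
    print(build_prompt(mcq))
    print(accuracy([parse_option("B) take out a bottle")], [mcq["answer"]]))
```

Ending the prompt with an open parenthesis is one way to make a single letter the most natural continuation, which keeps `parse_option` trivial and avoids needing an LLM to grade answers.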

Video MLLM Baseline: VideoChat2

Given the weak temporal understanding of existing MLLMs, the paper also introduces VideoChat2, a video MLLM baseline trained with a progressive multi-modal training strategy. Architecturally, VideoChat2 connects a vision encoder to an LLM through a lightweight QFormer, which condenses visual features into a small set of query tokens that the LLM can consume. VideoChat2 surpasses the leading models by over 15% on MVBench.
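The role of the QFormer as a bridge can be illustrated with a heavily simplified sketch: a fixed set of learnable queries cross-attends over all visual tokens from all frames, and a linear projection maps the result to the LLM's embedding width. This is an assumption-laden toy, not VideoChat2's implementation. A real QFormer stacks transformer layers with self-attention among the queries and learned key/value projections; here there is one head, one layer, keys and values equal to the raw visual tokens, random untrained weights, and toy sizes. All function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, visual_tokens):
    """Single-head cross-attention; keys and values are the visual tokens.

    The output has one row per query, regardless of how many tokens come in."""
    d = len(queries[0])
    keys_t = [list(col) for col in zip(*visual_tokens)]      # (d, N)
    scores = matmul(queries, keys_t)                           # (Q, N)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, visual_tokens)                      # (Q, d)


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


def qformer_like_bridge(frame_features, queries, proj):
    """Flatten per-frame patch tokens, compress with queries, project to LLM width."""
    visual_tokens = [tok for frame in frame_features for tok in frame]
    compressed = cross_attention(queries, visual_tokens)
    return matmul(compressed, proj)                            # (Q, llm_dim)


if __name__ == "__main__":
    rng = random.Random(0)
    vis_dim, llm_dim, num_queries = 8, 16, 4  # toy sizes, not VideoChat2's
    queries = random_matrix(num_queries, vis_dim, rng)
    proj = random_matrix(vis_dim, llm_dim, rng)
    for num_frames in (4, 16):
        frames = [random_matrix(6, vis_dim, rng) for _ in range(num_frames)]
        out = qformer_like_bridge(frames, queries, proj)
        print(num_frames, "frames ->", len(out), "x", len(out[0]))
```

The point of the design is visible in the output shape: whether 4 or 16 frames are encoded, the LLM receives the same small number of query tokens, which keeps the LLM's input length bounded.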

Results and Implications

The evaluations on MVBench show that many leading video MLLMs perform poorly on tasks that require temporal reasoning. VideoChat2 improves substantially on these models, particularly on action, object, scene, pose, and attribute tasks. Tasks involving position, count, and character, however, remain challenging.
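Statements such as "strong on action, weak on count" come from breaking accuracy down by task and by dimension. The sketch below shows one way to compute such a breakdown from per-question results. The record format, function names, and the toy records are hypothetical illustrations, not data from the paper, and averaging task accuracies without weighting within a dimension is an assumed choice.

```python
from collections import defaultdict


def per_task_accuracy(records):
    """records: iterable of (task_name, predicted_letter, gold_letter)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for task, pred, gold in records:
        totals[task] += 1
        hits[task] += int(pred == gold)
    return {t: hits[t] / totals[t] for t in totals}


def per_dimension_accuracy(task_acc, task_to_dim):
    """Unweighted mean of task accuracies within each dimension."""
    grouped = defaultdict(list)
    for task, acc in task_acc.items():
        grouped[task_to_dim[task]].append(acc)
    return {dim: sum(v) / len(v) for dim, v in grouped.items()}


def overall_accuracy(task_acc):
    """Unweighted mean over tasks."""
    return sum(task_acc.values()) / len(task_acc)


if __name__ == "__main__":
    # Toy records for illustration only; not results from the paper.
    records = [("Action Count", "A", "A"), ("Action Count", "B", "C"),
               ("Moving Count", "D", "D"), ("Scene Transition", "A", "A")]
    task_to_dim = {"Action Count": "Count", "Moving Count": "Count",
                   "Scene Transition": "Scene"}
    task_acc = per_task_accuracy(records)
    print(task_acc)  # {'Action Count': 0.5, 'Moving Count': 1.0, 'Scene Transition': 1.0}
    print(per_dimension_accuracy(task_acc, task_to_dim))  # {'Count': 0.75, 'Scene': 1.0}
```

When every task has the same number of questions, the unweighted mean over tasks equals plain accuracy over all questions; the two diverge only if task sizes differ.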

These findings have implications for future MLLM development. They point toward improving grounding and reasoning abilities and integrating multi-modal data more deeply. Future work could also incorporate additional modalities, such as depth and audio, to further strengthen video comprehension.

Future Directions

MVBench provides a framework for evaluating and developing MLLMs with fine-grained temporal understanding. Much remains open in video-based AI models, including refining data annotations and extending the range of evaluation strategies. As research continues, MVBench is likely to remain a useful reference for building more capable and general video understanding models.

Overall, the paper makes a substantial contribution to multi-modal AI by shifting evaluation from static images toward the temporal dynamics of video. As AI continues to evolve, benchmarks like MVBench can help guide the design and training of next-generation video understanding models.