LLMs in Finance: A Survey

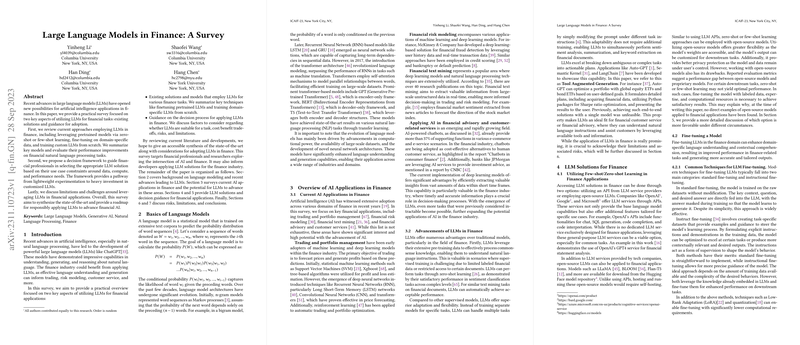

The paper "LLMs in Finance: A Survey" examines how large language models (LLMs) are deployed in the finance industry. It reviews existing methodologies and offers guidance on adopting them for financial tasks. The paper provides both an overview of current applications and a decision-making framework that practitioners can use to choose an approach.

Survey Insights

The survey identifies key approaches used in leveraging LLMs for finance, including:

- Pretrained Models: Applying general-purpose models such as GPT-3 through zero-shot or few-shot learning, which makes financial tasks possible without large labeled datasets.

- Fine-Tuning: Further training pretrained models on domain-specific data to improve performance in finance-specific applications. Fine-tuned models have been shown to outperform general-purpose LLMs on many classification tasks.

- Developing Custom LLMs:

  - Training from Scratch: This approach, exemplified by BloombergGPT, requires substantial computational resources. The model is trained on large financial datasets combined with public data to improve accuracy and generalization on finance-specific tasks.
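To make the zero-shot/few-shot approach concrete, here is a minimal Python sketch of a few-shot prompt for financial sentiment classification. The prompt wording, the three-label scheme, and the helper names `build_few_shot_prompt` and `parse_label` are illustrative assumptions, not taken from the paper. The call to an actual model is omitted because it depends on the provider.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed label set for a three-way sentiment task (illustrative only).
LABELS = ("positive", "negative", "neutral")


@dataclass(frozen=True)
class Example:
    """One labeled demonstration shown to the model inside the prompt."""
    text: str
    label: str


def build_few_shot_prompt(examples: list[Example], query: str) -> str:
    """Build a sentiment prompt; an empty `examples` list gives a zero-shot prompt."""
    lines = [
        "Classify the sentiment of each financial headline as "
        + ", ".join(LABELS) + ".",
        "",
    ]
    for ex in examples:
        if ex.label not in LABELS:
            raise ValueError(f"unknown label: {ex.label!r}")
        lines += [f"Headline: {ex.text}", f"Sentiment: {ex.label}", ""]
    # The query comes last, leaving the label for the model to complete.
    lines += [f"Headline: {query}", "Sentiment:"]
    return "\n".join(lines)


def parse_label(completion: str) -> str | None:
    """Map a raw completion to an allowed label, or None if it is unrecognized."""
    words = completion.strip().lower().split()
    first = words[0].strip(".,") if words else ""
    return first if first in LABELS else None


if __name__ == "__main__":
    # Hypothetical demonstrations; company names are placeholders.
    shots = [
        Example("Company X beats quarterly earnings estimates", "positive"),
        Example("Company Y issues a profit warning", "negative"),
    ]
    print(build_few_shot_prompt(shots, "Company Z reaffirms full-year guidance"))
```

Passing an empty example list yields a zero-shot prompt. The parser returns `None` for completions that do not start with a known label, so those can be counted as failures rather than silently mislabeled.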

Models and Performance

The paper highlights specialized finance LLMs such as FinMA, FinGPT, and BloombergGPT, which have outperformed general-purpose LLMs on tasks including sentiment analysis, news summarization, and question answering. These models draw on domain-specific datasets or instruction-based fine-tuning, which improves their grasp of financial context. Tables in the paper summarize the computational resources required to train these models, showing how costly and time-consuming the process is.
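Instruction-based fine-tuning usually begins by recasting labeled data as instruction/response pairs. The sketch below assumes the common `instruction`/`input`/`output` record layout stored as JSON Lines. The field names, the template text, and the sample sentences are assumptions for illustration; this is not the training format of FinMA, FinGPT, or BloombergGPT.

```python
from __future__ import annotations

import json

# Assumed instruction template; not taken from any of the models named above.
INSTRUCTION = (
    "What is the sentiment of this financial text? "
    "Answer with one of: positive, negative, neutral."
)


def to_instruction_records(pairs: list[tuple[str, str]]) -> list[dict[str, str]]:
    """Turn (text, label) pairs into instruction-tuning records."""
    return [
        {"instruction": INSTRUCTION, "input": text, "output": label}
        for text, label in pairs
    ]


def to_jsonl(records: list[dict[str, str]]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    # Hypothetical labeled data for illustration.
    data = [
        ("Revenue grew 12% year over year", "positive"),
        ("The firm cut its dividend", "negative"),
    ]
    print(to_jsonl(to_instruction_records(data)))
```

Keeping the instruction fixed and varying only `input` and `output` lets one labeled dataset become a training set; a real instruction-tuning corpus would typically mix several task templates.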

Decision Framework for Deployment

The paper proposes a decision framework for applying LLMs to financial tasks, organized into four levels:

- Level 1 (Zero-shot/Few-shot Learning): Best for early exploratory applications and requires minimal computational resources.

- Level 2 (Tool-Augmented Generation): Utilizes external plugins or tools to enhance LLM capabilities in handling complex tasks.

- Level 3 (Fine-Tuning): Requires a robust dataset and computational resources for refining model accuracy and contextual understanding.

- Level 4 (Training from Scratch): The most cost-intensive, suited for developing highly specialized models with comprehensive domain functionality.
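One way to read the four levels is as an escalation path: start with the cheapest option and move up only when a need and the budget both justify it. The following Python sketch encodes that reading. The `Requirements` fields and the decision rules are hypothetical simplifications for illustration, not the paper's exact criteria.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from enum import IntEnum


class Level(IntEnum):
    """The four deployment levels, numbered as in the framework above."""
    ZERO_OR_FEW_SHOT = 1
    TOOL_AUGMENTED = 2
    FINE_TUNING = 3
    TRAIN_FROM_SCRATCH = 4


@dataclass(frozen=True)
class Requirements:
    # Hypothetical yes/no criteria; a real assessment would be more nuanced.
    needs_external_tools: bool        # e.g. live market data or exact arithmetic
    prompting_is_sufficient: bool     # Level 1 quality was acceptable on held-out data
    has_labeled_domain_data: bool     # enough task data to fine-tune on
    has_fine_tuning_compute: bool     # GPU budget for fine-tuning
    needs_general_domain_model: bool  # a broad finance model rather than one task
    has_pretraining_budget: bool      # resources on the scale of training from scratch


def choose_level(req: Requirements) -> Level:
    """Pick a level; falls back to Level 1 unless a need and a budget justify more."""
    # Expensive levels are checked first, but each is gated on both a need
    # and the matching resources.
    if req.needs_general_domain_model and req.has_pretraining_budget:
        return Level.TRAIN_FROM_SCRATCH
    if (not req.prompting_is_sufficient
            and req.has_labeled_domain_data
            and req.has_fine_tuning_compute):
        return Level.FINE_TUNING
    if req.needs_external_tools:
        return Level.TOOL_AUGMENTED
    return Level.ZERO_OR_FEW_SHOT


if __name__ == "__main__":
    pilot = Requirements(
        needs_external_tools=False,
        prompting_is_sufficient=True,
        has_labeled_domain_data=False,
        has_fine_tuning_compute=False,
        needs_general_domain_model=False,
        has_pretraining_budget=False,
    )
    print(choose_level(pilot).name)  # ZERO_OR_FEW_SHOT
    print(choose_level(replace(pilot, needs_external_tools=True)).name)  # TOOL_AUGMENTED
```

Because every costly level requires both a need flag and a resource flag, the function stays at Level 1 whenever no stronger requirement applies.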

Considerations and Challenges

The paper acknowledges the challenges of using LLMs in finance, such as the risk of bias and misinformation. It stresses the need for robust evaluation frameworks that measure model performance on both task-specific and general metrics, so that models meet the specific requirements of financial institutions.

Data privacy also weighs heavily in the choice between open-source models and third-party services: where data security is paramount, privacy concerns favor in-house solutions.
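For classification tasks such as financial sentiment analysis, task-specific evaluation often reports accuracy alongside macro-averaged F1, which weights every class equally and is therefore less flattered by a dominant class. The following self-contained Python sketch computes both. The metric choice here is an illustrative assumption rather than the paper's prescribed evaluation.

```python
from __future__ import annotations

from collections import Counter


def _check(gold: list[str], pred: list[str]) -> None:
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and of equal length")


def accuracy(gold: list[str], pred: list[str]) -> float:
    """Fraction of predictions that exactly match the gold label."""
    _check(gold, pred)
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold: list[str], pred: list[str]) -> float:
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    _check(gold, pred)
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[g] += 1  # missed the true label g
    labels = set(gold) | set(pred)
    # Per-class F1 written as 2*TP / (2*TP + FP + FN); the denominator is
    # positive because every label here occurs in gold or pred.
    scores = [2 * tp[c] / (2 * tp[c] + fp[c] + fn[c]) for c in labels]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    gold = ["positive", "negative", "neutral", "positive"]
    pred = ["positive", "neutral", "neutral", "negative"]
    print(accuracy(gold, pred), round(macro_f1(gold, pred), 3))  # 0.5 0.444
```

In the example, macro-F1 falls below accuracy because the `negative` class is never predicted correctly and contributes an F1 of zero.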

Implications for the Future

As computational capabilities and available datasets continue to grow, LLMs are likely to play a larger role in the finance sector. The paper anticipates broader access to advanced NLP tools within finance, driving innovation in automated trading, risk assessment, and personalized financial advice.

In conclusion, the survey offers a practical roadmap for applying LLMs in finance, connecting existing methods with future opportunities so that AI applications in this critical sector can advance responsibly.