Comparative Analysis of Abstraction and Reasoning in Humans, GPT-4, and GPT-4V

The paper "Comparing Humans, GPT-4, and GPT-4V on Abstraction and Reasoning Tasks" by Melanie Mitchell, Alessandro B. Palmarini, and Arseny Moskvichev examines the abstract reasoning capabilities of large language models (LLMs), specifically GPT-4 and its multimodal variant GPT-4V. Using the ConceptARC benchmark, the authors test how well these models can form and apply abstractions, a fundamental cognitive ability often associated with human intelligence.

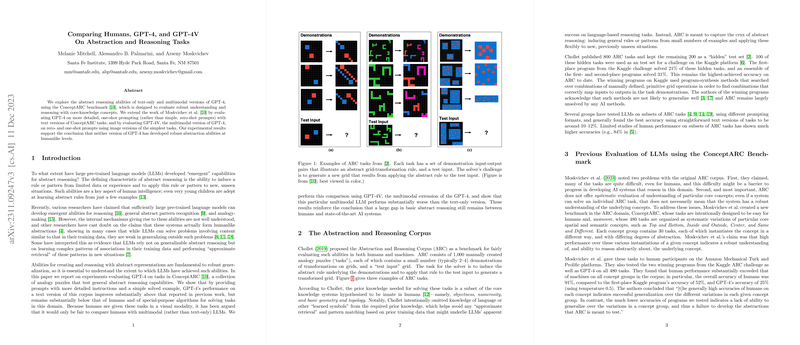

ConceptARC Benchmark

ConceptARC is a benchmark built in the domain of the Abstraction and Reasoning Corpus (ARC) proposed by Chollet; it is a separate set of tasks rather than a subset of ARC. It is designed to evaluate understanding of core-knowledge concepts systematically. It includes 480 tasks organized into 16 groups of 30, each group centered on a distinct spatial or semantic concept. Unlike ARC, which generally presents highly challenging problems, ConceptARC uses deliberately simpler tasks. This keeps the focus on whether a solver has grasped the underlying concept rather than on how well it copes with task complexity.
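
To make the task format concrete, the following is a minimal sketch that assumes ConceptARC tasks follow the usual ARC layout: a few demonstration input/output grid pairs plus one or more test inputs, with each grid a 2D array of color indices. The toy task, the `mirror_left_right` rule, and the `rule_fits` helper are invented for illustration and are not taken from the benchmark or the paper.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]  # each cell is a color index (0-9 in ARC)

# Hypothetical toy task, NOT an actual ConceptARC task.
# "train" holds the demonstrations; "test" holds the held-out pair(s).
toy_task: Dict[str, list] = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
        {"input": [[0, 0, 2], [3, 0, 0]], "output": [[2, 0, 0], [0, 0, 3]]},
    ],
    "test": [{"input": [[0, 4, 0, 0]], "output": [[0, 0, 4, 0]]}],
}


def mirror_left_right(grid: Grid) -> Grid:
    """Candidate abstract rule: reflect every row left-to-right."""
    return [list(reversed(row)) for row in grid]


def rule_fits(task: Dict[str, list], rule: Callable[[Grid], Grid]) -> bool:
    """Return True if the rule reproduces every demonstration output."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


if __name__ == "__main__":
    print(rule_fits(toy_task, mirror_left_right))
    print(mirror_left_right(toy_task["test"][0]["input"]))
```

A solver, human or model, has to infer a rule like this from the demonstrations alone and then apply it to the test input. The benchmark's concept groups vary the rule while keeping this format fixed.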

Experimental Setup and Findings

The research explores two questions: first, how GPT-4 performs when given a more detailed one-shot prompt that includes instructions and a solved example; second, how GPT-4V performs on visual (image) versions of ConceptARC tasks. Both experiments address a limitation of Moskvichev et al.'s earlier work, in which GPT-4 was tested only with a simple zero-shot prompt.

- Text-Only GPT-4: The richer one-shot prompt raised accuracy only modestly over the earlier evaluation. Even so, GPT-4 reached 33% accuracy, far below the 91% achieved by humans.

- Multimodal GPT-4V: On the minimal tasks of ConceptARC, GPT-4V performed even worse than its text-only counterpart, underscoring its limitations in processing and abstracting from visual inputs.
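
The accuracy figures above can be read as exact-match scores: a prediction counts as correct only if the whole output grid matches the target. The sketch below illustrates that scoring, along with one way to serialize a grid as text for a text-only prompt. The space-separated serialization and both helper names (`grid_to_text`, `exact_match_accuracy`) are assumptions made for illustration; they are not the paper's actual prompt format or evaluation code.

```python
from typing import List

Grid = List[List[int]]


def grid_to_text(grid: Grid) -> str:
    """Serialize a grid as rows of space-separated integers.

    Assumed format for illustration; the paper's prompt may differ.
    """
    return "\n".join(" ".join(str(cell) for cell in row) for row in grid)


def exact_match_accuracy(predictions: List[Grid], targets: List[Grid]) -> float:
    """Fraction of predictions identical, cell for cell, to their targets."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    correct = sum(pred == tgt for pred, tgt in zip(predictions, targets))
    return correct / len(targets)


if __name__ == "__main__":
    print(grid_to_text([[0, 1], [2, 3]]))
    # Two of three toy predictions match their targets exactly.
    preds = [[[1]], [[2]], [[0]]]
    golds = [[[1]], [[2]], [[3]]]
    print(exact_match_accuracy(preds, golds))
```

Under exact-match scoring, a grid that is wrong in a single cell scores the same as one that is wrong everywhere, so partial grasp of a concept earns no credit.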

Implications of the Findings

The results underscore a critical gap in abstract reasoning between current LLMs and human cognition. GPT-4 improves somewhat with enhanced prompting, but even this advanced model does not achieve human-like abstraction when dealing with novel concepts not present in its training data. The GPT-4V results further illustrate how difficult it is to use multimodal inputs to improve abstraction and reasoning.

Theoretical and Practical Considerations

The paper clarifies the limits of LLM abstraction and emphasizes that advanced LLMs rely predominantly on patterned associations derived from extensive training datasets. Their weakness in abstract reasoning, particularly in new or unseen contexts, therefore suggests an inclination towards memorization over genuine pattern induction.

Future Directions

Looking forward, this research invites further work in several areas: improving model architectures to better handle multimodal inputs, developing new prompting strategies, and creating comprehensive benchmarks that reflect diverse cognitive tasks. Studying the interplay between different core-knowledge concepts could also illuminate underlying cognitive processes and guide new model evaluation frameworks.

In conclusion, the paper offers a critical evaluation of current AI capabilities in abstract reasoning and provides a solid foundation for moving beyond the limitations of current LLMs. It points towards refining evaluation methods and models so as to narrow the gap in abstraction capability between human and artificial intelligence.