Understanding the Performance of LLMs

Large language models (LLMs) are reshaping artificial intelligence with their ability to generalize across many tasks. As model sizes keep growing, optimizing runtime performance in each stage (training, fine-tuning, and inference) becomes critical. This analysis examines LLM performance in light of system optimizations and hardware capabilities. It offers insights into the efficiency of current frameworks and pointers for future improvements.

Training LLMs: Techniques and Efficiency

Training an LLM is computationally expensive. The paper benchmarks training performance across models of different sizes (billions of parameters) and measures how individual optimization techniques contribute to system efficiency. It examines three techniques. ZeRO reduces per-GPU memory by partitioning optimizer states, gradients, and parameters across data-parallel workers. Quantization stores weights and activations at lower numerical precision. FlashAttention is an optimized attention kernel that improves compute efficiency. ZeRO conserves memory without compromising training speed. Offloading techniques, by contrast, can slow training considerably because of the extra CPU work and host-device data transfers they add. FlashAttention both speeds up training and works alongside memory-saving methods, which argues for its use. Notably, the paper also finds that even optimized systems may not fully utilize GPU resources, which leaves room for further efficiency gains.
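To make ZeRO's memory savings concrete, the following sketch applies the per-GPU model-state accounting from the ZeRO paper for mixed-precision Adam training. It counts 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer state (master weights, momentum, and variance). This is a back-of-the-envelope estimate, not the benchmarked paper's methodology. It ignores activations, temporary buffers, and fragmentation. The function name and the 7B-parameter, 8-GPU example are hypothetical.

```python
# Approximate per-GPU memory for model states under mixed-precision Adam,
# following the ZeRO paper's accounting. Activations and buffers are ignored.

BYTES_FP16_PARAMS = 2   # fp16 copy of the weights
BYTES_FP16_GRADS = 2    # fp16 gradients
BYTES_OPTIMIZER = 12    # fp32 master weights + momentum + variance (K = 12)


def model_state_bytes_per_gpu(num_params: float, num_gpus: int, stage: int) -> float:
    """Return approximate model-state bytes per GPU for ZeRO stage 0-3."""
    p, g, o = BYTES_FP16_PARAMS, BYTES_FP16_GRADS, BYTES_OPTIMIZER
    n = num_gpus
    if stage == 0:    # plain data parallelism: everything replicated on each GPU
        per_param = p + g + o
    elif stage == 1:  # partition optimizer states across GPUs
        per_param = p + g + o / n
    elif stage == 2:  # additionally partition gradients
        per_param = p + (g + o) / n
    elif stage == 3:  # additionally partition the parameters themselves
        per_param = (p + g + o) / n
    else:
        raise ValueError("stage must be 0, 1, 2, or 3")
    return num_params * per_param


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    for stage in range(4):
        gib = model_state_bytes_per_gpu(params, num_gpus=8, stage=stage) / 2**30
        print(f"ZeRO stage {stage}: {gib:.1f} GiB per GPU")
```

Under these assumptions, stage 3 divides all model-state memory by the number of GPUs. The hypothetical 7B model drops from roughly 104 GiB per GPU at stage 0 to roughly 13 GiB at stage 3. The estimate does not model communication costs.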

Fine-Tuning LLMs: Balancing Efficiency and Costs

Once pre-trained, an LLM is fine-tuned to customize it for specific downstream tasks. The paper evaluates two parameter-efficient fine-tuning (PEFT) methods. LoRA freezes the pre-trained weights and trains small low-rank matrices added to selected layers. QLoRA applies LoRA on top of a base model quantized to 4-bit precision to reduce memory. LoRA achieved considerably higher throughput than QLoRA. This reflects the trade-off between QLoRA's memory savings and the extra computation that quantization introduces. Fine-tuning throughput increases further when these methods are combined with techniques such as FlashAttention and ZeRO, showing that method combinations can yield significant efficiency gains.
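Here is a minimal pure-Python sketch of a LoRA-adapted linear layer, following the formulation in the LoRA paper. The frozen weight W0 is augmented with a low-rank update scaled by alpha / r, so h = W0·x + (alpha / r)·B·A·x. B is initialized to zero so that training starts from the pre-trained behavior. The class and helper names, the 0.01 standard deviation used to initialize A, and the toy sizes are illustrative assumptions. The sketch does not model QLoRA, where W0 would be stored in 4-bit form and dequantized during computation.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Linear layer with a frozen weight W0 plus a low-rank update.

    Computes y = W0 x + (alpha / r) * B (A x). Only A and B would be trained.
    """

    def __init__(self, w0, r, alpha, seed=0):
        self.w0 = w0  # frozen pre-trained weight, shape (d_out, d_in)
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        # A: (r, d_in), small Gaussian init (std 0.01 is an assumption)
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        # B: (d_out, r), zero init, so the update B A x starts at zero
        self.b = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.w0, x)
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * u for h, u in zip(base, update)]

    def trainable_params(self):
        return sum(len(row) for row in self.a) + sum(len(row) for row in self.b)


def lora_param_fraction(d_out, d_in, r):
    """Trainable LoRA parameters as a fraction of the full weight matrix."""
    return r * (d_in + d_out) / (d_in * d_out)


if __name__ == "__main__":
    w0 = [[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]]  # toy 2x3 frozen weight
    layer = LoRALinear(w0, r=2, alpha=4)
    x = [1.0, 0.5, 2.0]
    # With B = 0 the adapted layer reproduces the frozen layer exactly.
    print(layer.forward(x), matvec(w0, x))
    print(layer.trainable_params())  # 2*3 + 2*2 = 10
    print(f"{lora_param_fraction(4096, 4096, 8):.4%}")
```

For a 4096 × 4096 projection with r = 8, the trainable parameter count falls from 16,777,216 to 65,536, about 0.39% of the layer. This is why LoRA's optimizer state and gradient memory are small compared with full fine-tuning.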

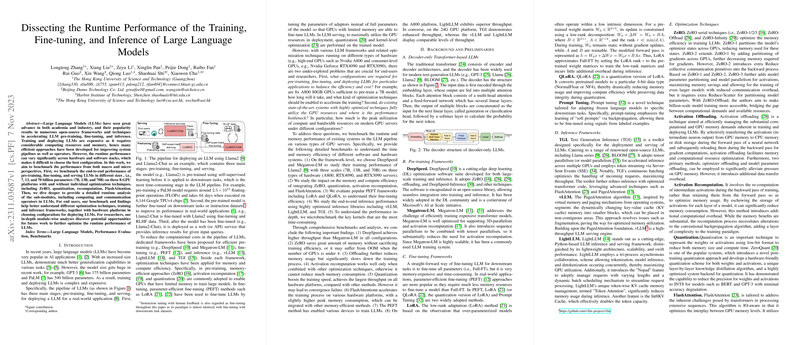

Inference Serving: Scaling for Real-World Application

Deploying fine-tuned LLMs so that they can respond to end-user queries, referred to here as "serving," depends heavily on optimized inference libraries. The paper evaluates popular frameworks such as vLLM, LightLLM, and TGI (Text Generation Inference) across different hardware settings and finds that their relative throughput depends on the hardware. TGI performs best on standard GPU platforms, while LightLLM leads on high-performance GPUs. Latency measurements likewise underscore the importance of hardware selection: datacenter GPUs such as the NVIDIA A800 deliver lower latency than consumer-grade alternatives.
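Throughput and latency, the two metrics this comparison relies on, can be computed from per-request timings. The following is a hypothetical measurement helper, not the paper's benchmarking harness. The `RequestRecord` fields, the nearest-rank percentile, and the definition of throughput as generated tokens divided by the wall-clock span of the run are all assumptions. The sample timings are made up for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    start_s: float        # time the request was sent
    first_token_s: float  # time the first output token arrived
    end_s: float          # time the final output token arrived
    output_tokens: int    # number of generated tokens


def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records):
    """Aggregate throughput and latency statistics for one benchmark run."""
    wall_s = max(r.end_s for r in records) - min(r.start_s for r in records)
    latencies = [r.end_s - r.start_s for r in records]
    ttfts = [r.first_token_s - r.start_s for r in records]  # time to first token
    total_tokens = sum(r.output_tokens for r in records)
    return {
        "throughput_tok_per_s": total_tokens / wall_s,
        "mean_latency_s": sum(latencies) / len(latencies),
        "p50_latency_s": percentile(latencies, 50),
        "p99_latency_s": percentile(latencies, 99),
        "mean_ttft_s": sum(ttfts) / len(ttfts),
    }


if __name__ == "__main__":
    # Made-up timings for two overlapping requests (illustrative only).
    records = [
        RequestRecord(start_s=0.0, first_token_s=0.5, end_s=2.0, output_tokens=100),
        RequestRecord(start_s=1.0, first_token_s=1.25, end_s=4.0, output_tokens=200),
    ]
    for name, value in summarize(records).items():
        print(f"{name}: {value:.3f}")
```

Throughput measured over the whole run rewards frameworks that batch concurrent requests well. Per-request latency, by contrast, captures what an individual user experiences. The two metrics can therefore rank frameworks differently.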

Future Directions and Opportunities

The benchmarks and detailed runtime analyses show both how much room remains for improvement and concrete ways to optimize LLM runtime performance. This is valuable to end-users and researchers alike. For end-users, the benchmarks guide the choice of configurations and frameworks best suited to deploying LLMs efficiently. For researchers, the untapped potential, especially in optimization techniques and GPU resource utilization, invites further work to reduce costs and improve performance.

Conclusion

Improving LLM runtime performance requires balancing computational cost against model efficiency. Well-chosen optimization strategies and a solid understanding of hardware capabilities make it possible to push these large models further still. The benchmarks presented in the paper give the AI community a concrete foundation for optimizing LLMs going forward.