Essay on "Knowledge Editing for LLMs: A Survey"

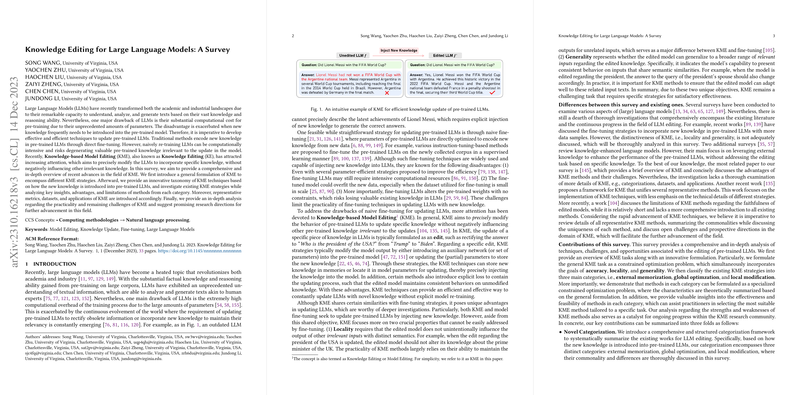

The survey "Knowledge Editing for LLMs: A Survey" addresses a critical challenge in natural language processing (NLP): efficiently and precisely updating large language models (LLMs) with new knowledge. LLMs have transformed NLP through their capacity to analyze and generate text at a level comparable to that of human experts. Pre-training them, however, is resource-intensive, and repeatedly retraining them to encode new or corrected information imposes a further computational burden. The survey presents a comprehensive overview of Knowledge-based Model Editing (KME), a family of techniques that introduce specific updates into LLMs efficiently while preserving the integrity of their existing knowledge.

Main Contributions

The survey systematically groups existing KME strategies into three primary categories, according to how new knowledge is introduced into the LLM: External Memorization, Global Optimization, and Local Modification.

- External Memorization uses additional parameters or memory to store new knowledge without altering the model's pre-trained weights. This approach scales well and minimally disrupts existing knowledge, because the extra memories or parameters can be readily adjusted to accommodate a wide range of edits. Its main challenges are managing that memory and retrieving the relevant stored knowledge efficiently.

- Global Optimization updates all model parameters through fine-tuning strategies constrained to minimize their impact on non-target knowledge. The survey notes that these methods focus on the generality of the incorporated knowledge, and they can incorporate new knowledge effectively, but they often suffer from computational inefficiency because so many parameters must be optimized.

- Local Modification seeks to identify and update precisely those parameters that encode the knowledge to be changed. Because knowledge unrelated to the update is left untouched, this strategy preserves the model's locality well. However, this precision can come at the cost of reduced scalability.
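To make the three categories concrete, the following sketch treats a single linear layer as a toy key-value memory, which is a deliberate simplification and not a method taken from the survey. All names (`MemoryEditedLayer`, `constrained_finetune`, `rank_one_edit`) are hypothetical. The external memory stores edits beside frozen weights; the global variant runs plain gradient descent on every weight with a penalty that keeps preserved outputs fixed; the local variant is a closed-form rank-one update of one layer that resembles the updates used by locate-then-edit methods such as ROME, with their key-covariance weighting omitted.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, k: Vector) -> Vector:
    """Toy 'layer': multiply weight matrix W by key vector k."""
    return [sum(w * x for w, x in zip(row, k)) for row in W]


# External Memorization: pre-trained weights stay frozen; edits live in a side memory.
class MemoryEditedLayer:
    def __init__(self, W: Matrix) -> None:
        self.W = W                                   # never modified
        self.memory: Dict[Tuple[float, ...], Vector] = {}

    def edit(self, key: Vector, value: Vector) -> None:
        self.memory[tuple(key)] = value              # adding an edit = adding an entry

    def __call__(self, key: Vector) -> Vector:
        # Exact-match retrieval only; a real system needs fuzzier scoping for paraphrases.
        hit = self.memory.get(tuple(key))
        return hit if hit is not None else matvec(self.W, key)


# Global Optimization: gradient descent on every weight, with a penalty that keeps
# the outputs for "preserved" keys close to their pre-edit values.
def constrained_finetune(W0: Matrix, k_star: Vector, v_star: Vector,
                         keep_keys: Sequence[Vector], lam: float = 1.0,
                         lr: float = 0.1, steps: int = 200) -> Matrix:
    W = [row[:] for row in W0]
    # (key, target, weight) triples: the edit itself plus the preservation terms.
    terms = [(k_star, v_star, 1.0)] + [(k, matvec(W0, k), lam) for k in keep_keys]
    for _ in range(steps):
        grad = [[0.0] * len(row) for row in W]
        for k, target, weight in terms:
            err = [o - t for o, t in zip(matvec(W, k), target)]
            for i, e in enumerate(err):              # gradient of weight * ||W k - t||^2
                for j, kj in enumerate(k):
                    grad[i][j] += 2.0 * weight * e * kj
        W = [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(W, grad)]
    return W


# Local Modification: closed-form rank-one update confined to a single layer.
def rank_one_edit(W: Matrix, k_star: Vector, v_star: Vector) -> Matrix:
    """W' = W + (v* - W k*) k*^T / (k*^T k*), so W' k* = v* and the outputs
    for keys orthogonal to k* are unchanged."""
    residual = [v - o for v, o in zip(v_star, matvec(W, k_star))]
    norm = sum(x * x for x in k_star)
    return [[w + r * kj / norm for w, kj in zip(row, k_star)]
            for row, r in zip(W, residual)]


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]           # identity: each key recalls itself
    k_edit, k_other = [1.0, 0.0], [0.0, 1.0]
    new_value = [0.0, 5.0]

    mem = MemoryEditedLayer(W)
    mem.edit(k_edit, new_value)
    print(mem(k_edit), mem(k_other))       # [0.0, 5.0] [0.0, 1.0]

    W_local = rank_one_edit(W, k_edit, new_value)
    print(matvec(W_local, k_edit), matvec(W_local, k_other))  # [0.0, 5.0] [0.0, 1.0]

    W_global = constrained_finetune(W, k_edit, new_value, keep_keys=[k_other])
    print([round(x, 3) for x in matvec(W_global, k_edit)])    # [0.0, 5.0]
```

In this toy the trade-offs described above show up directly: the external memory answers only keys it has stored exactly (the retrieval problem), the rank-one edit leaves orthogonal keys untouched (locality), and the fine-tuning loop computes gradients for every weight, which is where its cost grows with model size.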

Evaluation and Future Prospects

The survey delineates standard metrics for evaluating KME strategies: accuracy, locality, generality, retainability, and scalability. Together, these metrics support a nuanced assessment of how well each method incorporates new knowledge without compromising the information already encoded in the model.
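As a rough illustration, several of these metrics can be computed on a toy prompt-to-answer model. The sketch below is one plausible operationalization under stated assumptions, not the survey's formal definitions: it uses exact-match answers, the function names `success_rate` and `locality` are hypothetical, and the "edit" is a simple table override rather than a real KME method.

```python
from typing import Callable, Sequence, Tuple

Model = Callable[[str], str]          # toy model: prompt -> answer


def success_rate(model: Model, pairs: Sequence[Tuple[str, str]]) -> float:
    """Fraction of (prompt, target) pairs the model answers with the target.

    Accuracy: pairs are the edit prompts themselves.
    Generality: pairs are paraphrases or related prompts of the edits.
    Retainability: pairs are edits made earlier in a sequence, re-checked
    after later edits have been applied.
    """
    return sum(model(p) == t for p, t in pairs) / len(pairs)


def locality(base: Model, edited: Model, unrelated: Sequence[str]) -> float:
    """Fraction of unrelated prompts whose answer the edit left unchanged."""
    return sum(base(p) == edited(p) for p in unrelated) / len(unrelated)


# Scalability is not a single number here: one would track the metrics above
# as the number of applied edits grows.

if __name__ == "__main__":
    base_answers = {"Capital of France?": "Paris", "Capital of Italy?": "Rome"}
    # Hypothetical counterfactual edit, stored as a simple table override.
    edited_answers = {**base_answers, "Capital of France?": "Lyon"}

    def base(prompt: str) -> str:
        return base_answers.get(prompt, "unknown")

    def edited(prompt: str) -> str:
        return edited_answers.get(prompt, "unknown")

    print(success_rate(edited, [("Capital of France?", "Lyon")]))      # 1.0 (accuracy)
    print(success_rate(edited, [("France's capital city?", "Lyon")]))  # 0.0 (generality)
    print(locality(base, edited, ["Capital of Italy?"]))               # 1.0
```

The table-override "edit" scores perfectly on accuracy and locality but fails the paraphrase, which is exactly the kind of gap that evaluating several metrics together is meant to expose.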

KME has substantial practical and theoretical implications. Practically, it offers a pathway to update LLMs efficiently, keeping them relevant in dynamic real-world settings. Theoretically, it introduces new paradigms for model adaptability and transfer learning. Future research directions suggested in the survey include balancing the trade-off between locality and generality, deepening the theoretical understanding of KME, and developing methods that support large-scale, continuous edits.

The survey effectively captures the current landscape of KME strategies, providing a structured analysis of methodologies and future challenges. In doing so, it highlights the need for efficient model-updating strategies and sets the stage for innovations that could substantially reduce computational overhead while maintaining model accuracy, a vital advance in the ongoing evolution of NLP technologies.