Overview of "Auto-Instruct: Automatic Instruction Generation and Ranking for Black-Box LLMs"

The paper "Auto-Instruct: Automatic Instruction Generation and Ranking for Black-Box LLMs," by Zhihan Zhang et al., introduces Auto-Instruct, a method that automates the generation and ranking of instructions for black-box LLMs. LLM performance depends heavily on the quality of the instruction, and writing instructions by hand is both labor-intensive and subjective. The paper describes these difficulties and proposes an automatic pipeline that uses the generative capabilities of the LLMs themselves to improve instruction design.

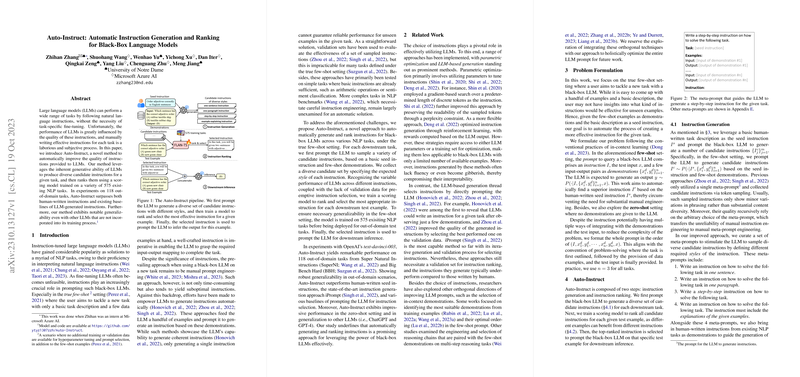

Auto-Instruct is a two-step process: instruction generation followed by instruction ranking. First, the method prompts an LLM to generate a variety of candidate instructions for a given task. A trained scoring model then ranks these candidates by their effectiveness, measured as the downstream performance the LLM reaches when given each instruction. Experiments on 118 out-of-domain tasks show that Auto-Instruct outperforms manually written instructions and generalizes across different models and settings.
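The two steps can be sketched as a small generate-then-rank loop. This is an illustrative sketch only, not the authors' code: the function names, the meta-prompt wording, and the toy `generate` and `score` stand-ins are all assumptions. In practice `generate` would call a black-box LLM and `score` would call the trained ranking model.

```python
from typing import Callable, List, Tuple


def auto_instruct(
    task: str,
    demos: List[str],
    meta_prompts: List[str],
    generate: Callable[[str], List[str]],
    score: Callable[[str, str], float],
) -> List[Tuple[float, str]]:
    """Generate candidate instructions, then rank them by predicted score."""
    candidates: List[str] = []
    for meta_prompt in meta_prompts:
        # Each meta-prompt asks for a different instruction style.
        prompt = meta_prompt.format(task=task, demos="\n".join(demos))
        candidates.extend(generate(prompt))
    # Drop exact duplicates while keeping first-seen order.
    unique = list(dict.fromkeys(candidates))
    scored = [(score(task, c), c) for c in unique]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Hypothetical meta-prompts; the wording is invented for this sketch.
META_PROMPTS = [
    "Write a one sentence instruction for: {task}\n{demos}",
    "Write a step-by-step instruction for: {task}\n{demos}",
]


def toy_generate(prompt: str) -> List[str]:
    """Stand-in for sampling several outputs from a black-box LLM."""
    if "one sentence" in prompt:
        return ["Classify the sentiment.", "Classify the sentiment."]
    return ["Read the review, then label it positive or negative."]


def toy_score(task: str, instruction: str) -> float:
    """Stand-in for the ranking model; it simply favors longer instructions."""
    return float(len(instruction.split()))


if __name__ == "__main__":
    ranked = auto_instruct(
        "sentiment classification",
        ["Review: great film -> positive"],
        META_PROMPTS,
        toy_generate,
        toy_score,
    )
    for value, instruction in ranked:
        print(f"{value:.1f}  {instruction}")
```

Passing the generator and scorer in as callables keeps the loop independent of any particular LLM API, which suits the black-box setting the paper targets.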

Technical Approach and Methodology

The technical premise of Auto-Instruct revolves around leveraging the generative abilities of black-box LLMs to automate instruction creation. This is executed in two main phases:

- Instruction Generation: This phase uses style-specific meta-prompts to elicit a diverse set of candidate instructions from the LLM. Each meta-prompt specifies different expected characteristics of the instruction, such as its length and whether it lays out step-by-step detail. The candidates are drawn with nucleus sampling, which yields a broad range of instructions. The benefit is that some candidates may suit the downstream task better than a single manually written instruction, which reflects its author's subjective preferences.

- Instruction Ranking: This phase uses a scoring model based on FLAN-T5-Large to rank the candidate instructions by their predicted downstream performance. The scoring model is trained on 575 different NLP tasks so that it generalizes to tasks absent from the training data. Training aligns the predicted scores with the measured downstream performance through a list-wise loss.
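To make the list-wise objective concrete, the sketch below uses one common formulation: a KL divergence between the softmax of the measured performances and the softmax of the predicted scores for all candidates of one example. The summary above does not give the paper's exact loss, so this is an assumed form for illustration, written in plain Python rather than a training framework.

```python
import math
from typing import List


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax over one list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def listwise_kl_loss(predicted: List[float], actual: List[float]) -> float:
    """KL(softmax(actual) || softmax(predicted)) for one candidate list.

    Assumed formulation: the loss is zero when the predicted scores induce
    the same distribution over candidates as the measured performances.
    """
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("score lists must be non-empty and of equal length")
    target = softmax(actual)
    model = softmax(predicted)
    return sum(t * (math.log(t) - math.log(m)) for t, m in zip(target, model))


if __name__ == "__main__":
    # Hypothetical downstream scores for three candidate instructions.
    measured = [0.2, 0.5, 0.9]
    print(listwise_kl_loss([0.2, 0.5, 0.9], measured))  # ordering agrees
    print(listwise_kl_loss([0.9, 0.5, 0.2], measured))  # ordering reversed
```

Because the loss compares whole distributions over the candidate list, it penalizes a mis-ordered ranking even when each individual score is only slightly off.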

Results and Implications

The researchers evaluated Auto-Instruct on two benchmarks: Super Natural Instructions (SuperNI) and BIG-Bench Hard (BBH). They compared it against several baselines, including human-written instructions, LM-based selection methods, and an on-the-fly instruction generation approach, using ROUGE-L and accuracy as quantitative metrics:

- In few-shot settings, Auto-Instruct improved on the other methods, including a 6% relative improvement over human-written instructions on the SuperNI tasks.

- The approach also outperformed the baselines in zero-shot settings and generalized to other LLMs, including GPT-4 and ChatGPT, which were not involved in the instruction generation process.
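For readers unfamiliar with the metrics, the sketch below shows how a ROUGE-L score and a relative improvement can be computed. It assumes whitespace tokenization and the balanced F1 variant of ROUGE-L, which may differ from the evaluation scripts used in the paper, and the numbers in the usage lines are made up for illustration.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(prediction: str, reference: str) -> float:
    """ROUGE-L as the F1 of LCS-based precision and recall (assumed variant)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    lcs = lcs_length(pred_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def relative_improvement(baseline: float, new: float) -> float:
    """Relative gain of `new` over `baseline`, as a fraction of the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline


if __name__ == "__main__":
    print(rouge_l_f1("the cat sat on the mat", "the cat is on the mat"))
    # Hypothetical scores, chosen only to illustrate a 6% relative gain.
    print(relative_improvement(50.0, 53.0))
```

A relative improvement is measured against the baseline score, so a 6% relative gain is smaller in absolute points than a 6-point gain unless the baseline is 100.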

Future Directions and Speculation

The paper opens several avenues for future research on instruction generation. Because it reduces reliance on human input, Auto-Instruct could in principle be scaled up to complex, changing tasks that need context-sensitive instructions. Such automation could lay the groundwork for interactions in which tasks are defined and modified dynamically, adapting to user needs with little manual intervention.

Researchers could also explore combining Auto-Instruct with other techniques, such as reinforcement learning frameworks, to improve model adaptability and instruction efficacy. Although the system already generalizes across many NLP tasks, it could be extended to multilingual settings or enriched with domain-specific knowledge.

Conclusion

Auto-Instruct is a significant step toward automating a process that has traditionally depended on human expertise. By reducing the manual effort needed to write effective instructions, the work contributes to the broader goal of making LLMs more capable and accessible for complex problem-solving. Its impact is likely to be felt in fields that rely on NLP technologies, and with further improvements such automated systems could change how users interact with AI and how tasks are carried out in increasingly complex domains.