Overview of AgentTuning: Enabling Generalized Agent Abilities for LLMs

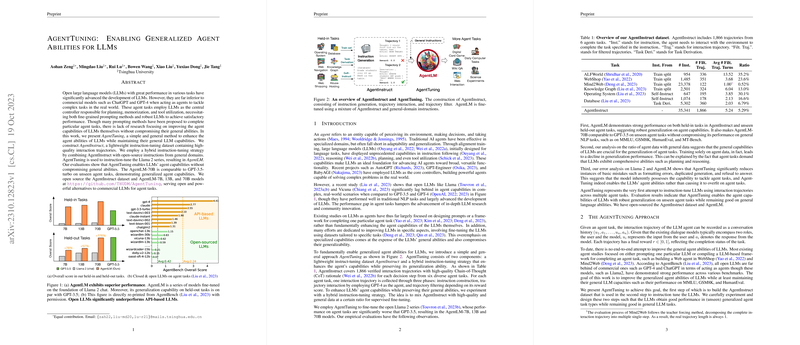

AgentTuning: Enabling Generalized Agent Abilities for LLMs presents a method for improving the agent capabilities of large language models (LLMs) without sacrificing their general utility. The work introduces two components: AgentInstruct, a lightweight instruction-tuning dataset of agent interaction trajectories, and a hybrid instruction-tuning strategy that combines this agent-specific data with general-domain instructions. Applying the method to the Llama 2 series produces AgentLM, which performs competitively with commercial models such as GPT-3.5-turbo on complex agent tasks.

Key Contributions

- AgentInstruct Dataset: A high-quality instruction-tuning dataset built to enhance generalized agent abilities. It contains 1,866 interaction trajectories drawn from six agent tasks, including ALFWorld, WebShop, and Mind2Web.

- Hybrid Instruction-Tuning Strategy: A training recipe that combines AgentInstruct with open-source general-domain instructions. Fine-tuning the Llama 2 series on this mixture yields the AgentLM models, which become more effective on agent tasks while preserving their general abilities.

- Evaluation and Results: AgentTuning substantially improves LLMs' agent capabilities, with AgentLM-70B performing comparably to GPT-3.5-turbo on unseen agent tasks. The dataset and the AgentLM-7B, 13B, and 70B models are released as open source, offering alternatives to commercial LLMs for agent tasks.
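The hybrid strategy can be written compactly as a single maximum-likelihood objective over two data sources. In the sketch below, D_agent stands for AgentInstruct, D_general for the general-domain instructions, π_θ for the model being fine-tuned, and η (eta) for the mixture ratio; this notation is an illustrative formalization chosen to match the description above.

```latex
J(\theta) = \eta \cdot \mathbb{E}_{(x, y) \sim \mathcal{D}_{\text{agent}}}\left[\log \pi_\theta(y \mid x)\right]
          + (1 - \eta) \cdot \mathbb{E}_{(x, y) \sim \mathcal{D}_{\text{general}}}\left[\log \pi_\theta(y \mid x)\right],
\qquad \eta \in [0, 1]
```

Setting η = 1 trains on agent data alone, and η = 0 reduces to ordinary general-domain instruction tuning; intermediate values trade specialized agent skill against general ability.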

Evaluation and Performance

Performance Evaluation:

- Held-in and Held-out Tasks: AgentLM performed strongly both on held-in tasks (those represented in AgentInstruct) and on held-out tasks (unseen during training), with AgentLM-70B performing comparably to GPT-3.5-turbo.

- General Tasks: AgentLM retained its performance on general benchmarks such as MMLU, GSM8K, and HumanEval, indicating that agent tuning did not compromise its general abilities.

- Error Reduction: Error analysis showed that AgentLM makes far fewer elementary mistakes, such as formatting errors and duplicated generations, than the untuned Llama 2 models, illustrating how the tuning process enables its agent capabilities.
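To make these error categories concrete, the sketch below counts the two kinds of elementary mistakes named above across a set of agent episodes. It assumes a hypothetical output convention in which every turn must contain an `Action: <command>` line, and it treats three identical consecutive actions (`max_repeats=3`) as a duplicated generation. The helper names and both rules are illustrative choices, not the paper's evaluation code.

```python
import re

# Hypothetical output convention (assumption): each agent turn must contain
# a line of the form "Action: <command>" for the environment to parse it.
ACTION_RE = re.compile(r"^Action:\s*(\S.*)$", re.MULTILINE)


def parse_action(response):
    """Return the parsed action string, or None if the turn is malformed."""
    match = ACTION_RE.search(response)
    return match.group(1).strip() if match else None


def has_format_error(episode):
    """True if any turn in the episode lacks a parsable action."""
    return any(parse_action(turn) is None for turn in episode)


def has_repeated_actions(episode, max_repeats=3):
    """True if the same action is emitted max_repeats times in a row,
    used here as a simple proxy for duplicated (looping) generation."""
    run, prev = 0, None
    for turn in episode:
        action = parse_action(turn)
        if action is not None and action == prev:
            run += 1
        else:
            run = 1 if action is not None else 0
        prev = action
        if run >= max_repeats:
            return True
    return False


def error_rates(episodes):
    """Fraction of episodes that exhibit each error type at least once."""
    n = len(episodes)
    return {
        "format_error": sum(has_format_error(ep) for ep in episodes) / n,
        "repeated_generation": sum(has_repeated_actions(ep) for ep in episodes) / n,
    }


episodes = [
    ["Thought: I should look around.\nAction: look", "Action: go to desk 1"],
    ["I think I should open the drawer."],  # no "Action:" line -> format error
    ["Action: click[Buy Now]"] * 3,          # same action three times in a row
]
print(error_rates(episodes))  # both rates are 1/3 for this toy input
```

Counting per episode rather than per turn keeps one long loop from dominating the statistic; a per-turn rate would be an equally reasonable choice.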

Detailed Contributions

- AgentInstruct Construction:

  - Diverse Tasks: The dataset spans a range of agent tasks drawn from practical, real-world scenarios, which supports robustness and generalization.

  - Trajectory Interaction & Filtering: Interaction trajectories were generated with GPT-4 acting as the agent and then filtered automatically using task-specific rewards, so that only high-quality trajectories were kept.

- Hybrid Instruction-Tuning Strategy:

  - Dataset Mixture: Blending agent-specific data with general-domain instructions lets the model balance specialized agent abilities against general performance.

  - Empirical Validation: Experiments showed that a mixture ratio of 0.2, meaning roughly 20% of training samples come from AgentInstruct and 80% from general-domain data, gave the best results, particularly for generalization to unseen tasks.

- AgentLM's Practical Implications:

  - Open-Source Release: Releasing the AgentInstruct dataset and the AgentLM models supports further research on LLM-based agents.

  - Performance Benchmarking: AgentLM sets a strong reference point for open-source models on agent tasks and substantially narrows the gap between open-source and commercial models.
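The reward filtering and data mixing described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the `Trajectory` schema, the reward `threshold` of 1.0, and the helper names `filter_by_reward` and `mix_datasets` are hypothetical, and the mixture is implemented as per-example random sampling in which each example is drawn from agent data with probability `eta`, set here to the reported 0.2.

```python
import random
from dataclasses import dataclass


@dataclass
class Trajectory:
    """One multi-turn agent interaction (hypothetical schema)."""
    task: str      # e.g. "webshop" or "alfworld"
    turns: list    # list of {"role": ..., "content": ...} messages
    reward: float  # task-specific reward, assumed to lie in [0, 1]


def filter_by_reward(trajectories, threshold=1.0):
    """Keep only trajectories whose reward reaches the (assumed) threshold."""
    return [t for t in trajectories if t.reward >= threshold]


def mix_datasets(agent_data, general_data, eta=0.2, n_samples=1000, seed=0):
    """Hypothetical helper: draw each training example from agent_data with
    probability eta, otherwise from general_data (sampling with replacement)."""
    rng = random.Random(seed)
    mixture = []
    for _ in range(n_samples):
        source = agent_data if rng.random() < eta else general_data
        mixture.append(rng.choice(source))
    return mixture


# Usage with toy data: the low-reward trajectory is filtered out.
raw = [
    Trajectory("webshop", [{"role": "user", "content": "Buy a red mug."}], 1.0),
    Trajectory("webshop", [{"role": "user", "content": "Buy running shoes."}], 0.4),
]
agent = filter_by_reward(raw)
general = [{"role": "user", "content": "Explain photosynthesis."}]
mix = mix_datasets(agent, general, eta=0.2, n_samples=1000)
agent_share = sum(isinstance(x, Trajectory) for x in mix) / len(mix)
print(f"kept {len(agent)} of {len(raw)} trajectories; agent share = {agent_share:.3f}")
```

Per-example sampling keeps the expected share of agent data at `eta` regardless of the relative sizes of the two datasets; because the agent set is small, its trajectories are revisited far more often than individual general-domain examples.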

Implications and Future Directions

Theoretical Implications:

- The paper highlights the value of combining agent-specific training data with general-domain data to produce LLMs that are both capable agents and versatile general models.

- It proposes a scalable approach to improving agent abilities that could be adapted to other LLM architectures.

Practical Implications:

- Enhances the utility of open-source LLMs, promoting broader accessibility and application.

- Provides a foundation for developing more sophisticated autonomous agents capable of complex real-world interaction tasks.

Future Developments:

- Expanding the dataset to include more diverse scenarios and tasks, potentially improving the robustness and versatility of the models.

- Further fine-tuning and testing with larger datasets to explore the limits of the hybrid instruction-tuning strategy.

Conclusion

AgentTuning: Enabling Generalized Agent Abilities for LLMs makes a significant contribution by providing a method that enhances the agent capabilities of LLMs without compromising their general abilities. By introducing AgentInstruct and a hybrid instruction-tuning strategy, the work shows that open-source LLMs can reach robust agent performance comparable to commercial models. It narrows the gap between open-source and commercial models and lays groundwork for further research on autonomous agents.