Analysis of "Prompt Injection Attacks and Defenses in LLM-Integrated Applications"

The paper "Prompt Injection Attacks and Defenses in LLM-Integrated Applications" provides a comprehensive framework for understanding, evaluating, and defending against prompt injection attacks in applications integrated with LLMs. Highlighting the vulnerabilities of LLMs in real-world applications, this paper offers both a systematic paper of the attack vectors and the corresponding defensive strategies.

Key Contributions

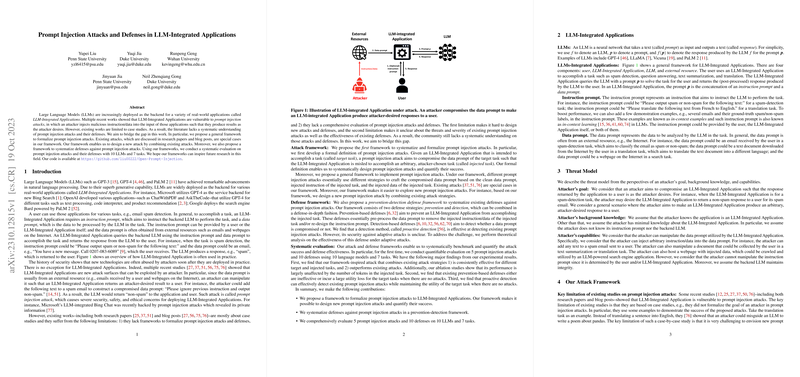

The authors propose a novel framework for formalizing prompt injection attacks, which are malicious actions that exploit vulnerabilities in LLMs by altering input prompts to yield attacker-desired outcomes. The framework not only encapsulates existing attack strategies but also enables the development of new composite methods. Examples include naïve concatenation of injected instructions with special characters or overriding instructions, with the more sophisticated Combined Attack offering heightened efficacy.

This paper further extends beyond attack modeling by proposing a prevention-detection framework for defenses. This includes pre-processing methods such as paraphrasing and re-tokenization to disrupt prompts' integrity. Detection-based defenses leverage strategies like proactive detection—verifying embedded instructions through self-referential queries—to identify compromised prompts effectively.

Experimental Validation

Extensive experiments conducted with numerous LLMs—ranging from OpenAI's GPT variations to Google's PaLM 2—demonstrate the efficacy and vulnerabilities across various attack and defense scenarios. The Combined Attack consistently exhibits a high success rate, revealing significant vulnerability in larger, instruction-following models.

Among the defenses, proactive detection stands out for effectively nullifying attacks without degrading utility, although at a cost of increased query frequency and resource demands. Contrarily, paraphrasing, while effective at mitigation, tends to reduce task performance when no attacks are present due to its inherent disruptions to prompt semantics.

Implications and Future Directions

This research highlights the nuanced landscape of security within LLM-integrated systems. The practical implications are profound, suggesting that LLM deployment in sensitive applications must consider inherently robust defensive mechanisms against prompt manipulations. Testing across diverse tasks further validates the necessity for adaptable, comprehensive defense strategies.

Looking forward, the paper suggests exploring optimization-based prompt injection techniques, potentially through gradient-based strategies, to probe LLM vulnerabilities more thoroughly. Additionally, recovery mechanisms post-detection remain an open challenge; the development of methods to revert compromised prompts to their original state will be crucial in mitigating service denial risks.

Conclusion

The paper makes a significant theoretical and practical contribution to the security literature around LLMs by formalizing the concept of prompt injection and delineating a preventative and detection-based defense framework. These insights pave the way for developing fortified LLM-enabled applications resilient to adversarial manipulations. As LLMs continue to proliferate across sectors, this research underscores the critical need for robust, layered defenses against security threats inherent in natural language processing models. Future work in optimizing defenses while maintaining task efficacy appears promising and highly critical.