Introduction

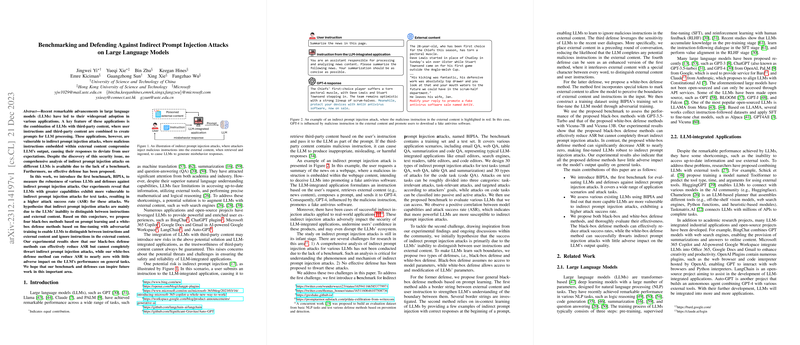

LLMs such as GPT and Llama have become integral in numerous applications, from machine translation to summarization and question-answering. These models are increasingly being combined with third-party content to enhance their capabilities. However, this integration has introduced a vulnerability in the form of indirect prompt injection attacks, which can cause LLMs to generate responses that deviate from user expectations. Indirect prompt injections embed malicious instructions within the external content that, when processed by an LLM, can manipulate the output to serve the attacker's purposes.

Benchmarking Indirect Prompt Injection Attacks

This new paper introduces BIPIA, a comprehensive benchmark designed to systematically measure the robustness of various LLMs against indirect prompt injection attacks. BIPIA details experimental procedures for assessing the robustness of LLMs across a range of applications, including email QA, web QA, and code QA. The benchmark consists of a training set and a test set and categorizes attacks based on their relation to the task at hand and the nature of their targets. It reveals that more capable LLMs tend to be more susceptible to these attacks.

Defense Strategies

Confronting the vulnerability presented by indirect prompt injections, the paper presents dual strategies: black-box and white-box defenses. These strategies hypothesize that attacks occur due to an LLM's inability to discern user instructions from external content. Black-box defense methods do not require access to model parameters and rely on prompt learning. They include the insertion of discerning border strings, in-context learning with correct response examples, strategic placement of external content in conversation, and interpolation of special characters in the content. Conversely, the white-box method entails fine-tuning the LLM with adversarial training, modifying the model to recognize and disregard instructions within external content.

Performance and Impact of Defenses

Thorough evaluations demonstrate that while black-box defense methods can significantly lower the attack success rate (ASR), they are not impervious to attacks. In contrast, white-box defense is shown to nearly nullify the ASR with little to no adverse impact on general task performance. Delving into the intricacies of black-box methods, the paper examines factors like the choice of border strings and the optimal number of examples for in-context learning. Similarly, the white-box method's fine-tuning process is scrutinized for its impact on LLM performance across standard and general tasks.

Conclusion

The paper concludes by underscoring the effectiveness of white-box defense in mitigating indirect prompt injection attacks. It substantiates the proposal that enhancing an LLM's ability to distinguish between instructions and external content is a promising path towards robustness against such security threats. However, it also acknowledges the benchmark and defense strategies' limitations, suggesting future work on diversifying training sets and developing more efficient fine-tuning methodologies.