QUIK: Towards End-to-end 4-Bit Inference on Generative LLMs

The paper addresses an important problem in contemporary AI research: speeding up LLM inference through quantization. The authors observe that weight-only quantization reduces memory traffic but still performs the matrix multiplications in FP16, so it cannot fully exploit the hardware during inference of GPT-family models. To close this gap, they propose QUIK, a hybrid method for accurate post-training quantization of both weights and activations to 4 bits.

Core Contributions

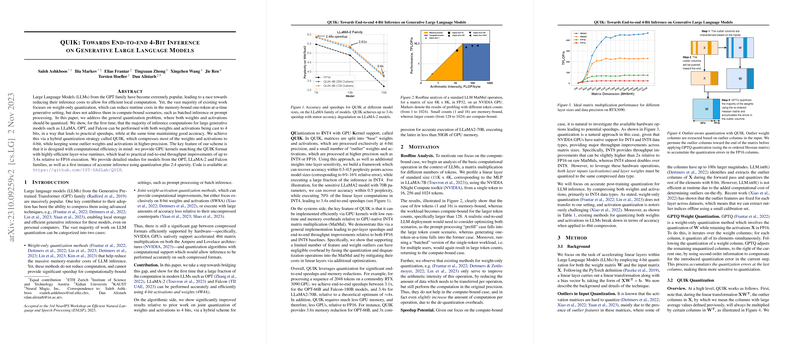

The authors study 4-bit quantization of both weights and activations in generative LLMs. The technical crux of their approach is a hybrid scheme: most weights and activations are compressed to 4 bits, while a small number of outlier features are retained in higher precision, such as INT8 or FP16. This format is practical because NVIDIA GPUs natively support 4-bit integer computation on their tensor cores, which is the source of the considerable speedups.
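The hybrid split can be illustrated with a minimal pure-Python sketch. It assumes symmetric per-token INT4 quantization to the range [-7, 7] and selects outlier columns by their largest absolute value on calibration data. The function names (`select_outlier_columns`, `quantize_int4_rows`, `split_hybrid`) are hypothetical, and QUIK's actual outlier-selection rule, scale layout, and kernels may differ.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def select_outlier_columns(calib: Matrix, num_outliers: int) -> List[int]:
    """Return indices of the columns with the largest absolute value (hypothetical heuristic)."""
    n_cols = len(calib[0])
    col_max = [max(abs(row[j]) for row in calib) for j in range(n_cols)]
    ranked = sorted(range(n_cols), key=lambda j: col_max[j], reverse=True)
    return sorted(ranked[:num_outliers])


def quantize_int4_rows(x: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Symmetric per-row (per-token) quantization to signed integers in [-7, 7]."""
    q_rows, scales = [], []
    for row in x:
        max_abs = max((abs(v) for v in row), default=0.0)
        scale = max_abs / 7.0 if max_abs > 0 else 1.0
        q_rows.append([max(-7, min(7, round(v / scale))) for v in row])
        scales.append(scale)
    return q_rows, scales


def split_hybrid(x: Matrix, outliers: List[int]):
    """Quantize non-outlier columns to INT4; keep outlier columns in full precision."""
    outlier_set = set(outliers)
    base_cols = [j for j in range(len(x[0])) if j not in outlier_set]
    base = [[row[j] for j in base_cols] for row in x]
    kept = [[row[j] for j in outliers] for row in x]
    q, scales = quantize_int4_rows(base)
    return base_cols, q, scales, kept


# Two tokens, four features; feature 2 carries a large outlier.
X = [[0.1, -0.2, 8.0, 0.3],
     [0.05, 0.4, -9.5, -0.1]]
outliers = select_outlier_columns(X, num_outliers=1)
base_cols, q, scales, kept = split_hybrid(X, outliers)
print(outliers, base_cols, q, kept)
# -> [2] [0, 1, 3] [[2, -5, 7], [1, 7, -2]] [[8.0], [-9.5]]
```

For contrast, `quantize_int4_rows([X[0]])` quantizes the first token with the outlier included and returns `[[0, 0, 7, 0]]`: the single large value forces a scale of about 1.14, so every small entry rounds to zero. Keeping the outlier column aside preserves the information in the remaining features.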

An integral part of QUIK is a set of custom GPU kernels built around this hybrid format, so that each layer runs the bulk of its computation as a fast INT4 matrix multiplication and handles the small outlier part in higher precision. The implementation delivers practical throughput improvements, with end-to-end speedups of up to 3.4 times over FP16 execution.
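The arithmetic such a layer performs can be sketched in plain Python, assuming one scale per token for activations, one scale per output channel for weights, and an additive full-precision path for the outlier columns. This is an illustration of the decomposition, not the authors' CUDA implementation; `hybrid_matmul` and its argument layout are hypothetical.

```python
from typing import List


def hybrid_matmul(
    xq: List[List[int]], x_scales: List[float],          # INT4 activations, one scale per token
    wq: List[List[int]], w_scales: List[float],          # INT4 weights, one row and scale per output channel
    x_out: List[List[float]], w_out: List[List[float]],  # full-precision outlier columns
) -> List[List[float]]:
    """Y[i][j] = s_x[i] * s_w[j] * (Xq[i] . Wq[j]) + X_out[i] . W_out[j]."""
    y = []
    for i, xrow in enumerate(xq):
        yrow = []
        for j, wrow in enumerate(wq):
            acc = sum(a * b for a, b in zip(xrow, wrow))  # integer accumulation (INT32 on a GPU)
            base = acc * x_scales[i] * w_scales[j]        # dequantize the integer result once
            outlier = sum(a * b for a, b in zip(x_out[i], w_out[j]))  # small higher-precision product
            yrow.append(base + outlier)
        y.append(yrow)
    return y


y = hybrid_matmul(
    xq=[[1, 2]], x_scales=[0.5],
    wq=[[3, -1], [0, 2]], w_scales=[0.25, 1.0],
    x_out=[[2.0]], w_out=[[1.5], [-1.0]],
)
print(y)  # [[3.125, 0.0]]
```

The design keeps the expensive inner product entirely in integers and applies the floating-point scales only once per output element, while the outlier product stays cheap because it involves only a few columns.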

Evaluation and Results

The authors evaluate QUIK on several LLM families, including OPT, LLaMA, and Falcon, measuring perplexity on the WikiText2 dataset. The paper reports that QUIK stays within 0.5 perplexity points of the full-precision baselines across model sizes. It also shows that smaller models such as OPT-1.3B cannot maintain accuracy unless outlier features are handled separately, which underlines the importance of the hybrid quantization approach.
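Perplexity is the exponential of the average per-token negative log-likelihood, so a "perplexity point" gap is measured on that scale. A minimal sketch of the computation follows; the per-token log-probabilities below are made-up values for illustration, not results from the paper.

```python
import math
from typing import List


def perplexity(token_log_probs: List[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token), using natural logs."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Hypothetical per-token log-probabilities from a baseline and a quantized model.
baseline = [math.log(0.25)] * 8
quantized = [math.log(0.24)] * 8
gap = perplexity(quantized) - perplexity(baseline)
print(round(perplexity(baseline), 3), round(gap, 3))  # -> 4.0 0.167
```

When every token receives the same probability p, perplexity is simply 1/p. In this toy case, lowering each token's probability from 0.25 to 0.24 costs about 0.17 perplexity points.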

QUIK's speedups are largest in compute-bound settings, such as batched inference or prompt processing, and smaller in memory-bound single-token generation. The layer-wise analysis shows speedups of more than 4 times for large matrix multiplications executed in INT4, and the method also delivers significant end-to-end throughput gains on commodity GPUs such as the RTX 3090.
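Why compute-bound settings benefit most can be seen from a back-of-the-envelope arithmetic-intensity estimate (FLOPs per byte moved) for a single linear layer. The sketch assumes each operand is read or written exactly once and ignores caching; the 4096 hidden size and the byte widths are illustrative assumptions, and `arithmetic_intensity` is a hypothetical helper.

```python
def arithmetic_intensity(m: int, k: int, n: int,
                         w_bytes: float = 2.0, a_bytes: float = 2.0,
                         o_bytes: float = 2.0) -> float:
    """FLOPs per byte for Y[m x n] = X[m x k] @ W[k x n], assuming each operand moves once."""
    flops = 2 * m * k * n  # one multiply and one add per term
    bytes_moved = k * n * w_bytes + m * k * a_bytes + m * n * o_bytes
    return flops / bytes_moved


hidden = 4096  # illustrative layer size
for tokens in (1, 16, 2048):
    fp16 = arithmetic_intensity(tokens, hidden, hidden)
    int4 = arithmetic_intensity(tokens, hidden, hidden, w_bytes=0.5, a_bytes=0.5)
    print(f"{tokens:5d} tokens: FP16 {fp16:8.1f} FLOP/B, INT4 {int4:8.1f} FLOP/B")
```

With one token, the FP16 layer performs about one FLOP per byte, far below a GPU's ratio of peak arithmetic throughput to memory bandwidth, so it is memory-bound and shrinking the bytes moved (which weight-only quantization already does) is what matters. With 2048 tokens, the intensity reaches 1024 FLOPs per byte, the layer is limited by arithmetic throughput, and only faster low-precision math such as INT4 tensor-core operations can speed it up.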

Theoretical and Practical Implications

Conceptually, the work shows that quantizing activations as well as weights is needed to reduce the computational cost of LLM inference, not just its memory footprint. It bridges the gap between compressed formats, hardware-supported acceleration, and practical accuracy requirements. In practice, it makes efficient deployment on more modest hardware, including on-device settings, more feasible, which could broaden access to LLM technology by lowering the computational resources required.
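The resource savings can be estimated for a single layer's weights. This sketch assumes non-outlier input features stored as INT4 (0.5 bytes each), outlier input features kept in FP16, and one FP16 scale per output channel; the 4096 x 4096 shape and the choice of 256 outliers are illustrative assumptions, and activations and the KV cache are not counted.

```python
def fp16_weight_bytes(in_features: int, out_features: int) -> int:
    """Bytes for a linear layer's weights stored entirely in FP16 (2 bytes each)."""
    return in_features * out_features * 2


def hybrid_weight_bytes(in_features: int, out_features: int,
                        num_outliers: int, scale_bytes: int = 2) -> float:
    """Bytes when non-outlier inputs use INT4 (0.5 bytes), outlier inputs stay FP16,
    and each output channel stores one FP16 quantization scale."""
    base = (in_features - num_outliers) * out_features * 0.5
    outliers = num_outliers * out_features * 2
    scales = out_features * scale_bytes
    return base + outliers + scales


# Illustrative 4096 x 4096 layer with 256 FP16 outlier inputs (hypothetical choice).
full = fp16_weight_bytes(4096, 4096)
hybrid = hybrid_weight_bytes(4096, 4096, num_outliers=256)
print(f"FP16: {full / 2**20:.1f} MiB, hybrid: {hybrid / 2**20:.2f} MiB, ratio {full / hybrid:.2f}x")
```

Under these assumptions the layer shrinks from 32 MiB to roughly 9.5 MiB, more than a 3x reduction. The FP16 outlier columns account for most of the overhead relative to a pure 4-bit layer, which would need 8 MiB plus scales.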

Future Directions

Future research may follow various trajectories inspired by this paper:

- Broader Model Support: Extending QUIK to a wider variety of LLMs and potentially to other transformer-based generative models, for example in computer vision and speech processing.

- Integration with Other Techniques: Exploring the combination of QUIK with speculative decoding or adaptive inference strategies to further mitigate latency in real-time applications.

- Dynamic Quantization: Investigating strategies that adapt quantization during live inference, which could improve efficiency under fluctuating computational conditions.

- Sparse Quantization: Building on this work to combine sparsity patterns with low-bit quantization, reducing inference cost further while preserving accuracy.

Overall, QUIK makes a significant contribution to AI efficiency by presenting a practical method for low-bit inference with minimal accuracy trade-offs, and it opens new avenues in the quest for efficient model deployment.