SmoothQuant: Accurate and Efficient Post-Training Quantization for LLMs

Quantization, an essential technique for reducing memory usage and accelerating inference, faces significant challenges when applied to large language models (LLMs). The paper "SmoothQuant: Accurate and Efficient Post-Training Quantization for LLMs" addresses these challenges with a novel quantization approach that enables efficient 8-bit quantization of both weights and activations (W8A8) with minimal accuracy loss. This essay gives an expert overview of SmoothQuant: its methodology, experimental results, and implications for the field.

Quantization in neural networks maps high-precision values (for example, FP16) to a small set of lower-precision discrete levels (for example, INT8). This reduction is particularly beneficial for LLMs such as GPT-3 (175 billion parameters), whose memory and compute demands are enormous. Quantizing LLMs is difficult, however, because their activations contain outliers: values in a small number of channels that are far larger in magnitude than the rest. Since the quantization step size is set by the largest magnitude in the tensor, these outliers leave only a few levels for the ordinary values, which significantly degrades accuracy.
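The effect of a single outlier can be seen in a minimal sketch, assuming symmetric per-tensor INT8 quantization (scale = max|x| / 127). The helper names and toy values are hypothetical and chosen only for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: one scale for the whole tensor."""
    scale = max(abs(v) for v in values) / 127  # largest magnitude maps to +/-127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integer levels back to real values."""
    return [qi * scale for qi in q]


def max_abs_error(values):
    """Worst-case round-trip error introduced by quantize -> dequantize."""
    q, scale = quantize_int8(values)
    return max(abs(v - d) for v, d in zip(values, dequantize(q, scale)))


typical = [0.12, -0.30, 0.25, -0.08, 0.41]  # hypothetical ordinary activations
with_outlier = typical + [60.0]             # the same values plus one outlier

print(max_abs_error(typical))       # about 0.0014
print(max_abs_error(with_outlier))  # about 0.22
print(quantize_int8(with_outlier)[0])  # ordinary values collapse to [0, -1, 1, 0, 1, ...]
```

With the outlier present, the step size grows from about 0.003 to about 0.47, so the five ordinary values are squeezed onto the levels -1, 0 and 1.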

The SmoothQuant Approach

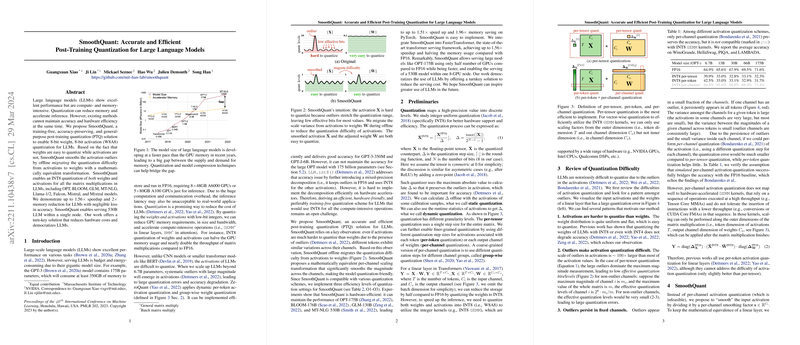

SmoothQuant is a training-free, accuracy-preserving post-training quantization (PTQ) method designed to address these issues. Its core observation is that weights are much easier to quantize than activations. SmoothQuant therefore smooths the activation outliers by migrating quantization difficulty from activations to weights through a mathematically equivalent transformation applied offline: each activation channel is divided by a per-channel smoothing factor, and the corresponding weights are multiplied by the same factor. The layer's output is unchanged, but the activations become far easier to quantize, making the whole model more quantization-friendly.
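Written out in the paper's notation, with activations X of shape T × C_i (tokens by input channels), weights W of shape C_i × C_o, and a smoothing vector s with one entry per input channel, the transformation is roughly as follows. Because X is usually produced by a preceding operation such as a LayerNorm, the division by s can be folded into that layer's parameters offline, so it should add no runtime cost.

```latex
\mathbf{Y} = \mathbf{X}\mathbf{W}
  = \bigl(\mathbf{X}\,\operatorname{diag}(\mathbf{s})^{-1}\bigr)
    \bigl(\operatorname{diag}(\mathbf{s})\,\mathbf{W}\bigr)
  = \hat{\mathbf{X}}\,\hat{\mathbf{W}}
```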

Notably, the method introduces a hyperparameter, the migration strength α, that controls how much quantization difficulty is shifted from activations to weights, balancing the two. For the majority of models examined, α = 0.5 strikes a good balance.
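A minimal plain-Python sketch of the smoothing step, assuming the paper's per-channel factor s_j = max(|X_j|)^α / max(|W_j|)^(1-α). The helper names (`matmul`, `smoothing_factors`, `smooth`) and the toy matrices are hypothetical; a real implementation would compute the maxima from calibration data and fuse the division into the preceding layer rather than rescaling X at runtime.

```python
def matmul(a, b):
    """Plain-Python matrix product: a is T x C_in, b is C_in x C_out."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def smoothing_factors(x, w, alpha=0.5):
    """Per-input-channel factors s_j = max|X_j|^alpha / max|W_j|^(1 - alpha)."""
    c_in = len(w)
    act_max = [max(abs(row[j]) for row in x) for j in range(c_in)]  # column j of X
    w_max = [max(abs(v) for v in w[j]) for j in range(c_in)]         # row j of W
    return [act_max[j] ** alpha / w_max[j] ** (1 - alpha) for j in range(c_in)]


def smooth(x, w, alpha=0.5):
    """Return X diag(s)^-1 and diag(s) W, plus the factors s."""
    s = smoothing_factors(x, w, alpha)
    x_hat = [[v / s[j] for j, v in enumerate(row)] for row in x]  # scale activations down
    w_hat = [[v * s[j] for v in w[j]] for j in range(len(w))]     # scale weights up
    return x_hat, w_hat, s


# Hypothetical toy data: 2 tokens x 3 input channels; channel 1 carries an outlier.
x = [[1.0, 40.0, -0.5],
     [-2.0, 30.0, 1.0]]
w = [[1.0, 0.5],
     [0.25, -1.0],
     [0.5, 1.0]]

x_hat, w_hat, s = smooth(x, w, alpha=0.5)
print(max(abs(v) for row in x for v in row))      # 40.0
print(max(abs(v) for row in x_hat for v in row))  # about 6.32, i.e. sqrt(40)
```

The product `matmul(x_hat, w_hat)` equals `matmul(x, w)` up to floating-point rounding. With α = 0.5, each channel's activation maximum and weight maximum both become sqrt(max|X_j| · max|W_j|), which is the "even split" of difficulty the text describes; a larger α pushes more of the range into the weights.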

Experimental Results

The efficacy of SmoothQuant is demonstrated across several large-scale models, including but not limited to OPT-175B, BLOOM-176B, and GLM-130B. Key results from the experiments include:

- For the OPT-175B model, SmoothQuant achieves up to 1.56× speedup and nearly halves memory usage compared with the FP16 baseline, with negligible accuracy loss across benchmarks such as LAMBADA and HellaSwag.

- Tests on other large models, such as BLOOM-176B and GLM-130B, underscore SmoothQuant's ability to maintain floating-point accuracy post-quantization.

- For instruction-tuned LLMs such as OPT-IML-30B and for newer model families including LLaMA, Llama-2, Falcon, Mistral, and Mixtral, SmoothQuant likewise preserves accuracy while delivering efficiency gains.

Implications and Future Directions

The implications of SmoothQuant are both practical and theoretical. Practically, the method significantly reduces the hardware and energy costs of serving LLMs, making them accessible to broader applications and smaller organizations. The memory savings matter most for inference, where the entire model must be held in memory, and they make it possible to deploy even larger models on limited hardware: the paper reports serving a 530-billion-parameter model within a single node.
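Back-of-the-envelope arithmetic shows why halving bytes per weight matters at this scale. The sketch counts weight storage only (ignoring the KV cache, activations, and any layers kept in higher precision, which is why real savings are "nearly" rather than exactly 2×) and assumes, purely for illustration, a node with eight 80 GB GPUs.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption for illustration: one node with eight 80 GB GPUs.
NODE_CAPACITY_GB = 8 * 80

for name, params in [("OPT-175B", 175e9), ("530B model", 530e9)]:
    fp16 = weight_memory_gb(params, 2)  # FP16 stores 2 bytes per weight
    int8 = weight_memory_gb(params, 1)  # INT8 stores 1 byte per weight
    print(f"{name}: FP16 {fp16:.0f} GB, INT8 {int8:.0f} GB; node capacity {NODE_CAPACITY_GB} GB")
```

This prints 350 GB versus 175 GB for OPT-175B and 1060 GB versus 530 GB for a 530B model; under the stated node assumption, only the INT8 weights of the 530B model fit.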

Theoretically, the paper opens avenues for exploring other quantization strategies whose trade-offs are balanced by hyperparameters similar to the migration strength α. The success of SmoothQuant also hints at gains from combining its principles with more advanced quantization techniques, such as automated hyperparameter tuning and dynamic quantization schemes.
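One hypothetical form of automated tuning is a grid search over α: smooth a small calibration batch with each candidate value, fake-quantize both operands to INT8, and keep the α with the smallest output error. The grid, the max-error metric, the per-tensor quantizer, the calibration data, and all helper names are assumptions for illustration, not a procedure from the paper.

```python
def matmul(a, b):
    """Plain-Python matrix product: a is T x C_in, b is C_in x C_out."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def fake_quant_per_tensor(m):
    """Symmetric INT8 fake quantization with a single scale for the whole matrix."""
    scale = max(abs(v) for row in m for v in row) / 127
    return [[round(v / scale) * scale for v in row] for row in m]


def output_error(x, w, alpha):
    """Max |XW - Q(X_hat) Q(W_hat)| after smoothing with migration strength alpha."""
    c_in = len(w)
    act_max = [max(abs(row[j]) for row in x) for j in range(c_in)]
    w_max = [max(abs(v) for v in w[j]) for j in range(c_in)]
    s = [act_max[j] ** alpha / w_max[j] ** (1 - alpha) for j in range(c_in)]
    x_q = fake_quant_per_tensor([[v / s[j] for j, v in enumerate(row)] for row in x])
    w_q = fake_quant_per_tensor([[v * s[j] for v in w[j]] for j in range(c_in)])
    y_ref, y_q = matmul(x, w), matmul(x_q, w_q)
    return max(abs(a - b) for ra, rb in zip(y_ref, y_q) for a, b in zip(ra, rb))


def search_alpha(x, w, grid=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """Hypothetical tuner: pick the grid value with the smallest output error."""
    return min(grid, key=lambda a: output_error(x, w, a))


# Hypothetical calibration batch: 3 tokens x 3 channels, outlier in channel 1.
x = [[1.0, 40.0, -0.5],
     [-2.0, 30.0, 1.0],
     [0.3, -35.0, 0.7]]
w = [[1.0, 0.5],
     [0.25, -1.0],
     [0.5, 1.0]]

best = search_alpha(x, w)
print(best, output_error(x, w, best))
```

In practice the error would be measured on many real calibration batches and on downstream metrics rather than a single toy layer.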

SmoothQuant also highlights the broader challenge of activation quantization in deep neural networks. Future research may investigate per-channel and other finer-grained quantization schemes, or explore novel neural architectures designed to be quantization-friendly from the outset.
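Why finer granularity is attractive can be sketched by comparing one per-tensor scale with one scale per input channel, assuming symmetric INT8 fake quantization and hypothetical toy activations. Per-channel scales confine an outlier's damage to its own channel. The catch is that input-channel scales sit on the inner (reduction) dimension of the matrix multiply, so they cannot simply be factored out of a standard INT8 GEMM; that hardware constraint is what SmoothQuant works around instead.

```python
def fake_quant(values, scale):
    """Round each value to the nearest multiple of scale (symmetric INT8 grid)."""
    return [round(v / scale) * scale for v in values]


def per_tensor_error(x):
    """One scale shared by every activation in the tensor."""
    scale = max(abs(v) for row in x for v in row) / 127
    return max(abs(v - q) for row in x for v, q in zip(row, fake_quant(row, scale)))


def per_channel_error(x):
    """One scale per input channel (column), so an outlier channel only affects itself."""
    err = 0.0
    for col in zip(*x):  # iterate over channels
        scale = max(abs(v) for v in col) / 127
        err = max(err, max(abs(v - q) for v, q in zip(col, fake_quant(col, scale))))
    return err


x = [[1.0, 40.0, -0.5],
     [-2.0, 30.0, 1.0]]  # hypothetical activations; channel 1 is the outlier channel
print(per_tensor_error(x))   # about 0.13
print(per_channel_error(x))  # about 0.079
```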

Conclusion

SmoothQuant represents a significant advance in the efficient and accurate quantization of LLMs. By smoothing activation outliers and balancing quantization difficulty between weights and activations, it maintains high performance while achieving substantial reductions in memory and computational requirements. This makes it a valuable contribution to the field of machine learning, particularly in the practical deployment of very large models. Future work could explore its integration with other optimization techniques and its application to new neural architectures.