Understanding MemGPT

The emergence of large language models (LLMs) has marked a significant shift in the AI landscape, particularly in natural language processing. However, current LLMs share a notable limitation: fixed-length context windows, which restrict their ability to process long documents or maintain a continuous thread across long conversations. To address this limitation, researchers have proposed a technique known as virtual context management.

Virtual Context Management in LLMs

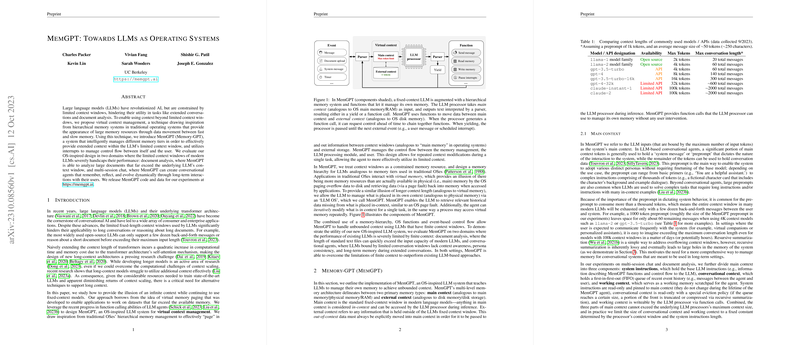

Virtual context management draws inspiration from the hierarchical memory systems of traditional operating systems. An operating system moves data between a small, fast memory tier (such as RAM) and a large, slow one (such as disk), giving programs the illusion of more memory than physically fits in RAM. MemGPT applies this concept to LLMs: by managing separate memory tiers, it lets a model work with more context than its window can hold. The approach is especially promising for document analysis and multi-session chat, two domains where LLMs have traditionally struggled because of limited context windows.
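To make the operating-system analogy concrete, here is a toy model of two memory tiers. It is not MemGPT code: the class name `TwoTierMemory`, its methods, and the least-recently-used (LRU) eviction policy are illustrative assumptions. When the fast tier is full, the least recently used entry moves down to the slow tier; reading an entry that is not in the fast tier counts as a "page fault" and promotes it back.

```python
from collections import OrderedDict


class TwoTierMemory:
    """Toy model (illustrative assumption, not MemGPT code): a bounded fast
    tier, like RAM, backed by an unbounded slow tier, like disk."""

    def __init__(self, fast_capacity: int) -> None:
        if fast_capacity < 1:
            raise ValueError("fast_capacity must be at least 1")
        self.fast_capacity = fast_capacity
        self.fast: OrderedDict[str, str] = OrderedDict()  # least -> most recently used
        self.slow: dict[str, str] = {}
        self.page_faults = 0

    def write(self, key: str, value: str) -> None:
        # New or updated data always lands in the fast tier.
        self.slow.pop(key, None)
        self.fast[key] = value
        self.fast.move_to_end(key)
        self._evict_if_needed()

    def read(self, key: str) -> str:
        if key in self.fast:
            self.fast.move_to_end(key)  # mark as most recently used
            return self.fast[key]
        # Page fault: fetch from the slow tier and promote into the fast tier.
        self.page_faults += 1
        value = self.slow.pop(key)  # raises KeyError for unknown keys
        self.fast[key] = value
        self._evict_if_needed()
        return value

    def _evict_if_needed(self) -> None:
        # Push least recently used entries down until the fast tier fits.
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)
            self.slow[old_key] = old_value


if __name__ == "__main__":
    mem = TwoTierMemory(fast_capacity=2)
    mem.write("a", "alpha")
    mem.write("b", "beta")
    mem.write("c", "gamma")                # over capacity: "a" moves to the slow tier
    print(list(mem.fast), list(mem.slow))  # ['b', 'c'] ['a']
    print(mem.read("a"), mem.page_faults)  # alpha 1  (and "b" is evicted in turn)
```

The program never has to know which tier holds a value; `read` hides the movement, which is the property virtual memory provides.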

MemGPT: Expanding LLM Horizons

MemGPT organizes memory into tiers, much as an operating system does. Main context, analogous to RAM, is the fixed-size window that the LLM processes directly. External context, analogous to disk storage, holds information outside that window. Through function calls, the LLM manages its own memory: it brings relevant data into main context when needed and pushes less relevant data out to external storage.
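The sketch below shows how main context, external context, and model-issued function calls might fit together. It is a hypothetical illustration, not MemGPT's implementation or API: `VirtualContext`, `search_external`, `save_to_external`, the JSON call format, and the one-token-per-word estimate are all assumptions made for this example. Messages that overflow the token budget are evicted from main context into external context, and a function call from the model can search external context and page the matches back into the window.

```python
import json
from collections import deque


def count_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word, standing in for a real tokenizer.
    return len(text.split())


class VirtualContext:
    """Hypothetical sketch (not MemGPT's API): a bounded main context plus an
    unbounded external context, with functions the model can call."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.main: deque[str] = deque()  # in-window messages, oldest first
        self.external: list[str] = []    # out-of-window storage

    def main_tokens(self) -> int:
        return sum(count_tokens(m) for m in self.main)

    def append(self, message: str) -> None:
        """Add a message, evicting the oldest ones to external context on overflow."""
        self.main.append(message)
        # The newest message is never evicted, even if it alone exceeds the budget.
        while self.main_tokens() > self.max_tokens and len(self.main) > 1:
            self.external.append(self.main.popleft())

    # Functions exposed to the model (names are illustrative assumptions).
    def search_external(self, query: str, limit: int = 3) -> list[str]:
        """Return up to `limit` stored items containing `query` (case-insensitive)."""
        q = query.lower()
        return [m for m in self.external if q in m.lower()][:limit]

    def save_to_external(self, text: str) -> str:
        """Write a note straight to external context."""
        self.external.append(text)
        return "saved"

    def dispatch(self, call_json: str) -> str:
        """Run a function call the model emitted as JSON, e.g.
        {"name": "search_external", "arguments": {"query": "..."}}, and page the
        result into main context so the model sees it on its next step."""
        call = json.loads(call_json)
        functions = {
            "search_external": self.search_external,
            "save_to_external": self.save_to_external,
        }
        result = functions[call["name"]](**call.get("arguments", {}))
        encoded = json.dumps(result)
        self.append(f"[function result] {encoded}")
        return encoded


if __name__ == "__main__":
    ctx = VirtualContext(max_tokens=8)
    ctx.append("user: my dog is named Biscuit")  # 6 words
    ctx.append("user: what is the weather")      # 5 more: the first message is evicted
    # Later the model needs the dog's name and emits a function call:
    print(ctx.dispatch('{"name": "search_external", "arguments": {"query": "biscuit"}}'))
    # ["user: my dog is named Biscuit"]
```

The main design difference from OS paging is who decides what moves: eviction on overflow happens automatically here, but retrieval happens only when the model chooses to call a function.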

One key advantage of MemGPT is that it maintains coherence over long interactions, such as extended conversations, without losing track of earlier exchanges that have fallen out of the context window. Another is its ability to analyze documents larger than the window by loading only the relevant sections into context, much as an operating system keeps in RAM only the pages a program currently needs.
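Document analysis can be pictured the same way: split the document into chunks, rank the chunks by relevance to a question, and load only as many as fit into the context budget. This is a minimal sketch under stated assumptions, not how MemGPT retrieves text: the fixed-size word chunks, the keyword-overlap `score`, and the one-token-per-word estimate are simplifications chosen for illustration, and a production system might instead use a real tokenizer and embedding-based search.

```python
import re


def chunk_document(text: str, words_per_chunk: int) -> list[str]:
    """Split a document into fixed-size word chunks (a simplifying assumption)."""
    if words_per_chunk < 1:
        raise ValueError("words_per_chunk must be at least 1")
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk])
            for i in range(0, len(words), words_per_chunk)]


def score(chunk: str, query: str) -> int:
    """Assumed relevance measure: number of distinct query terms present in the chunk."""
    terms = set(re.findall(r"\w+", query.lower()))
    chunk_terms = set(re.findall(r"\w+", chunk.lower()))
    return len(terms & chunk_terms)


def select_for_context(chunks: list[str], query: str, token_budget: int) -> list[str]:
    """Greedily pick the highest-scoring chunks that fit the budget, then return
    them in document order so the excerpt reads naturally."""
    scores = [score(c, query) for c in chunks]
    # sorted() is stable, so ties keep their original document order.
    ranked = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)
    chosen: list[int] = []
    used = 0
    for i in ranked:
        if scores[i] == 0:
            break  # every remaining chunk is irrelevant
        cost = len(chunks[i].split())  # crude estimate: one token per word
        if used + cost <= token_budget:  # chunks that don't fit are skipped
            chosen.append(i)
            used += cost
    return [chunks[i] for i in sorted(chosen)]


if __name__ == "__main__":
    doc = ("Rent is due monthly. Pets need a deposit. "
           "Parking costs extra fees. The deposit is refundable.")
    chunks = chunk_document(doc, words_per_chunk=4)
    print(select_for_context(chunks, "pets deposit", token_budget=8))
    # ['Pets need a deposit.', 'The deposit is refundable.']
```

A chunk that does not fit is skipped rather than ending the loop, so smaller, lower-ranked chunks can still use the remaining budget.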

Evolving LLMs with OS-Inspired Techniques

Such a system matters most for tasks that require attention to extensive detail. Document analysis often involves cross-referencing large amounts of text, and conversational agents must recall details from earlier in a conversation to stay coherent and keep users engaged. In both scenarios, existing LLM approaches have been severely constrained by finite context windows.

MemGPT's virtual context management, rooted in operating system principles, offers a compelling advance for LLMs. It gives them the appearance of a longer memory and also lets them use that extended memory efficiently during tasks. As a result, they score better on consistency and engagement metrics in dialogue and handle the complexities of large documents more effectively. MemGPT's approach shows that time-tested computing principles, carried over into modern AI systems, can substantially improve what those systems can do.