Guiding LLM Math Reasoning with Planning Tokens

The paper "Guiding LLM Math Reasoning with Planning Tokens" introduces planning tokens as a way to improve the mathematical reasoning of LLMs. The authors address a notable limitation of existing LLMs: although these models handle individual reasoning steps well, they are often inconsistent across an entire reasoning chain. This inconsistency makes them unreliable on complex, multi-step reasoning tasks.

Methodology and Approach

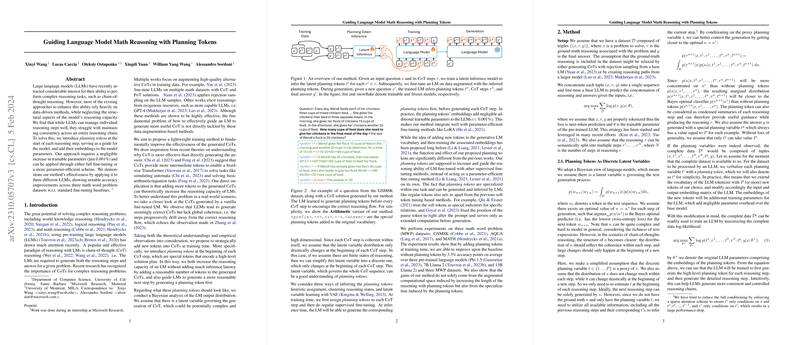

The proposed solution places planning tokens at the beginning of each reasoning step; the embeddings of these tokens are added to the model's trainable parameters. The tokens act as guides that encode a high-level plan for the step that follows, helping the model stay coherent across multiple reasoning steps. The method increases the number of trainable parameters by only 0.001%, which makes it a computationally cheap enhancement.
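To make the mechanics concrete, the following is a minimal sketch of how planning tokens might be prepended to the steps of a chain of thought, together with a rough estimate of the extra embedding parameters. The token naming scheme (`<plan_mul_0>`), the helper names, and the numbers in the overhead estimate (16 new tokens, hidden size 4096, 7 billion parameters) are illustrative assumptions, not details taken from the paper.

```python
from typing import List


def planning_token(plan_type: str, index: int) -> str:
    """Build one planning-token string (hypothetical naming scheme)."""
    return f"<plan_{plan_type}_{index}>"


def add_planning_tokens(
    steps: List[str], plan_types: List[str], tokens_per_step: int = 1
) -> str:
    """Prefix every reasoning step with the planning tokens of its plan type."""
    if len(steps) != len(plan_types):
        raise ValueError("need exactly one plan type per reasoning step")
    lines = []
    for step, plan_type in zip(steps, plan_types):
        prefix = "".join(
            planning_token(plan_type, i) for i in range(tokens_per_step)
        )
        lines.append(f"{prefix} {step}")
    return "\n".join(lines)


def embedding_overhead(
    num_new_tokens: int, hidden_size: int, total_params: int
) -> float:
    """Fraction of extra parameters, assuming one embedding row per new token."""
    return num_new_tokens * hidden_size / total_params


steps = [
    "Tom has 3 boxes with 4 apples each: 3 * 4 = 12.",
    "He eats 2 apples: 12 - 2 = 10.",
]
augmented = add_planning_tokens(steps, ["mul", "sub"])
print(augmented)

# Assumed sizes for illustration only; the paper's exact setup may differ.
overhead = embedding_overhead(16, 4096, 7_000_000_000)
print(f"extra parameters: {overhead:.6%}")
```

Under these assumed sizes the overhead comes out at roughly a thousandth of a percent, which is the order of magnitude the summary reports. In a real fine-tuning setup the new token strings would also have to be registered in the tokenizer's vocabulary and the embedding matrix resized to match.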

The authors use both conventional fine-tuning and parameter-efficient schemes to incorporate these planning tokens into various LLMs. The idea is grounded in recent theoretical work suggesting that adding intermediate tokens can increase the reasoning capacity of transformers. Lengthening the chain of thought (CoT) with these extra tokens gives the model more capacity to solve complex reasoning problems such as those found in mathematics.

Experiments and Results

The approach is evaluated on three math word problem datasets: GSM8K, AQUA, and MATH. Three LLMs are tested: Phi 1.5, Llama2 (7B), and Llama2 (13B). Adding planning tokens improves accuracy across all datasets and models compared with standard fine-tuning. On average, accuracy rises by 3.3 percentage points.

The results demonstrate that planning tokens enhance the ability of LLMs to solve math problems, with the greatest improvements observed on longer and more complex reasoning chains. Among the methods for inferring which planning token to assign to each step, the soft Q-VAE approach consistently outperformed simpler heuristic approaches, showing the advantage of learned planning specialization.
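For intuition about what a basic heuristic for choosing a planning token could look like, the sketch below labels each reasoning step by the arithmetic operators it contains. This rule, the function name `heuristic_plan_type`, and the label names are assumptions made for illustration; they are not presented here as the authors' heuristic, and the learned soft Q-VAE approach is not reproduced.

```python
import re

# Hypothetical mapping from operator symbols to plan-type labels.
OPERATOR_NAMES = {"+": "add", "-": "sub", "*": "mul", "/": "div"}

# An operator counts only when it sits directly between two digits
# (optionally separated by whitespace), e.g. "3 * 4" or "12-2".
OPERATOR_PATTERN = re.compile(r"(?<=\d)\s*([+\-*/])\s*(?=\d)")


def heuristic_plan_type(step: str) -> str:
    """Return a plan-type label built from the operators found in a step."""
    operators = OPERATOR_PATTERN.findall(step)
    if not operators:
        return "none"
    names = sorted({OPERATOR_NAMES[op] for op in operators})
    return "_".join(names)


steps = [
    "Tom has 3 boxes with 4 apples each: 3 * 4 = 12.",
    "He eats 2 apples: 12 - 2 = 10.",
    "So Tom has 10 apples left.",
]
for step in steps:
    print(heuristic_plan_type(step), "|", step)
```

A rule like this is cheap but coarse: it cannot tell apart two steps that use the same operator for different purposes, which is the kind of gap a learned assignment is meant to close.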

Implications and Future Work

The findings have practical implications for developing LLMs that are more robust and reliable in handling structured reasoning tasks, beyond simple information synthesis. The introduction of planning tokens could extend beyond mathematical reasoning tasks, potentially enhancing LLM performance in a variety of domains requiring coherent multi-step logic.

Future research may explore variants of planning tokens across different problem types or further develop the latent inference approach to obtain more expressive and accurate planning tokens. Additionally, exploring interpretability in the use of planning tokens might provide insights into the internal reasoning strategies of LLMs, facilitating a better understanding of how these models can mimic human-like reasoning processes.

In summary, integrating planning tokens into LLMs is a simple yet effective way to improve their reasoning, with minimal computational overhead. The approach points toward models that reason more coherently across steps, opening the door to broader applications of AI in logic-intensive domains.