Overview of Humanoid Agents: Platform for Simulating Human-like Generative Agents

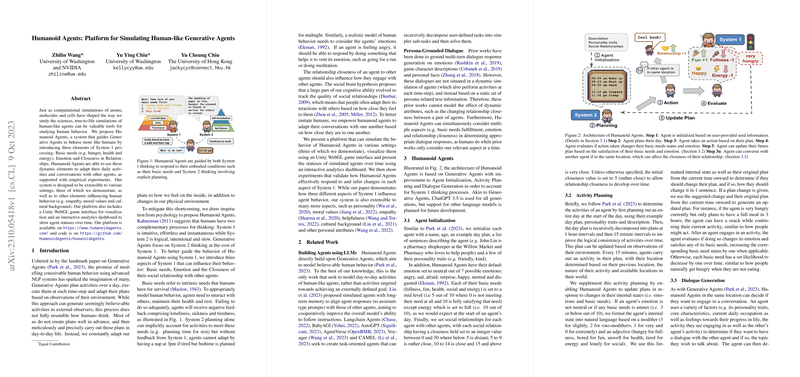

Humanoid Agents is a platform for simulating human-like generative agents. It integrates three elements of System 1 processing (fast, intuitive, largely automatic thinking): basic needs, emotion, and relationship closeness. The work builds on earlier research, notably Generative Agents, and addresses its limitations by simulating more realistically how people adapt their behavior to their internal state.

Core Contributions

The paper adds three System 1 components to generative agents so that their behavior more closely resembles that of humans:

- Basic Needs: Fundamental human needs such as hunger, health, and energy become part of each agent's internal state. Agents monitor these needs and change their activities to satisfy whichever ones are running low.

- Emotion: Agents track how they currently feel and adjust their behavior accordingly, which is essential for modeling how people naturally adapt.

- Relationship Closeness: To capture the nuances of human interaction, agents adapt their conversational strategies to how close they are to the agent they are talking with.
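The following Python sketch illustrates how the three System 1 attributes could feed into an agent's next action. It is not the platform's implementation: the 0 to 10 need scale, the hourly decline rates, the threshold of 3, the activity table, the closeness cut-offs, and names such as `AgentState`, `NEED_ACTIVITIES`, `planning_context`, and `conversation_style` are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical activity that satisfies each basic need.
NEED_ACTIVITIES = {
    "fullness": "eat a meal",
    "social": "chat with a friend",
    "fun": "play a game",
    "health": "see a doctor",
    "energy": "take a nap",
}
# Assumed hourly decline per need on a 0 (depleted) to 10 (satisfied) scale.
DECLINE_PER_HOUR = {"fullness": 2, "social": 1, "fun": 1, "health": 1, "energy": 1}
MAX_LEVEL = 10
THRESHOLD = 3  # assumed level below which a need overrides the agent's plan


@dataclass
class AgentState:
    needs: dict[str, int] = field(
        default_factory=lambda: {n: MAX_LEVEL for n in NEED_ACTIVITIES}
    )
    emotion: str = "neutral"
    closeness: dict[str, int] = field(default_factory=dict)  # other agent -> 0..10

    def tick(self, hours: int = 1) -> None:
        """Let each basic need decline over time, never dropping below zero."""
        for need in self.needs:
            self.needs[need] = max(0, self.needs[need] - DECLINE_PER_HOUR[need] * hours)

    def low_needs(self) -> list[str]:
        """Needs currently below the threshold, most depleted first."""
        low = [n for n, v in self.needs.items() if v < THRESHOLD]
        return sorted(low, key=lambda n: self.needs[n])

    def next_activity(self, planned: str) -> str:
        """Keep the planned activity unless a basic need has fallen too low."""
        low = self.low_needs()
        return NEED_ACTIVITIES[low[0]] if low else planned

    def planning_context(self) -> str:
        """Summarize System 1 state as text an LLM planning prompt could include."""
        low = ", ".join(self.low_needs()) or "none"
        return f"Current emotion: {self.emotion}. Needs running low: {low}."

    def conversation_style(self, other: str) -> str:
        """Choose a conversational register from relationship closeness."""
        score = self.closeness.get(other, 0)
        if score >= 7:
            return "warm and personal"
        if score >= 3:
            return "friendly"
        return "polite and formal"


if __name__ == "__main__":
    agent = AgentState(closeness={"Maria": 8})
    agent.tick(hours=4)  # fullness drops to 2; every other need drops to 6
    print(agent.next_activity("write report"))  # eat a meal
    print(agent.planning_context())  # Current emotion: neutral. Needs running low: fullness.
    print(agent.conversation_style("Maria"))  # warm and personal
```

The fixed lookup table keeps the sketch deterministic; an LLM-driven system would more plausibly pass text like the `planning_context` output into its prompt and let the model decide how to respond to a depleted need or a strong emotion.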

Platform Implementation

The Humanoid Agents platform can be extended to various settings and includes a Unity WebGL interface for visualization and an interactive analytics dashboard. Together, these let users run simulations, watch them unfold, and analyze agent behavior over time.

Experimental Validation

Experiments show that agents using this system can infer and respond to changes in their System 1 attributes. When the system's predictions were compared with human annotations, agreement was substantial, particularly for emotions and social dynamics. Some attributes, such as the fun and social needs, proved harder to model accurately and may need further refinement.
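One way to quantify agreement between system predictions and human annotations is to compare, item by item, the label the system predicts (for example, an agent's emotion at a given step) with the label a human annotator assigned. The sketch below computes raw accuracy and Cohen's kappa, which corrects for agreement expected by chance. The paper's exact evaluation protocol and metrics may differ, and the function name `agreement` is hypothetical.

```python
from collections import Counter


def agreement(predicted: list[str], annotated: list[str]) -> tuple[float, float]:
    """Return (accuracy, Cohen's kappa) for two equal-length label sequences."""
    if len(predicted) != len(annotated) or not predicted:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(predicted)
    observed = sum(p == a for p, a in zip(predicted, annotated)) / n
    # Chance agreement: probability that both sources pick the same label
    # if each drew labels independently from its own label frequencies.
    pred_counts = Counter(predicted)
    ann_counts = Counter(annotated)
    expected = sum(pred_counts[lab] * ann_counts[lab] for lab in pred_counts) / (n * n)
    if expected == 1:
        # Both sources used one identical label throughout; kappa is undefined,
        # so this sketch treats it as perfect agreement by convention.
        return observed, 1.0
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa
```

Kappa is worth reporting alongside accuracy because accuracy alone can look high when a single label (for example, a neutral emotion) dominates the annotations.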

Implications and Future Directions

While the Humanoid Agents platform advances the fidelity of human-like simulation, it has limitations, including difficulty with multiparty dialogue and with synchronizing activities across agents. Future iterations aim to model individual differences in how quickly basic needs decline and to extend conversations from dyadic (two-party) to multiparty interactions.
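Individual differences in need decline could be modeled by giving each agent its own hourly rate per need instead of one shared rate. This sketch draws each agent's rates within plus or minus 50% of a set of defaults using a seeded random generator, so runs are reproducible. The default rates, the spread, the 0 to 10 scale, linear decline, and the names `DEFAULT_RATES`, `sample_decline_rates`, and `level_after` are all hypothetical.

```python
import random

# Assumed population-level hourly decline rates on a 0-10 need scale.
DEFAULT_RATES = {"fullness": 1.0, "social": 0.5, "fun": 0.5, "health": 0.1, "energy": 0.8}


def sample_decline_rates(seed: int, spread: float = 0.5) -> dict[str, float]:
    """Draw one agent's per-need rates within +/- `spread` of the defaults."""
    rng = random.Random(seed)  # per-agent seed keeps simulations reproducible
    return {
        need: rate * rng.uniform(1 - spread, 1 + spread)
        for need, rate in DEFAULT_RATES.items()
    }


def level_after(start: float, rate: float, hours: float) -> float:
    """Need level after `hours` of linear decline, clamped at zero."""
    return max(0.0, start - rate * hours)


if __name__ == "__main__":
    for seed in (1, 2):
        rates = sample_decline_rates(seed)
        fullness = level_after(10.0, rates["fullness"], 6)
        print(f"agent {seed}: fullness after 6 hours = {fullness:.2f}")
```

With different seeds, two agents that start equally full become hungry at different times, which is the kind of variation a single shared rate cannot express.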

This research is relevant to any field that relies on simulating human behavior, including computational social science and AI development. Platforms like Humanoid Agents offer a foundation for studying complex human-agent and agent-agent interactions in simulated environments.

Overall, Humanoid Agents marks a notable step toward closing the gap between simplified agent models and the rich, dynamic complexity of human behavior. Future extensions that account for additional psychological and cultural factors could further increase the system's utility and realism.