Introduction

ITRG (Iterative Retrieval-Generation Synergy) is a recently proposed framework that marks a significant advance in augmenting large language models (LLMs) for knowledge-intensive tasks. Traditional methods either retrieve documents from an external knowledge base or generate documents with the LLM itself. ITRG instead combines retrieval and generation in a collaborative loop. This iterative interaction improves how task-specific knowledge is used and helps the model find the correct reasoning path, which is essential for multi-hop question answering.

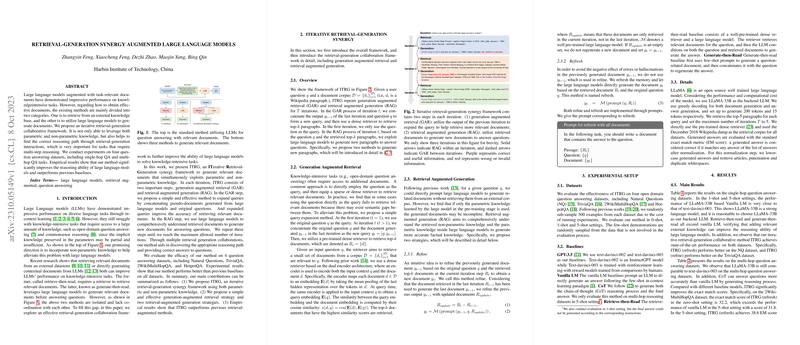

Iterative Retrieval-Generation Synergy

The framework alternates between two core components: Generation Augmented Retrieval (GAR) and Retrieval Augmented Generation (RAG). GAR expands the query by combining the original question with a pseudo-document that the model itself generated. This expansion makes document retrieval noticeably more accurate. RAG then generates a new document conditioned on both the original question and the documents retrieved in the GAR step, and that new document serves as the pseudo-document for the next GAR step. Repeating the two steps refines the generated answer over successive iterations, which lets the LLM capture complex, multi-step reasoning in its responses.
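The alternation between GAR and RAG can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Retriever` and `Generator` interfaces, the prompt template, the fixed iteration count, and the toy word-overlap retriever and echoing "LLM" are all hypothetical stand-ins chosen so the example runs without external services.

```python
import re
from typing import Callable, List

# Hypothetical interfaces: a retriever maps (query, k) to the top-k passages,
# and a generator maps a prompt to text (a stand-in for a real LLM call).
Retriever = Callable[[str, int], List[str]]
Generator = Callable[[str], str]


def itrg_loop(question: str, retrieve: Retriever, generate: Generator,
              iterations: int = 2, k: int = 2) -> str:
    """Alternate GAR and RAG steps; return the last generated document."""
    generated_doc = ""  # no pseudo-document exists before the first iteration
    for _ in range(iterations):
        # GAR: expand the query with the model's most recent pseudo-document.
        query = f"{question} {generated_doc}".strip()
        passages = retrieve(query, k)
        # RAG: generate a new document from the question and retrieved passages.
        context = "\n".join(passages)
        prompt = f"Context:\n{context}\nQuestion: {question}\nAnswer:"
        generated_doc = generate(prompt)
    return generated_doc


# --- Toy components (hypothetical, for illustration only) ---
CORPUS = [
    "Paris is the capital of France.",
    "The Eiffel Tower is located in Paris.",
    "Berlin is the capital of Germany.",
]


def _tokens(text: str) -> set:
    """Lowercase word set used for overlap scoring."""
    return set(re.findall(r"[a-z]+", text.lower()))


def toy_retrieve(query: str, k: int) -> List[str]:
    """Rank passages by word overlap with the query (stand-in for a real retriever)."""
    q = _tokens(query)
    # sorted() is stable, so ties keep corpus order even with reverse=True.
    ranked = sorted(CORPUS, key=lambda p: len(q & _tokens(p)), reverse=True)
    return ranked[:k]


def toy_generate(prompt: str) -> str:
    """Stand-in for an LLM: echo the first retrieved passage in the prompt."""
    return prompt.split("\n")[1]


if __name__ == "__main__":
    print(itrg_loop("Where is the Eiffel Tower located?", toy_retrieve, toy_generate))
```

In a real system the toy functions would be replaced by an actual retriever and an LLM call, and the prompt wording and stopping rule would follow the paper rather than this sketch. The key design point the loop shows is that each iteration's query depends on the previous iteration's generated document.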

Experimental Results

ITRG was evaluated on four prominent datasets spanning single-hop and multi-hop question answering: Natural Questions, TriviaQA, 2WikiMultiHopQA, and HotpotQA. It outperforms existing baselines on these datasets, and the improvement is most pronounced when the retrieval-generation loop runs for multiple iterations, which underscores the value of the iterative design. The framework also outperforms competing models in the 0-shot, 1-shot, and 5-shot settings. ITRG's 'refresh' strategy scores especially well in the zero-shot setting, suggesting that the framework can use an LLM to synthesize accurate knowledge without any additional fine-tuning.
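The shot settings differ only in how many worked examples precede the test question in the prompt. The sketch below shows one way such a k-shot prompt might be assembled; the template, the `build_prompt` helper, and the demonstration pool are hypothetical and are not the prompts used in the paper.

```python
from typing import List, Tuple

# Hypothetical demonstration format: a (question, answer) pair.
Demo = Tuple[str, str]


def build_prompt(question: str, context: List[str], demos: List[Demo], shots: int) -> str:
    """Build a k-shot prompt: `shots` worked examples, then the test question."""
    if shots < 0 or shots > len(demos):
        raise ValueError("shots must be between 0 and len(demos)")
    # Each demonstration is a solved question-answer pair.
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in demos[:shots]]
    # The test question comes last, with retrieved passages as context.
    passages = "\n".join(context)
    parts.append(f"Context:\n{passages}\nQuestion: {question}\nAnswer:")
    return "\n\n".join(parts)
```

With `shots=0` the prompt contains only the context and the test question; with `shots=5` it is preceded by five solved examples, so no model weights change between settings.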

Conclusion

The ITRG framework is a robust method that substantially improves the reasoning capabilities of LLMs on knowledge-intensive tasks. By combining parametric knowledge (stored in the model's weights) with non-parametric knowledge (retrieved documents), ITRG consistently outperforms previous retrieval-augmented methods. Its iterative retrieval-generation loop addresses the problem of finding and refining the information needed for complex question answering. The work illustrates the promise of iterative, integrated approaches for improving LLMs on tasks that demand deep, multi-faceted knowledge.