How Much Training Data is Memorized in Overparameterized Autoencoders? An Inverse Problem Perspective on Memorization Evaluation (2310.02897v2)

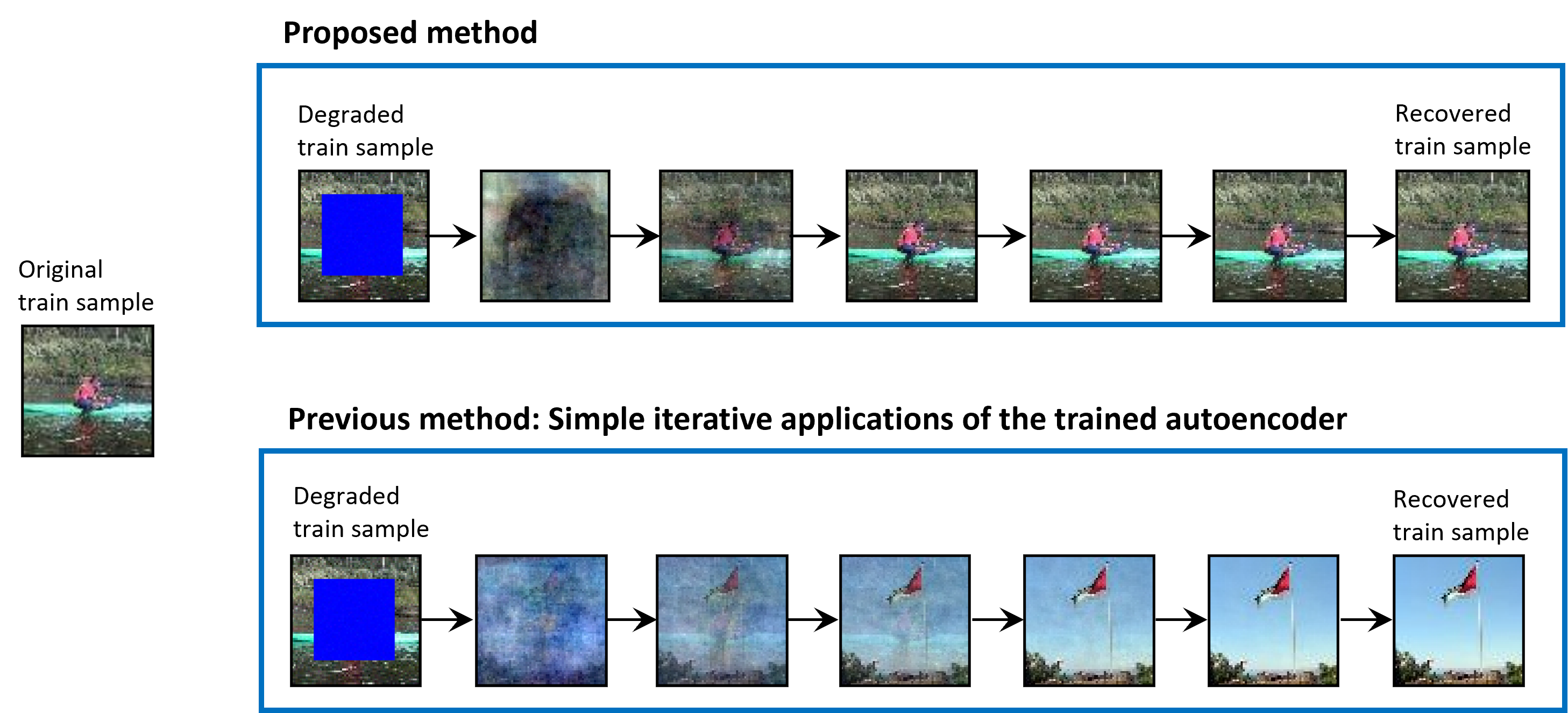

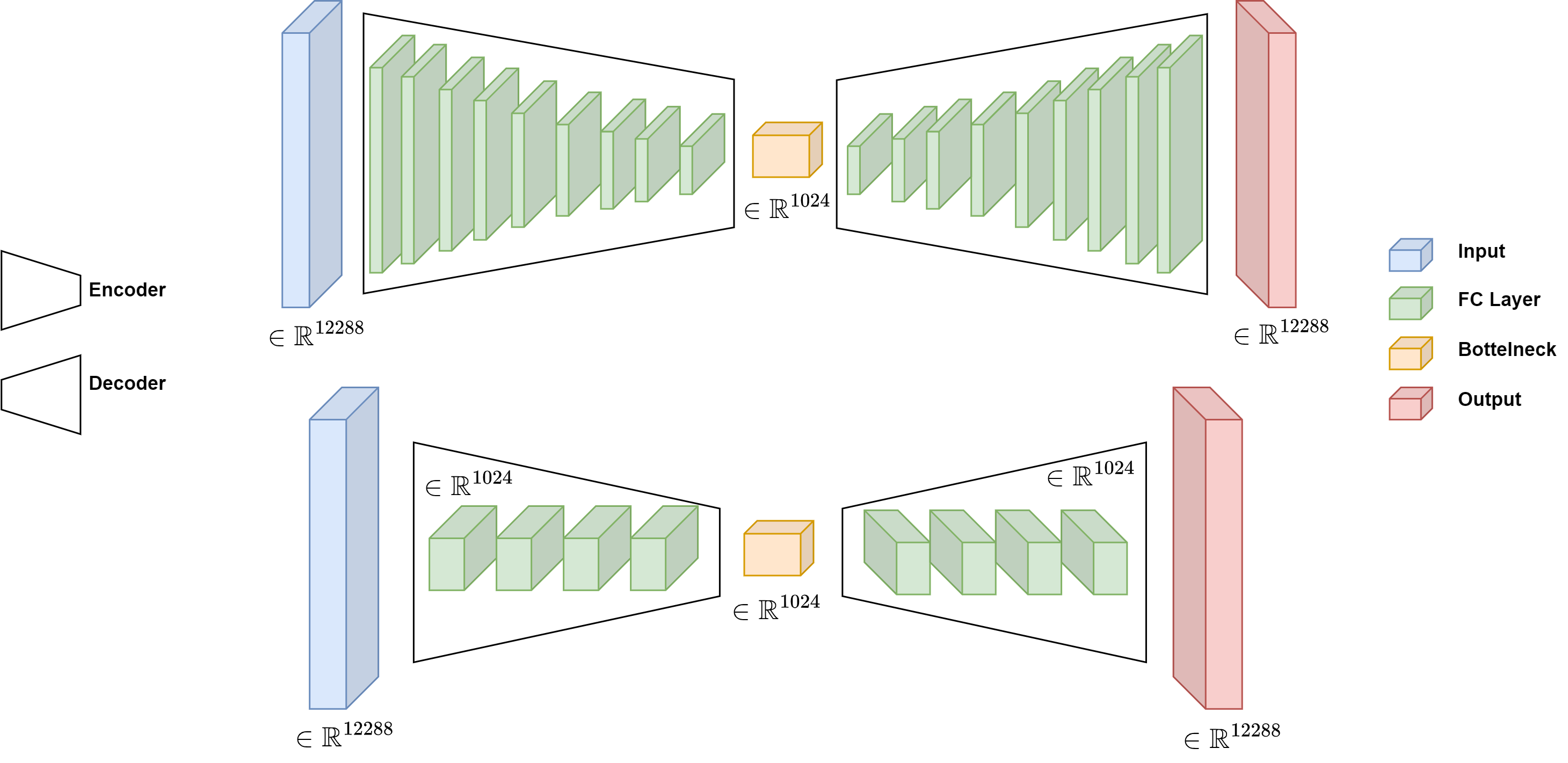

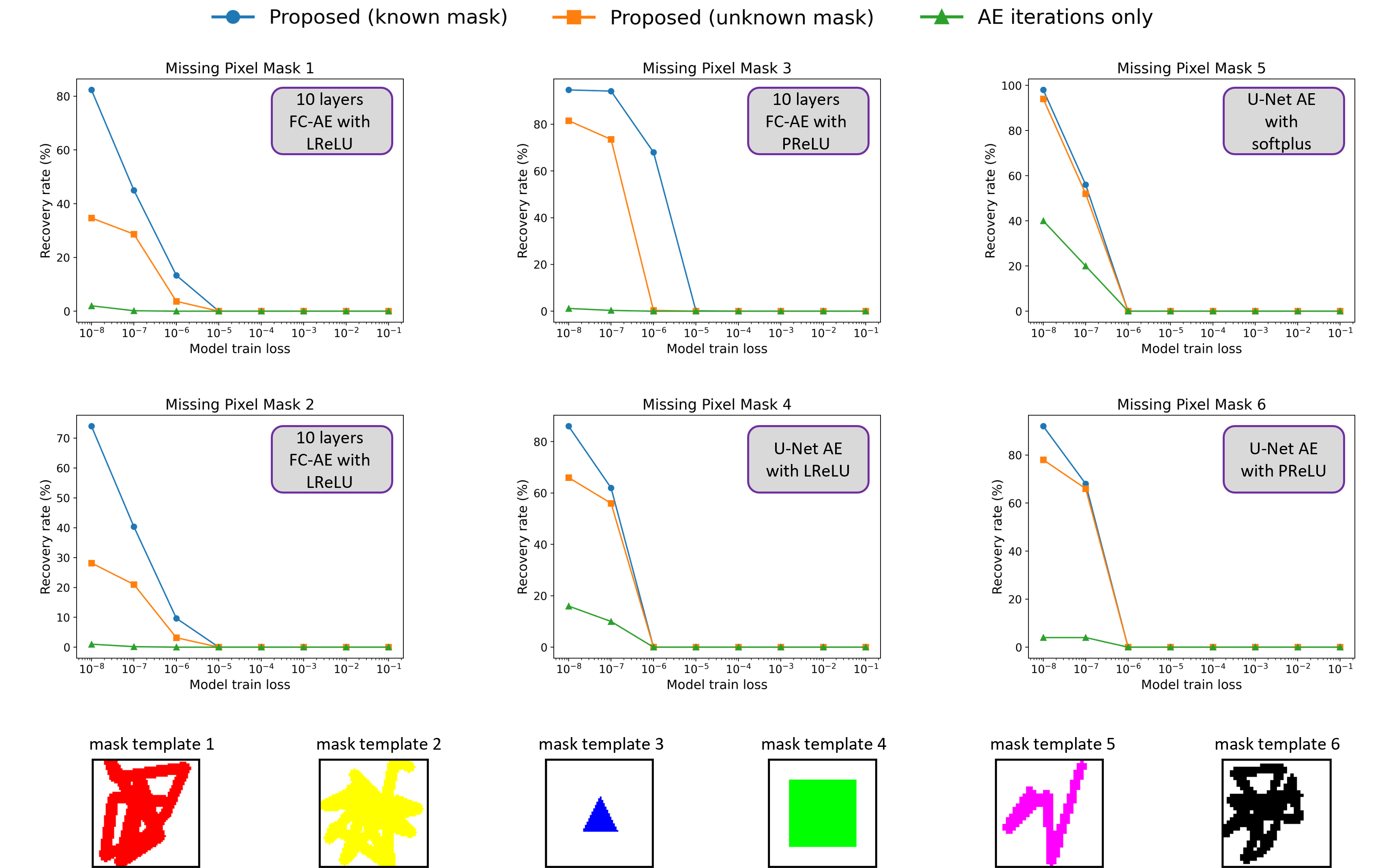

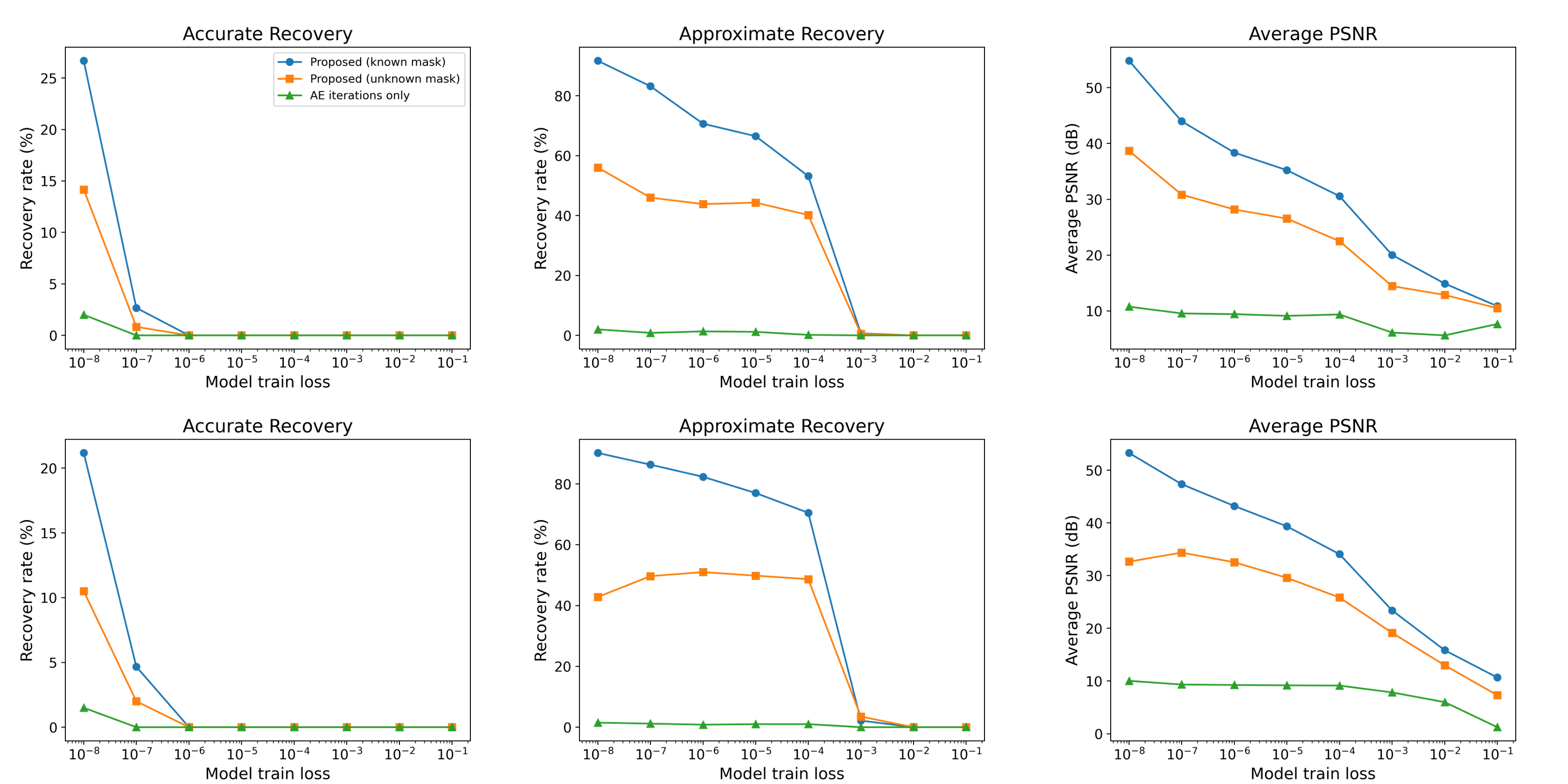

Abstract: Overparameterized autoencoder models often memorize their training data. For image data, memorization is often examined by using the trained autoencoder to recover missing regions in its training images (which were used only in their complete forms during training). In this paper, we propose an inverse problem perspective for the study of memorization. Given a degraded training image, we define the recovery of the original training image as an inverse problem and formulate it as an optimization task. In our inverse problem, we use the trained autoencoder to implicitly define a regularizer for the particular training dataset that we aim to retrieve from. We develop the intricate optimization task into a practical method that iteratively applies the trained autoencoder together with relatively simple computations that estimate and address the unknown degradation operator. We evaluate our method on blind inpainting, where the goal is to recover training images degraded by many missing pixels in an unknown pattern. We examine various deep autoencoder architectures, such as fully connected and U-Net (with various nonlinearities and at diverse training loss values), and show that our method significantly outperforms previous memorization-evaluation methods that recover training data from autoencoders. Importantly, our method also greatly improves recovery performance in settings that were previously considered highly challenging, and even impractical, for such recovery and memorization evaluation.
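The iterative idea described in the abstract (apply the trained autoencoder, then use simple computations to estimate and undo the unknown degradation) can be illustrated with a toy sketch. This is not the paper's algorithm; it is a simplified alternating scheme under stated assumptions. The names `make_toy_autoencoder` and `blind_inpaint`, the parameters `beta` and `tol`, and the stand-in "autoencoder" (a sharp softmax average over stored training vectors rather than a trained network) are all hypothetical. It also assumes that missing pixels are set to zero while true pixel values are at least 0.2.

```python
import math
import random

def make_toy_autoencoder(train, beta=200.0):
    """Hypothetical stand-in for a memorizing autoencoder: it pulls its
    input toward the closest stored training vectors (softmax weighting)."""
    def autoencoder(x):
        # Mean squared distance from x to every stored training vector.
        dist = [sum((t - v) ** 2 for t, v in zip(row, x)) / len(x) for row in train]
        d_min = min(dist)
        # Sharp softmax weights; subtracting d_min keeps exp() well scaled.
        w = [math.exp(-beta * (d - d_min)) for d in dist]
        total = sum(w)
        return [sum(wj * row[i] for wj, row in zip(w, train)) / total
                for i in range(len(x))]
    return autoencoder

def blind_inpaint(y, autoencoder, n_iters=20, tol=0.1):
    """Alternate between applying the autoencoder and re-estimating
    which pixels of the degraded input y can be trusted."""
    x = list(y)
    mask = [True] * len(y)  # True = pixel assumed observed
    for _ in range(n_iters):
        z = autoencoder(x)
        # Estimate the unknown mask: pixels where y disagrees with the
        # autoencoder output are treated as missing (toy assumption).
        mask = [abs(yi - zi) < tol for yi, zi in zip(y, z)]
        # Data consistency: keep trusted pixels from y, fill the rest from z.
        x = [yi if m else zi for yi, zi, m in zip(y, z, mask)]
    return x, mask

random.seed(0)
n_pixels = 256
# Five synthetic "training images" with pixel values in [0.2, 1.0].
train = [[random.uniform(0.2, 1.0) for _ in range(n_pixels)] for _ in range(5)]
# Unknown degradation: roughly 30% of the pixels are zeroed out.
true_mask = [random.random() > 0.3 for _ in range(n_pixels)]
y = [v if m else 0.0 for v, m in zip(train[0], true_mask)]

x_hat, mask_hat = blind_inpaint(y, make_toy_autoencoder(train))
max_err = max(abs(a - b) for a, b in zip(x_hat, train[0]))
print("max abs error:", max_err)
print("mask recovered:", mask_hat == true_mask)
```

In this sketch the mask estimate and the image estimate refine each other: a better image estimate makes the trusted pixels easier to identify, and a better mask keeps corrupted pixels out of the next autoencoder input. A real evaluation would replace the toy function with the trained network and a principled update for the degradation operator.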

