Evaluating Cognitive Maps and Planning in LLMs with CogEval

The paper "Evaluating Cognitive Maps and Planning in LLMs with CogEval" challenges prevailing claims about emergent cognitive abilities in LLMs by testing their capacity for cognitive mapping and planning. The authors introduce CogEval, an evaluation protocol inspired by cognitive science, for systematically assessing the cognitive capabilities of LLMs.

Core Contributions

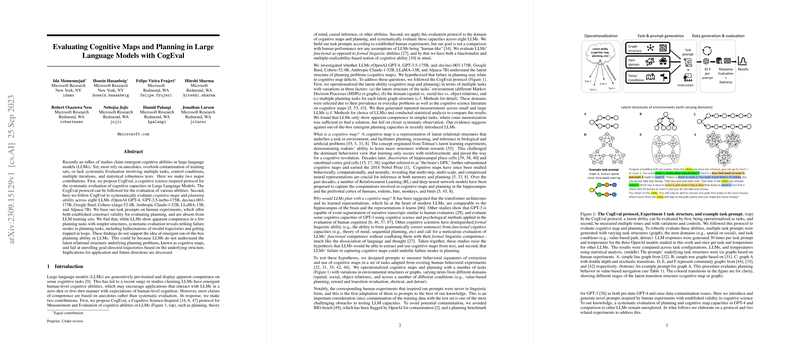

- CogEval Protocol: The paper's foremost contribution is CogEval, a protocol for systematically evaluating cognitive abilities such as theory of mind, causal reasoning, and planning in LLMs. CogEval calls for avoiding dataset contamination, using multiple tasks and conditions, generating multiple responses per prompt, and applying robust statistical analysis. The protocol is not specific to cognitive maps and planning and can be applied to other cognitive constructs in LLMs.

- Analysis Across Models: The authors employ CogEval to assess cognitive maps and planning abilities in eight LLMs, including OpenAI GPT-4 and GPT-3 variants, Google Bard, LLaMA, and others. The evaluation is conducted using task prompts derived from established human cognitive science experiments adapted into novel linguistic formats, ensuring minimal overlap with training datasets.
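The protocol's core loop (multiple tasks and conditions, repeated generations, aggregated scores) can be sketched roughly as follows. This is a minimal illustration, not the authors' code: `run_cogeval_sketch`, `stub_model`, the task tuple layout, the exact-match scoring, and the default of 30 repeats are all assumptions made for the example, and the stub stands in for a real model call.

```python
import random
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical task record: (task_name, condition, prompt, correct_answer).
Task = Tuple[str, str, str, str]
Cell = Tuple[str, str, float]


def run_cogeval_sketch(
    query_model: Callable[[str, float], str],
    tasks: List[Task],
    temperatures: List[float],
    n_repeats: int = 30,  # illustrative default, an assumption of this sketch
) -> Dict[Cell, float]:
    """Return mean accuracy for every (task, condition, temperature) cell."""
    scores: Dict[Cell, List[float]] = defaultdict(list)
    for name, condition, prompt, answer in tasks:
        for temp in temperatures:
            # Repeated sampling captures the variability of stochastic decoding.
            for _ in range(n_repeats):
                reply = query_model(prompt, temp)
                # Exact-match scoring is a simplification chosen for this sketch.
                scores[(name, condition, temp)].append(float(reply.strip() == answer))
    return {cell: mean(values) for cell, values in scores.items()}


_rng = random.Random(0)


def stub_model(prompt: str, temperature: float) -> str:
    """Toy stand-in for an LLM: its error rate grows with temperature."""
    return "B" if _rng.random() >= temperature / 2 else "A"


if __name__ == "__main__":
    demo_tasks = [
        ("shortcut", "graph_A", "prompt text 1", "B"),
        ("detour", "graph_A", "prompt text 2", "B"),
    ]
    for cell, accuracy in run_cogeval_sketch(stub_model, demo_tasks, [0.0, 0.5, 1.0]).items():
        print(cell, round(accuracy, 2))
```

The per-cell accuracies produced this way are the kind of input a downstream statistical analysis would consume, for example to test whether temperature or task condition affects performance.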

Experimental Findings

The systematic examination of LLMs using CogEval reveals that although some LLMs display competence in simpler planning tasks, they generally falter when faced with complex tasks requiring genuine cognitive mapping and planning. Notable failure modes include hallucinating invalid state transitions and being caught in repetitive loops, indicating a lack of emergent latent relational understanding consistent with true cognitive mapping.
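To make the two failure modes concrete, the sketch below checks a model-proposed path against a known environment graph and labels it. The function name `classify_plan`, the label strings, and the four-room graph are hypothetical choices for illustration and are not taken from the paper.

```python
from typing import Dict, List, Set

# Adjacency map: each state lists the states reachable in one step.
Graph = Dict[str, Set[str]]


def classify_plan(graph: Graph, path: List[str], start: str, goal: str) -> str:
    """Label a proposed path as a success or as one of several failure modes."""
    if not path or path[0] != start:
        return "wrong_start"
    visited = {path[0]}
    for current, nxt in zip(path, path[1:]):
        # A step along an edge that does not exist is a hallucinated transition.
        if nxt not in graph.get(current, set()):
            return "invalid_transition"
        # Returning to an already visited state means the plan is looping.
        if nxt in visited:
            return "loop"
        visited.add(nxt)
    return "success" if path[-1] == goal else "goal_not_reached"


if __name__ == "__main__":
    rooms: Graph = {
        "room1": {"room2"},
        "room2": {"room1", "room3"},
        "room3": {"room4"},
        "room4": set(),
    }
    print(classify_plan(rooms, ["room1", "room2", "room3", "room4"], "room1", "room4"))
    print(classify_plan(rooms, ["room1", "room3", "room4"], "room1", "room4"))
    print(classify_plan(rooms, ["room1", "room2", "room1", "room2"], "room1", "room4"))
```

Treating any revisited state as a loop is a deliberately strict choice here; an evaluation that allows backtracking would need a looser rule, such as flagging only repeated cycles.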

Statistical Insights

Statistical analysis within this paper underscores that temperature settings in LLMs, as well as the complexity of task structures and domains, significantly impact performance. However, no substantial evidence was found to support the presence of inherent planning capabilities across any of the evaluated LLMs, even in highly sophisticated models like GPT-4.

Implications and Future Directions

This research holds significant implications for the future development and application of AI technologies. The findings caution against presuming that current-generation LLMs have innate high-level cognitive abilities, particularly in domains that require planning and navigating complex structures. Consequently, there is a case for augmenting LLMs with mechanisms analogous to human executive functioning for memory and planning, such as dedicated executive control systems or specialized architectural components.

Speculatively, future advancements may necessitate a shift towards models exhibiting more nuanced, energy-efficient architectures that mirror the specialized faculties of biological brains. Such models could potentially achieve cognitive tasks with smaller scale and resource footprints, emphasizing effective task and domain specialization.

The paper advances the discourse on the relationship between model size and cognitive functionality, steering the focus toward methodological rigor and architectural design as routes to improving AI capabilities. The findings underscore the need for continued research into the cognitive mechanisms that underpin planning and reasoning in artificial neural networks.