InternLM-XComposer: Advanced Vision-LLM for Text-Image Composition

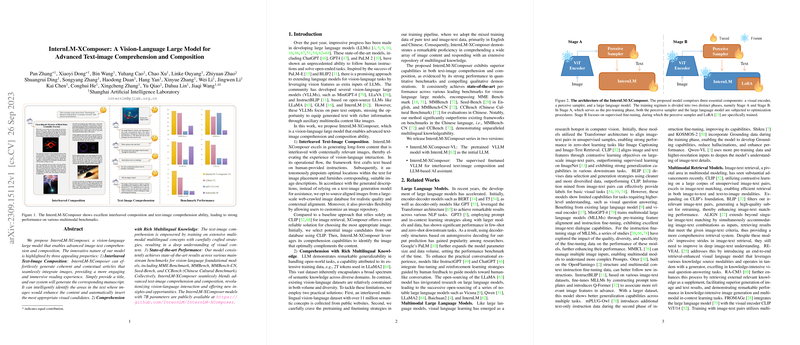

The emergence of vision-language large models (VLLMs) has been a notable advance in artificial intelligence, particularly for tasks that require multimodal understanding and composition. InternLM-XComposer builds on the strengths of existing large language and vision models to offer a new approach to vision-language interaction. It stands out at weaving images coherently into text, and it performs strongly on both comprehension and composition.

Key Features and Methodology

InternLM-XComposer enhances interactivity in vision-language tasks through three primary innovations:

- Interleaved Text-Image Composition: The model can produce content that interleaves text and images, aligning visual and textual elements to aid comprehension and engagement. It identifies passages where an image would help the reader and selects suitable visuals from a large-scale image database. This improves the reading experience and is directly useful for creating media and educational content.

- Multilingual Knowledge Integration: By training on a large multilingual, multimodal database, InternLM-XComposer develops a nuanced understanding of both linguistic and visual data. This extends its applicability across languages and cultural contexts, addressing a known limitation of existing VLLMs, which are often trained on linguistically narrow data.

- State-of-the-Art Performance: InternLM-XComposer outperforms other models on several vision-language benchmarks, including the MME Benchmark, MMBench, and others, with particularly strong results in multilingual comprehension and visual reasoning.
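The interleaved composition feature involves two decisions: where in the text an image would help, and which image from a database fits that spot. The sketch below illustrates the idea only under stated assumptions and is not InternLM-XComposer's actual method, which makes these decisions with the model itself. Here a toy bag-of-words cosine similarity between paragraphs and image captions stands in for learned relevance, a small in-memory list stands in for the large-scale database, and `ImageEntry`, `compose`, `max_images`, and `min_score` are hypothetical names.

```python
"""Hypothetical sketch of interleaved text-image composition.

Assumptions (not taken from InternLM-XComposer's paper or code):
- relevance = bag-of-words cosine similarity between a paragraph and an image caption;
- the "large-scale database" is a small in-memory list of captioned images;
- placement is a greedy pick of the highest-scoring (paragraph, image) pairs.
"""
from __future__ import annotations

import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class ImageEntry:
    path: str      # hypothetical file path of the image
    caption: str   # text description used for matching


def tokenize(text: str) -> Counter[str]:
    # Lowercase word counts; punctuation and digits are ignored.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter[str], b: Counter[str]) -> float:
    # Cosine similarity between two word-count vectors (0.0 if either is empty).
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def compose(paragraphs: list[str], database: list[ImageEntry],
            max_images: int = 2, min_score: float = 0.1) -> list[str]:
    # Score every (paragraph, image) pair by caption similarity.
    pairs = []
    for i, para in enumerate(paragraphs):
        p_vec = tokenize(para)
        for img in database:
            pairs.append((cosine(p_vec, tokenize(img.caption)), i, img.path))

    # Greedy selection: best pairs first; each paragraph and each image used at most once.
    pairs.sort(key=lambda t: (-t[0], t[1], t[2]))
    chosen: dict[int, str] = {}
    used: set[str] = set()
    for score, i, path in pairs:
        if len(chosen) >= max_images or score < min_score:
            break  # sorted descending, so no later pair can qualify
        if i not in chosen and path not in used:
            chosen[i] = path
            used.add(path)

    # Interleave: each paragraph, followed by its image placeholder if one was chosen.
    out: list[str] = []
    for i, para in enumerate(paragraphs):
        out.append(para)
        if i in chosen:
            out.append(f"[IMAGE: {chosen[i]}]")
    return out


if __name__ == "__main__":
    db = [
        ImageEntry("cat.jpg", "a cat sleeping on a sofa"),
        ImageEntry("tower.jpg", "the Eiffel Tower in Paris at night"),
        ImageEntry("rice.jpg", "terraced rice fields in the mountains"),
    ]
    doc = [
        "Our trip began in Paris, where we visited the Eiffel Tower at night.",
        "The hotel breakfast was ordinary.",
        "Back home, the cat was sleeping on the sofa as usual.",
    ]
    for line in compose(doc, db):
        print(line)
```

With these inputs, the tower image is placed after the first paragraph and the cat image after the third, while the middle paragraph gets none. Greedy selection by descending score is a simple way to keep each paragraph and each image to one placement; a real system would presumably replace caption overlap with a learned relevance model.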

Evaluation and Performance

InternLM-XComposer is evaluated through a process that combines human assessment with automated scoring by GPT-4V, so its results are checked both qualitatively and quantitatively. Its performance is competitive with leading systems such as GPT-4V and GPT-3.5, especially in text-image composition and interactivity.

Quantitative benchmarks underscore its capabilities:

- MME and MMBench: The model shows strong understanding and reasoning, including on tasks where visual and textual information are closely interdependent.

- Chinese Cultural Understanding: Its performance on CCBench (Chinese Cultural Benchmark) shows proficiency with cultural and linguistic nuance, reflecting its broad multilingual training.

Implications and Future Directions

InternLM-XComposer has significant practical implications for content generation, multimedia educational tools, and interactive storytelling, where integrated text-image synthesis is central. For research, it opens further exploration of how language and vision can be merged, contributing to AI systems capable of more nuanced, human-like understanding and expression.

As the field advances, future iterations could draw on more dynamic data sources, improving real-time adaptability and possibly supporting more complex interactive elements. Further research might also apply the model's capabilities to other domains, such as virtual reality and human-computer interaction.

In conclusion, InternLM-XComposer is a substantial step forward in vision-language integration, offering a framework for both academic research and practical multimodal interactive systems. Its strong benchmark performance places it among the leading models in this domain.