Introduction

The field of AI has shifted dramatically with the advent of large language models (LLMs), especially in the context of AI agents. This shift is driven by the capabilities of LLMs in natural language understanding, reasoning, and knowledge recall. Integrating these models into AI agents points to a new class of intelligent systems capable of sophisticated behaviors that were beyond the reach of traditional rule-based methods. The paper under discussion examines how LLMs, compared to traditional AI methodologies, strengthen the design and functionality of AI agents.

Fundamental Differences

AI agents have historically relied on built-in rules and algorithms tailored to specific tasks, which often made them competent but rigid. LLMs have changed this by enabling AI agents to understand and generate language, generalize robustly, and draw on a vast knowledge base. The paper explores these differences and indicates that, unlike their predecessors, LLM-based agents can adapt flexibly to different tasks without task-specific training, as exemplified by the Voyager agent's performance in the game Minecraft.

Core Components

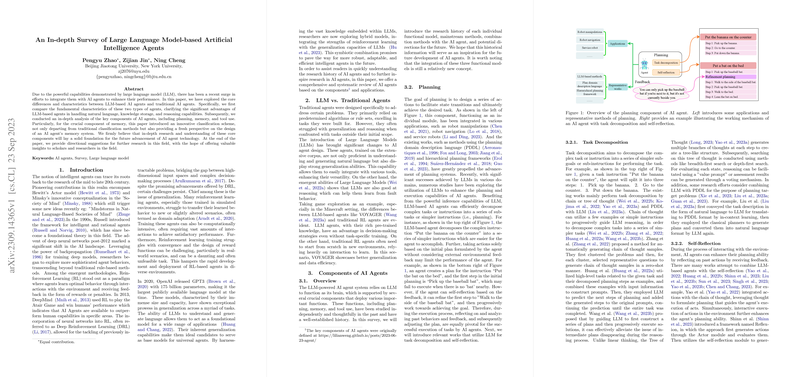

Planning, memory, and tool use form the foundation of AI agent capabilities. Planning means working out a sequence of actions to achieve a goal; LLMs have shown improved capability here through task decomposition and self-reflection. Memory is reclassified, based on LLM characteristics, into training memory (knowledge learned during pre-training), short-term memory (temporary, task-specific information), and long-term memory (information stored in external systems). The paper also emphasizes that these components must be integrated for an agent to perform well.
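To make the three components more concrete, the following is a minimal Python sketch, not the paper's implementation. Every name in it (`AgentMemory`, `plan`, `run_agent`, the toy tools) is a hypothetical chosen for illustration. Planning is reduced to splitting a goal string into steps, where a real agent would ask an LLM to decompose the task, and training memory is stood in for by a plain dictionary.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class AgentMemory:
    # Training memory: knowledge fixed at pre-training time (stand-in: a dict).
    training: Dict[str, str]
    # Short-term memory: temporary, task-specific results for the current task.
    short_term: List[str] = field(default_factory=list)
    # Long-term memory: information kept in an external store (stand-in: a dict).
    long_term: Dict[str, str] = field(default_factory=dict)

    def recall(self, key: str) -> Optional[str]:
        # Prefer externally stored facts, then fall back to pre-trained knowledge.
        if key in self.long_term:
            return self.long_term[key]
        return self.training.get(key)


def plan(goal: str) -> List[str]:
    # Toy task decomposition (assumption): steps are separated by " then ".
    return [step.strip() for step in goal.split(" then ") if step.strip()]


def run_agent(
    goal: str,
    tools: Dict[str, Callable[[str], str]],
    memory: AgentMemory,
) -> List[str]:
    memory.short_term.clear()  # start each task with empty short-term memory
    for step in plan(goal):
        # Each step has the assumed form "tool_name: argument".
        tool_name, _, argument = step.partition(":")
        tool = tools.get(tool_name.strip())
        if tool is None:
            result = f"no tool for '{step}'"
        else:
            result = tool(argument.strip())  # tool use
        memory.short_term.append(result)
    # Persist a summary of the finished task to long-term memory.
    memory.long_term[goal] = "; ".join(memory.short_term)
    return list(memory.short_term)


tools = {
    "upper": str.upper,
    "reverse": lambda text: text[::-1],
}
memory = AgentMemory(training={"capital of France": "Paris"})
results = run_agent("upper: hello then reverse: abc", tools, memory)
print(results)
```

In this sketch the three memory tiers are kept as separate fields so that the integration point is visible: the planner produces steps, tools produce results that land in short-term memory, and the finished task is written to long-term memory for later recall.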

Applications and Vision

AI agents have reached a range of domains, from chatbots such as Pi, which offer productivity tools and emotional companionship, to game agents such as Voyager, which learn dynamically. Coding assistants like GPT Engineer, design platforms like Diagram, and research-focused agents such as ChemCrow and Agent illustrate the diversity of LLM-based agent applications. The paper also touches on collaborative systems in which multiple AI agents work together to accomplish complex tasks. The vision for these agents is not only to perform assigned tasks but also to handle a wider range of general-purpose applications, a step toward artificial general intelligence.

Conclusion

In summary, this paper provides a detailed exploration of LLM-based AI agents and highlights how they differ from their traditional counterparts. It covers the mechanics underpinning these agents, the role of their core components, and the broad spectrum of applications that have emerged. The survey aims to help readers become familiar with current advancements and to inspire future research in AI agent technology.