Overview of "Baichuan 2: Open Large-scale LLMs"

The paper introduces Baichuan 2, a family of open-source, multilingual LLMs with 7 billion and 13 billion parameters. Both models were trained from scratch on 2.6 trillion tokens, a notably large corpus for open models of this size. Baichuan 2 is designed to match or exceed comparable open-source models across a range of benchmarks, with a particular focus on non-English languages, especially Chinese.

Key Contributions

Baichuan 2 adds to the landscape of open-source LLMs in four main ways:

- Extensive Training Dataset: The models were trained on 2.6 trillion tokens drawn from a multilingual corpus, a larger dataset than the one used for the previous generation, Baichuan 1.

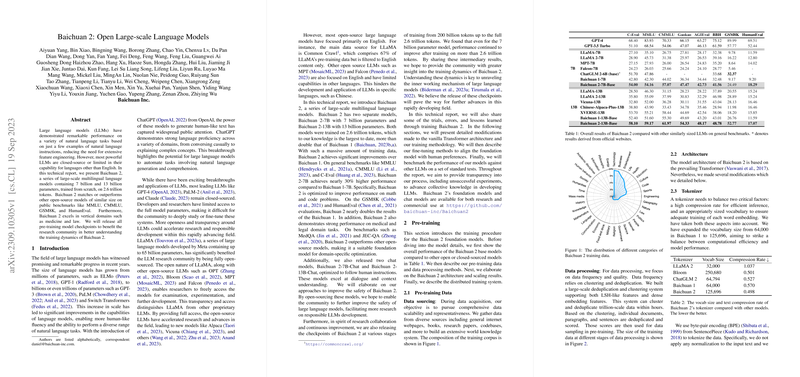

- Benchmark Performance: On benchmarks including MMLU, CMMLU, GSM8K, and HumanEval, Baichuan 2 matches or outperforms open-source models of similar size. The improvement is most pronounced on mathematics and coding tasks.

- Domain Specialization: Baichuan 2 shows strong results in specialized domains such as medicine and law, which makes it a suitable foundation for further domain-specific fine-tuning.

- Open Model Release: All pre-training checkpoints are made available, facilitating deeper insights into the training dynamics of the model, which can be an invaluable resource for research and development.

Technical Modifications

Baichuan 2 incorporates several architectural enhancements and training optimizations:

- Tokenizer and Model Architecture: The tokenizer uses byte-pair encoding (BPE) with a vocabulary enlarged to 125,696 tokens, a size chosen to balance compression rate against computational efficiency better than in previous versions.

- Positional Embeddings and Optimizations: The two model sizes use different positional embeddings: Rotary Positional Embedding (RoPE) for the 7B model and ALiBi for the 13B model. Both also use memory-efficient attention to reduce training cost and the SwiGLU activation function to improve model quality.

- NormHead and Max-z Loss: NormHead normalizes the output embeddings, and the max-z loss penalizes large logits. Both were introduced to stabilize training, and they also make inference more robust.
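
As a rough illustration of the last item, the sketch below computes logits from L2-normalized output-embedding rows (the NormHead idea) and adds a max-z penalty on the largest logit. It is plain Python written for readability, not the authors' implementation. The function names, the `eps` term, and the `2e-4` coefficient are assumptions made for this example.

```python
import math

def norm_head_logits(hidden, weight, eps=1e-12):
    """Logits from L2-normalized output-embedding rows (NormHead-style sketch).

    hidden: final hidden state for one position, a list of d floats
    weight: output embedding matrix, one row of d floats per vocabulary entry
    eps:    small constant to avoid division by zero (assumed, for illustration)
    """
    logits = []
    for row in weight:
        norm = math.sqrt(sum(w * w for w in row))
        dot = sum(h * w for h, w in zip(hidden, row))
        logits.append(dot / (norm + eps))
    return logits

def max_z_loss(logits, coeff=2e-4):
    """Auxiliary penalty coeff * z**2, where z is the largest logit.

    The coefficient is an illustrative default, not a value taken from this summary.
    """
    z = max(logits)
    return coeff * z * z

def cross_entropy(logits, target):
    """Numerically stable softmax cross-entropy for a single position."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

# Toy example: rows 0 and 1 have the same direction but different scale.
hidden = [0.5, -1.0, 2.0]
weight = [
    [3.0, 0.0, 4.0],
    [0.3, 0.0, 0.4],
    [0.0, 1.0, 0.0],
]
logits = norm_head_logits(hidden, weight)
total_loss = cross_entropy(logits, target=0) + max_z_loss(logits)
print(logits, total_loss)
```

Rows 0 and 1 of `weight` differ only in scale, so after normalization they produce the same logit. That scale invariance is the property this sketch is meant to show, and the max-z term grows quadratically with the largest logit, which discourages logits from drifting upward during training.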

Safety and Alignment

Baichuan 2 incorporates a detailed alignment procedure, resulting in chat-specific models that are enhanced for dialogue comprehension and instruction-following abilities. The alignment process includes:

- Supervised and Reinforcement Learning: The chat models were first trained with supervised fine-tuning on human-annotated data, then refined with reinforcement learning from human feedback. The paper describes how reward models are used to score and optimize generated responses.

- Safe Model Development: Safety measures are applied at every stage, from pre-training data filtering through reinforcement learning, with the aim of reducing harmful outputs. The reported safety evaluations show significant improvements without compromising helpfulness.
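
The summary above does not specify how the reward model is trained. A common choice in RLHF pipelines is a pairwise ranking loss over a preferred and a rejected response, and the sketch below shows that loss under this assumption. The function names and the toy reward values are hypothetical and are not taken from the paper.

```python
import math

def pairwise_reward_loss(reward_chosen, reward_rejected):
    """Pairwise ranking loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Computed as softplus(-margin) in a numerically stable way.
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite log(1 + exp(-m)) as -m + log(1 + exp(m)).
    return -margin + math.log1p(math.exp(margin))

def mean_pairwise_loss(pairs):
    """Average the loss over (reward_chosen, reward_rejected) pairs."""
    return sum(pairwise_reward_loss(c, r) for c, r in pairs) / len(pairs)

# Toy scores a reward model might assign to three response pairs (made up).
pairs = [(1.2, -0.3), (0.1, 0.4), (2.0, 2.0)]
print(mean_pairwise_loss(pairs))
```

The loss is small when the preferred response scores well above the rejected one and grows roughly linearly when the ordering is reversed, so minimizing it pushes the reward model to rank responses the way annotators did.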

Implications and Future Directions

This work contributes to the ongoing trend toward open and transparent AI development, emphasizing multilingual capabilities, data efficiency, and domain specialization. The release of intermediate checkpoints is particularly valuable for research into understanding and improving training dynamics. Future work could strengthen Baichuan 2's safety mechanisms, expand its multilingual scope, and further refine its performance in specialized domains.

Overall, Baichuan 2 is a substantial step forward for open-source LLMs, extending the accessibility and applicability of these models beyond predominantly English-centric systems.