Survey on Hallucination in LLMs

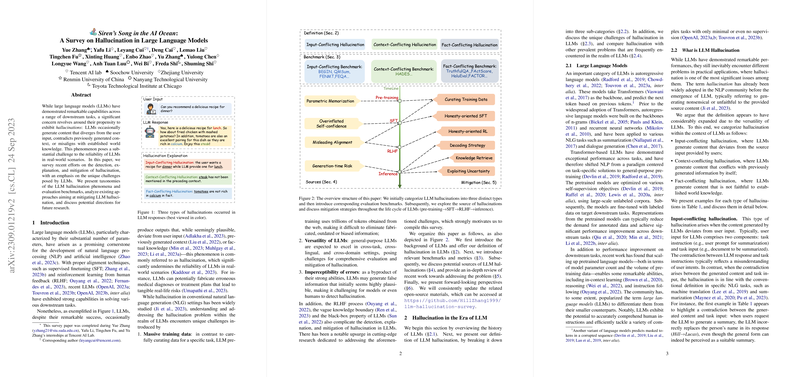

The paper "Siren's Song in the AI Ocean: A Survey on Hallucination in Large Language Models" provides a comprehensive overview of hallucination in LLMs. Hallucination, as defined here, refers to LLM responses that deviate from user input, contradict previously generated content, or conflict with established world knowledge. The survey treats hallucination as a central challenge because it significantly undermines the reliability of LLMs in practice.

Key Contributions

The authors systematically categorize hallucinations in LLMs into three types:

- Input-conflicting Hallucination: The response deviates from the user's input or the given instructions, similar to the inconsistencies observed in task-specific models for machine translation and summarization.

- Context-conflicting Hallucination: The response conflicts with content the model itself generated earlier, which points to difficulty maintaining consistency across a long or multi-turn output.

- Fact-conflicting Hallucination: The response contains information that is not aligned with established factual knowledge. This is the type the survey emphasizes most.
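As a rough illustration of how this taxonomy could be used as an annotation schema, the sketch below encodes the three types as labels. The class names, fields, and toy examples are hypothetical and written for this summary; they are not taken from the survey.

```python
from dataclasses import dataclass
from enum import Enum


class HallucinationType(Enum):
    """The three categories described above."""
    INPUT_CONFLICTING = "input-conflicting"
    CONTEXT_CONFLICTING = "context-conflicting"
    FACT_CONFLICTING = "fact-conflicting"


@dataclass
class AnnotatedResponse:
    """One model response with a hallucination label (hypothetical schema)."""
    user_input: str
    response: str
    label: HallucinationType


# Toy examples invented for illustration only.
examples = [
    AnnotatedResponse(
        user_input="Summarize: Alice met Bob in Paris on Monday.",
        response="Alice met Carol in Paris on Monday.",  # wrong name vs. the input
        label=HallucinationType.INPUT_CONFLICTING,
    ),
    AnnotatedResponse(
        user_input="Tell me about the team lead.",
        response="Ms. Lee became team lead in 2010. Later, team lead Mr. Kim said...",
        label=HallucinationType.CONTEXT_CONFLICTING,  # contradicts its own earlier text
    ),
    AnnotatedResponse(
        user_input="What is the capital of France?",
        response="The capital of France is Lyon.",  # contradicts world knowledge
        label=HallucinationType.FACT_CONFLICTING,
    ),
]

for ex in examples:
    print(f"{ex.label.value}: {ex.response}")
```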

Evaluation Frameworks and Methodologies

The paper reviews several benchmarks and metrics used to evaluate hallucination. Evaluation follows two primary methodologies: the generation approach, which assesses the quality of LLM-generated text, and the discrimination approach, which evaluates the model’s ability to distinguish factual statements from non-factual ones. Human evaluation nevertheless remains an important part of assessing hallucination, because existing automated metrics struggle to capture the nuances of the phenomenon accurately.
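The discrimination approach can be sketched as a simple accuracy computation over labeled statements. This is a minimal sketch, not a benchmark from the survey: `discrimination_accuracy` and `toy_judge` are hypothetical names, and `toy_judge` is a stand-in for a real call that asks an LLM whether a statement is factual.

```python
from typing import Callable, Iterable, Tuple


def discrimination_accuracy(
    examples: Iterable[Tuple[str, bool]],
    judge: Callable[[str], bool],
) -> float:
    """Fraction of statements whose factuality the judge labels correctly."""
    items = list(examples)
    if not items:
        raise ValueError("need at least one labeled statement")
    correct = sum(judge(text) == is_factual for text, is_factual in items)
    return correct / len(items)


def toy_judge(statement: str) -> bool:
    """Hypothetical stand-in for an LLM: calls a statement factual if it mentions Paris."""
    return "Paris" in statement


# Toy labeled data: (statement, is_factual). Invented for illustration.
labeled = [
    ("The capital of France is Paris.", True),
    ("The capital of France is Lyon.", False),
    ("Paris is the capital of Germany.", False),
]

accuracy = discrimination_accuracy(labeled, toy_judge)
print(f"discrimination accuracy: {accuracy:.2f}")
```

The generation approach, by contrast, would score free-form model output, which is harder to automate and is one reason human evaluation remains common.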

Sources and Mitigation Strategies

Sources of Hallucinations

The survey identifies several sources of hallucination that arise throughout the lifecycle of LLMs:

- Training Data: The massive and often noisy corpora used during pre-training can embed outdated or erroneous knowledge in the model.

- Model Architecture and Processes: LLMs can be overconfident, overestimating what they actually know, which leads them to generate unfaithful content.

- Decoding Processes: Sampling-based strategies introduce randomness to make generated text more diverse, and that same randomness can introduce hallucinations.
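To make the decoding point concrete, the sketch below shows how a sampling temperature reshapes a next-token distribution. It is a generic illustration of temperature scaling, not a procedure from the survey, and the logits are hypothetical scores for three candidate tokens.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits: the first token is the well-supported continuation.
logits = [4.0, 2.0, 0.5]

sharp = softmax_with_temperature(logits, temperature=0.5)
flat = softmax_with_temperature(logits, temperature=2.0)

print("T=0.5:", [round(p, 3) for p in sharp])
print("T=2.0:", [round(p, 3) for p in flat])
```

A higher temperature moves probability mass from the top-ranked token toward lower-ranked ones, so sampling is more likely to pick a poorly supported continuation. Greedy decoding, which always takes the highest-probability token, removes this randomness at the cost of diversity.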

Mitigation During Lifecycle Phases

Various strategies are proposed to mitigate hallucinations at different stages:

- Pre-training Phase: Data curation, both automatic and manual, aims to ensure the integrity of pre-training corpora.

- Supervised Fine-Tuning (SFT): Fine-tuning datasets should be constructed carefully, excluding samples that might encourage hallucination.

- Inference-Time Techniques: Decoding strategies can steer generation toward factually accurate and contextually coherent content. In addition, retrieval-augmented approaches give the model access to external factual sources, which helps substantiate generated responses and correct inaccuracies.
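The retrieval-augmented idea can be sketched as two steps: fetch relevant passages, then place them in the prompt so the model can ground its answer. This is a minimal sketch under simplifying assumptions: `retrieve` and `build_prompt` are hypothetical helpers, the retriever ranks by naive word overlap rather than a real search index, and the resulting prompt would still need to be sent to an actual LLM.

```python
from typing import List


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most lowercase words with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Assemble a prompt that asks the model to answer from the passages only."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Toy knowledge source, invented for illustration.
documents = [
    "The Eiffel Tower was completed in 1889.",
    "Mount Everest is the highest mountain on Earth.",
    "The Great Wall of China is visible in many photographs.",
]

query = "When was the Eiffel Tower completed?"
passages = retrieve(query, documents, k=1)
prompt = build_prompt(query, passages)
print(prompt)
```

A production system would replace the word-overlap ranking with a proper retriever, but the structure is the same: retrieved evidence is supplied alongside the question instead of relying on the model's parametric memory alone.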

Future Directions and Challenges

While significant progress has been made, the paper acknowledges that challenges remain in evaluating multilingual and multimodal hallucinations, keeping evaluation consistent across tasks, and adapting strategies for real-time data injection. It also emphasizes the need for more robust, standardized benchmarks for measuring how well hallucination detection and mitigation approaches work.

The paper calls for further research on these unresolved areas and on others, such as the trade-off between a model’s helpfulness and its truthfulness during alignment procedures like RLHF.

In conclusion, addressing hallucination is fundamental to making LLMs reliable enough to support decision-making across applications. The survey lays the groundwork for more refined approaches in both research and industry, and its authors stress that continued progress in aligning LLM outputs with factual knowledge is key to trustworthy real-world use.