Overview of Neural Network Pruning: Techniques, Taxonomy, and Applications

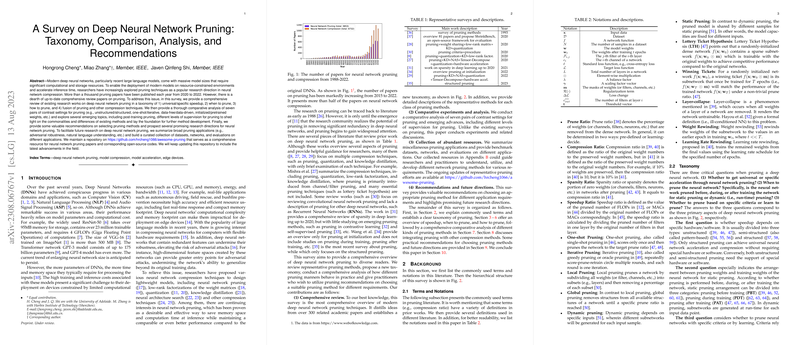

In deep learning, deploying models efficiently in resource-constrained environments is a major research focus. The paper "A Survey on Deep Neural Network Pruning: Taxonomy, Comparison, Analysis, and Recommendations" examines deep neural network (DNN) pruning, a widely studied method for model compression and acceleration. The survey addresses the lack of comprehensive, recent reviews of pruning techniques, and offers a new taxonomy, comparative analyses, and future research directions.

Taxonomy and Techniques

The paper categorizes pruning into three main types based on the granularity at which weights are removed and the kind of speedup that results: unstructured, semi-structured, and structured pruning. Unstructured pruning removes individual weights and is known for reaching high prune ratios with minimal accuracy loss. Because the resulting sparsity is irregular, however, it requires specialized hardware and software support to deliver actual speedup. Structured pruning operates at the level of filters or channels, so it provides a universal speedup and can be readily implemented on standard hardware. Semi-structured pruning, also called pattern-based pruning, removes weights in predefined patterns and so strikes a balance between accuracy and regularity.
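The difference between the three granularities is easiest to see on a small weight matrix. The following is a minimal sketch, not code from the survey: the function names, the toy matrix `w`, the use of L1 row norms for the structured case, and the choice of N:M sparsity (keep `n` weights in every group of `m`) as the semi-structured pattern are all assumptions made for illustration.

```python
from typing import List

Matrix = List[List[float]]


def unstructured_mask(w: Matrix, sparsity: float) -> List[List[int]]:
    """Remove the smallest-magnitude individual weights, wherever they sit."""
    entries = sorted((abs(x), i, j) for i, row in enumerate(w) for j, x in enumerate(row))
    k = int(len(entries) * sparsity)  # number of weights to remove
    mask = [[1] * len(row) for row in w]
    for _, i, j in entries[:k]:
        mask[i][j] = 0
    return mask


def structured_mask(w: Matrix, rows_to_prune: int) -> List[List[int]]:
    """Remove whole rows (stand-ins for filters or channels) with the smallest L1 norm."""
    norms = sorted((sum(abs(x) for x in row), i) for i, row in enumerate(w))
    pruned = {i for _, i in norms[:rows_to_prune]}
    return [[0 if i in pruned else 1 for _ in row] for i, row in enumerate(w)]


def n_m_mask(w: Matrix, n: int = 2, m: int = 4) -> List[List[int]]:
    """Keep the n largest-magnitude weights in every group of m consecutive weights."""
    mask = []
    for row in w:
        row_mask = [0] * len(row)
        for start in range(0, len(row), m):
            group = range(start, min(start + m, len(row)))
            for j in sorted(group, key=lambda j: abs(row[j]), reverse=True)[:n]:
                row_mask[j] = 1
        mask.append(row_mask)
    return mask


# Hypothetical 3x4 weight matrix: each row plays the role of one filter.
w = [
    [0.9, -0.1, 0.05, 0.7],
    [0.02, 0.03, -0.01, 0.04],
    [-0.8, 0.6, 0.2, -0.3],
]

print(unstructured_mask(w, 0.5))  # zeros land wherever weights are small
print(structured_mask(w, 1))      # one whole row is zeroed
print(n_m_mask(w, n=2, m=4))      # exactly two survivors per group of four
```

The unstructured mask scatters its zeros irregularly, which is why it needs sparse kernels to pay off. The structured mask removes a whole row, so the layer can simply be made smaller. The N:M mask sits in between, with a fixed number of zeros per group.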

Pruning pipelines are divided into three kinds according to when pruning happens: Pruning Before Training (PBT), Pruning During Training (PDT), and Pruning After Training (PAT). PBT techniques, such as SNIP and GraSP, evaluate the importance of network components at initialization, which avoids the overhead of training a dense network first. PDT methods, such as sparsity regularization and dynamic sparse training, perform pruning and training concurrently, adjusting the sparse architecture iteratively. PAT is the most common approach; it follows a Pretrain-Prune-Retrain cycle that exploits a pretrained network's performance to extract an effective subnetwork.
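As an illustration of the PAT pipeline, the sketch below runs one Pretrain-Prune-Retrain cycle on a toy linear regression problem. Everything in it is an assumption made for the example: the synthetic data, the hypothetical helpers `train`, `mse`, and `magnitude_mask`, the learning rate, and the use of magnitude as the pruning criterion. It is meant to show the order of the steps, not any specific method from the survey.

```python
import random
from typing import List


def mse(w: List[float], xs: List[List[float]], ys: List[float]) -> float:
    """Mean squared error of the linear model y = w . x."""
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(w, mask, xs, ys, steps: int = 200, lr: float = 0.1) -> List[float]:
    """Plain gradient descent on MSE; weights with mask 0 are held at zero."""
    w = [wi * mi for wi, mi in zip(w, mask)]
    n = len(xs)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w = [(wi - lr * g) * mi for wi, g, mi in zip(w, grad, mask)]
    return w


def magnitude_mask(w: List[float], sparsity: float) -> List[float]:
    """Mask out the given fraction of weights with the smallest magnitude."""
    order = sorted(range(len(w)), key=lambda j: abs(w[j]))
    pruned = set(order[: int(len(w) * sparsity)])
    return [0.0 if j in pruned else 1.0 for j in range(len(w))]


# Synthetic data: two weights matter a lot, two barely matter.
random.seed(0)
true_w = [2.0, 0.1, -3.0, -0.05]
xs = [[random.uniform(-1.0, 1.0) for _ in true_w] for _ in range(64)]
ys = [sum(wi * xi for wi, xi in zip(true_w, x)) for x in xs]

dense = train([0.0] * 4, [1.0] * 4, xs, ys)           # 1. pretrain the dense model
mask = magnitude_mask(dense, sparsity=0.5)            # 2. prune by magnitude
pruned = [wi * mi for wi, mi in zip(dense, mask)]
retrained = train(pruned, mask, xs, ys)               # 3. retrain the surviving weights

print("loss after pruning:  ", mse(pruned, xs, ys))
print("loss after retraining:", mse(retrained, xs, ys))
```

A PBT method would instead compute the mask from the untrained weights before step 1, and a PDT method would update the mask inside the training loop.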

Criteria and Learning-Based Pruning

Pruning strategies utilize various criteria to assess the significance of network weights, including magnitude, norm, saliency, and loss change. Additionally, the paper discusses learning-based pruning, where neural networks are pruned via sparsity-inducing regularization, meta-learning, and reinforcement learning techniques. Such methods aim to optimize performance and prune ratios simultaneously.
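The criteria named above can be written as small scoring functions, where a lower score marks a weight as a better candidate for removal. This is a sketch under stated assumptions: the function names and the toy quadratic loss are hypothetical, saliency is taken here to be the first-order product of weight and gradient, and loss change is measured directly by zeroing one weight at a time.

```python
import math
from typing import Callable, List


def magnitude_scores(w: List[float]) -> List[float]:
    """Magnitude criterion: importance is the absolute value of each weight."""
    return [abs(x) for x in w]


def l2_norm_score(group: List[float]) -> float:
    """Norm criterion: one score for a whole group (e.g. a filter)."""
    return math.sqrt(sum(x * x for x in group))


def saliency_scores(w: List[float], grad: List[float]) -> List[float]:
    """First-order saliency: |weight * gradient| for each weight."""
    return [abs(wi * gi) for wi, gi in zip(w, grad)]


def loss_change_scores(w: List[float], loss: Callable[[List[float]], float]) -> List[float]:
    """Loss-change criterion: how much the loss moves when one weight is set to zero."""
    base = loss(w)
    scores = []
    for j in range(len(w)):
        ablated = list(w)
        ablated[j] = 0.0
        scores.append(abs(loss(ablated) - base))
    return scores


# Toy loss (an assumption for illustration): the second weight is small
# but the loss is far more sensitive to it than to the first.
def toy_loss(w: List[float]) -> float:
    return (w[0] - 1.0) ** 2 + 8.0 * (w[1] - 0.5) ** 2


w = [1.0, 0.5]
print(magnitude_scores(w))               # ranks the second weight as less important
print(loss_change_scores(w, toy_loss))   # ranks the second weight as more important
```

The two criteria disagree on this example, which is the reason the choice of criterion matters: magnitude is cheap but blind to the loss, while loss change is faithful but needs one loss evaluation per candidate.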

Comparative Analysis and Recommendations

The paper offers a comparative analysis of different pruning paradigms, highlighting their respective strengths and challenges. It contrasts unstructured with structured pruning, one-shot with iterative pruning, and static with dynamic pruning, providing insights into their performance and applicability. Notably, the survey also explores the transferability of pruned networks across datasets and architectures, emphasizing the practicality of "winning tickets" in diverse contexts.
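To make the one-shot versus iterative contrast concrete, the sketch below computes the cumulative sparsity after each pruning round. It assumes a schedule in which every round removes the same fraction of the weights that remain, which is a common convention but an assumption of this example rather than a schedule taken from the survey; `iterative_schedule` is a hypothetical helper name.

```python
from typing import List


def iterative_schedule(target_sparsity: float, rounds: int) -> List[float]:
    """Cumulative sparsity after each round when every round removes the
    same fraction of the weights still remaining."""
    if not 0.0 <= target_sparsity < 1.0:
        raise ValueError("target_sparsity must be in [0, 1)")
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    # Fraction of remaining weights kept per round, chosen so that
    # keep_per_round ** rounds == 1 - target_sparsity.
    keep_per_round = (1.0 - target_sparsity) ** (1.0 / rounds)
    return [1.0 - keep_per_round ** (r + 1) for r in range(rounds)]


one_shot = iterative_schedule(0.9, rounds=1)    # a single step straight to the target
iterative = iterative_schedule(0.9, rounds=5)   # five smaller steps, retraining in between

print(one_shot)
print(iterative)
```

With one round the schedule collapses to one-shot pruning. With more rounds each step removes less, at the cost of a retraining pass per round.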

For practitioners, the survey presents guiding principles for selecting appropriate pruning methods based on computational resource availability, desired model size reduction, and speedup requirements.

Applications and Future Directions

Pruning has broad applications across multiple domains, notably in computer vision for tasks like image classification, object detection, and image style translation, as well as in natural language processing and audio signal processing. The paper underscores the need for standardized benchmarks and performance metrics to better evaluate and compare pruning methods across varying tasks and datasets.

Future research directions highlighted in the survey include the further integration of pruning with other compression techniques (such as quantization and neural architecture search), exploration of pruning in self-supervised and unsupervised settings, and addressing the theoretical underpinnings of pruning efficacy. Moreover, improving the interpretability and robustness of pruned models remains a critical challenge that warrants rigorous investigation.

In conclusion, the survey by Cheng et al. compiles an extensive overview of neural network pruning, offering a valuable resource for the ongoing development of efficient deep learning models in diverse applications. It builds a foundation for future work in optimizing neural networks for deployment in environments with computational constraints.