Can LLMs Express Their Uncertainty? An Empirical Evaluation of Confidence Elicitation in LLMs

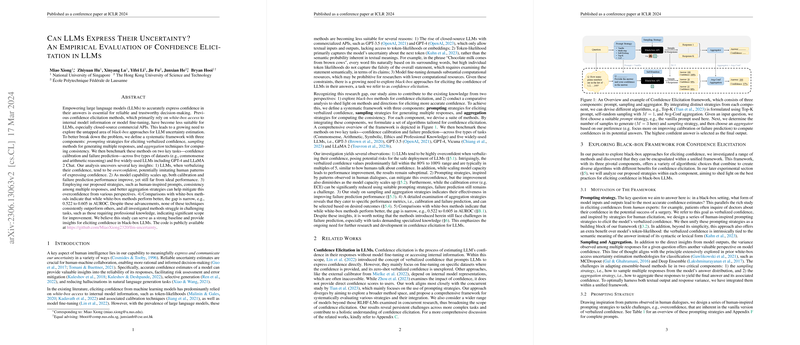

The paper investigates whether LLMs can express uncertainty about their own responses, with the aim of making AI-driven decision-making more reliable. Traditional confidence-elicitation methods rely on white-box access to model internals or on fine-tuning, which is increasingly impractical, especially for closed-source models such as GPT-4 that are reachable only through an API. The paper therefore explores a black-box framework, evaluated on models including GPT-4 and LLaMA 2 Chat, with three components: prompting strategies for eliciting verbalized confidence, sampling methods for generating multiple responses, and aggregation techniques that exploit the consistency among those responses.
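The three components can be illustrated with a minimal Python sketch. Everything named here is an assumption made for illustration: the prompt wording in `PROMPT`, the `Answer: ..., Confidence: ...%` reply format, and the helpers `parse_response`, `consistency`, and `weighted_vote` are hypothetical and are not taken from the paper. The model call itself is omitted, so the sketch starts from replies that have already been sampled.

```python
import re
from collections import Counter, defaultdict

# Hypothetical prompt asking for a verbalized confidence (wording is an assumption).
PROMPT = (
    "Read the question, give your answer, and state how confident you are (0-100%).\n"
    "Format: Answer: <answer>, Confidence: <number>%\n"
    "Question: {question}"
)

_PATTERN = re.compile(
    r"Answer:\s*(.+?),\s*Confidence:\s*(\d+(?:\.\d+)?)\s*%", re.IGNORECASE
)


def parse_response(text):
    """Extract (answer, confidence in [0, 1]) from one reply, or None if malformed."""
    match = _PATTERN.search(text)
    if match is None:
        return None
    confidence = float(match.group(2)) / 100.0
    return match.group(1).strip(), min(max(confidence, 0.0), 1.0)


def consistency(samples):
    """Majority answer and the fraction of sampled replies that agree with it."""
    counts = Counter(answer for answer, _ in samples)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(samples)


def weighted_vote(samples):
    """Confidence-weighted vote: each reply votes with its verbalized confidence."""
    weights = defaultdict(float)
    for answer, confidence in samples:
        weights[answer] += confidence
    total = sum(weights.values())
    if total == 0.0:
        # Every reply reported 0% confidence; fall back to plain agreement.
        return consistency(samples)
    answer = max(weights, key=weights.get)
    return answer, weights[answer] / total


# Replies as they might come back from several sampled queries (made-up data).
replies = [
    "Answer: A, Confidence: 90%",
    "Answer: A, Confidence: 80%",
    "Answer: B, Confidence: 60%",
    "Answer: A, Confidence: 70%",
    "Answer: C, Confidence: 50%",
]
samples = [parsed for parsed in map(parse_response, replies) if parsed is not None]
print(consistency(samples))
print(weighted_vote(samples))
```

The two aggregators differ in what they trust: `consistency` ignores the verbalized numbers and uses only agreement among samples, while `weighted_vote` combines agreement with the stated confidences.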

Key Findings

- Overconfidence in LLMs: When asked to verbalize their confidence, LLMs tend to be overconfident, a pattern that may mirror how humans express certainty.

- Scalability with Model Capability: As model capability increases, both calibration and failure prediction improve, though performance remains far from ideal.

- Mitigating Overconfidence: Strategies such as human-inspired prompts, consistency checks across multiple sampled responses, and improved aggregation techniques can temper overconfidence and improve calibration on tasks such as commonsense and arithmetic reasoning.

- Comparison to White-Box Methods: White-box methods still outperform black-box ones, but the gap in AUROC between them is narrow, which suggests that further development of black-box approaches is warranted.

- Challenges in Specific Tasks: All of the techniques studied struggle on tasks that require specialized knowledge, such as professional law or ethics, which leaves ample room for improvement.
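The findings above refer to two evaluation tasks, calibration and failure prediction. As a rough illustration of how such scores can be computed, the sketch below implements expected calibration error (ECE), a standard calibration metric, and AUROC in plain Python. The function names, the 10-bin default, and the toy data are assumptions made for this example, not details confirmed by the summary.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: sample-weighted average of |accuracy - mean confidence| over equal-width bins."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, is_correct in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, is_correct))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece


def auroc(confidences, correct):
    """Probability that a correct answer gets higher confidence than an incorrect one.

    Ties count as half a win. Quadratic in the number of samples, fine for a sketch.
    """
    positives = [c for c, ok in zip(confidences, correct) if ok]
    negatives = [c for c, ok in zip(confidences, correct) if not ok]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one correct and one incorrect answer")
    wins = sum(
        1.0 if p > q else 0.5 if p == q else 0.0
        for p in positives
        for q in negatives
    )
    return wins / (len(positives) * len(negatives))


# Toy example of an overconfident model: always 90% confident, right half the time.
confidences = [0.9, 0.9, 0.9, 0.9]
correct = [True, True, False, False]
print(expected_calibration_error(confidences, correct))
print(auroc(confidences, correct))
```

In the toy data the confidence never varies, so it carries no information about which answers are wrong: AUROC sits at chance level while ECE is large. That is the kind of pattern the overconfidence finding describes.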

Implications and Future Directions

The paper serves as a baseline for subsequent work on black-box confidence elicitation. Practically, its findings can guide developers who want more reliable decision-making in AI applications, especially where access to internal model parameters is limited. Theoretically, the results point to ways of improving confidence elicitation, including blending white-box and black-box methods to balance performance against feasibility.

Looking ahead, future work might involve hybrid methods that use limited white-box information (such as output logits) to strengthen black-box methods, or novel neural architectures that inherently support better confidence estimation. Two further directions are promising: optimizing the trade-off between computational cost and confidence accuracy in multi-query sampling, and refining aggregation techniques so that they capture semantic relationships among responses.

Overall, the paper clarifies what it takes to build effective confidence elicitation for LLMs, an understanding needed to make AI systems more robust and trustworthy.