LLM Confidence Estimation via Black-Box Access

The paper, authored by Tejaswini Pedapati, Amit Dhurandhar, Soumya Ghosh, Soham Dan, and Prasanna Sattigeri, presents a significant contribution to the field of LLMs. The research focuses on estimating the confidence of LLM responses with only black-box access, addressing a pertinent problem in AI reliability and trustworthiness.

Background and Problem Statement

Confidence estimation in the outputs of LLMs is crucial for several reasons, including trust evaluation, benchmarking, and hallucination mitigation. Unlike traditional tasks where outputs are exact and can be directly compared with ground truths, LLMs often produce varied, semantically equivalent responses. This variability necessitates a sophisticated approach to assess the model's confidence.

Here, the problem is framed formally: given an input prompt and an LLM , the goal is to estimate the probability that the output meets or exceeds a semantic similarity threshold with the expected response . Prior approaches have explored this issue, but most rely on strategies that require extensive internal model access or computational resources, limiting their practicality.

Methodology

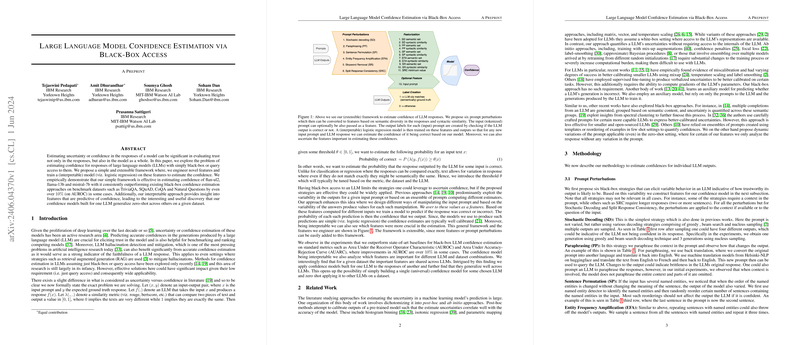

The authors propose a simple but powerful framework utilizing logistic regression to estimate LLM response confidence based on engineered features obtained through various prompt perturbations. The novel aspect lies in the use of interpretability to glean insights from the features, enhancing the robustness of the confidence estimates. Key components of the methodology include:

- Prompt Perturbations:

- Stochastic Decoding (SD): Multiple responses for a single prompt using different decoding strategies.

- Paraphrasing (PP): Back-translation-based paraphrasing of the context in the prompt.

- Sentence Permutation (SP): Reordering sentences containing named entities to create alternative prompts.

- Entity Frequency Amplification (EFA): Repeating sentences with named entities within the context.

- Stopword Removal (SR): Removing stopwords from the context while maintaining the semantic content.

- Split Response Consistency (SRC): Contradiction detection within split responses using an NLI model.

- Featurization:

- Semantic Set: Number of semantically equivalent sets formed from the responses.

- Syntactic Similarity: Average syntactic similarity between responses.

- SRC Minimum Value: Highest contradiction probability in the split responses.

- Training and Validation:

- Logistic regression is employed for its interpretability and efficiency. The model is trained on features derived from the perturbations and the corresponding correctness labels determined by the Rouge score against ground truth responses.

Experimental Results

The framework was evaluated across three prominent LLMs—Mistral-7B-Instruct-v0.2, llama-2-13b chat, and flan-ul2—on four datasets: CoQA, SQuAD, TriviaQA, and Natural Questions (NQ).

Key Findings:

- AUROC and AUARC Performance: The framework consistently outperformed state-of-the-art baselines, with improvements in AUROC by over 10% in several cases and substantial enhancements in AUARC as well.

- Feature Importance: Analysis revealed that features like SD syntactic similarity and SP syntactic similarity were critical across various LLM-dataset combinations, indicating the robustness of these features in estimating confidence.

- Model Transferability: Logistic confidence models trained for one LLM showed effective performance when applied to other LLMs on the same dataset, suggesting the potential for a universal confidence model applicable across multiple LLMs.

Implications and Future Directions

The implications of this research are both practical and theoretical:

- Practical Impact: The proposed framework provides a scalable and interpretable solution for black-box LLM confidence estimation without requiring internal model access or modifications.

- Theoretical Insights: The discovery that certain features are universally important across different LLMs and datasets underscores the potential for developing generalizable confidence models.

Future work could extend this approach to more varied LLMs and datasets, including non-English languages, to further validate the framework's robustness. Additionally, incorporating more sophisticated perturbations and exploring deeper featurization strategies might enhance the confidence estimation further.

Conclusion

This paper by Pedapati et al. introduces an effective and interpretable framework for LLM confidence estimation using black-box access. By leveraging engineered features from prompt perturbations and employing logistic regression, the authors deliver a method that not only outperforms existing approaches but also offers insights into the generalizability of confidence features across different LLMs. This work opens avenues for more reliable and trustworthy AI applications, underscoring the importance of confidence estimation in modern AI systems.