Augmenting LLMs with Symbolic Memory: Insights from ChatDB

Introduction

The paper "ChatDB: Augmenting LLMs with Databases as Their Symbolic Memory" presents an approach to improving the reasoning of large language models (LLMs) by giving them symbolic memory in the form of databases. It targets a weakness of conventional neural memory mechanisms: because they store and retrieve information only approximately, errors creep in and accumulate, which makes them poorly suited to complex reasoning.

Methodology

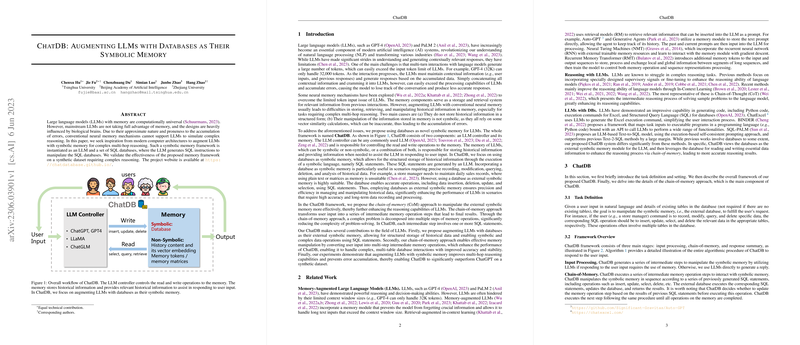

Inspired by modern computer architectures, ChatDB pairs an LLM controller with SQL databases that serve as its symbolic memory. The LLM generates SQL statements to manipulate the databases, so historical information is stored in a structured, exact form rather than as approximate neural representations. Because every read and write is an explicit SQL operation, stored data stays accurate across the steps of multi-hop reasoning tasks.
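To make this read/write loop concrete, here is a minimal Python sketch using the standard-library `sqlite3` module. It is not ChatDB's implementation: `SymbolicMemory` and `llm_generate_sql` are hypothetical names, and the "LLM" simply returns hard-coded SQL so the example runs without a model. The point it illustrates is that writes and reads go through exact SQL statements, so a later question is answered from stored records rather than from the model's recollection.

```python
import sqlite3


class SymbolicMemory:
    """Hypothetical wrapper: a SQL database used as an LLM's external memory."""

    def __init__(self) -> None:
        # In-memory SQLite database standing in for ChatDB's databases.
        self.conn = sqlite3.connect(":memory:")

    def execute(self, sql: str) -> list:
        # Run one LLM-generated SQL statement, fetch any rows, then commit writes.
        cursor = self.conn.execute(sql)
        rows = cursor.fetchall()
        self.conn.commit()
        return rows


def llm_generate_sql(user_input: str) -> list:
    # Hypothetical stand-in for the LLM controller: canned SQL, no model call.
    canned = {
        "We bought 10 apples at $0.50 each.": [
            "CREATE TABLE IF NOT EXISTS purchases (item TEXT, qty INTEGER, unit_price REAL)",
            "INSERT INTO purchases VALUES ('apple', 10, 0.5)",
        ],
        "How many apples have we bought?": [
            "SELECT SUM(qty) FROM purchases WHERE item = 'apple'",
        ],
    }
    return canned[user_input]


memory = SymbolicMemory()
result = []
for utterance in ["We bought 10 apples at $0.50 each.",
                  "How many apples have we bought?"]:
    for sql in llm_generate_sql(utterance):
        result = memory.execute(sql)  # writes return [], reads return rows

print(result)  # [(10,)]
```

In a real system the SQL would come from the model, so validating or sandboxing generated statements before executing them would be a sensible addition.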

ChatDB handles each user input in three stages: input processing, chain-of-memory, and response summarization. The chain-of-memory stage is the distinctive one: it decomposes a complex reasoning task into a sequence of discrete memory operations, each simple enough to carry out reliably. Breaking the task down this way lowers the complexity of each step and improves the model's multi-hop reasoning.
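The three stages can be sketched as three Python functions. This is an illustrative assumption, not the paper's code: the decomposition is canned rather than generated by an LLM, the function names are hypothetical, and passing each step's result to the next through an SQL `?` parameter is just one way to chain memory operations so that later steps build on earlier ones.

```python
import sqlite3


def process_input(question: str) -> list:
    """Stage 1, input processing (hypothetical stand-in for the LLM).

    Returns a chain of (sql, uses_previous_result) steps; canned, not generated.
    """
    if question == "What is our apple stock worth at the current price?":
        return [
            ("SELECT price FROM prices WHERE item = 'apple'", False),
            ("SELECT SUM(qty) * ? FROM stock WHERE item = 'apple'", True),
        ]
    return []  # no memory operations needed


def chain_of_memory(conn: sqlite3.Connection, steps: list):
    """Stage 2, chain-of-memory: run steps in order, feeding each result forward."""
    result = None
    for sql, uses_previous in steps:
        params = (result,) if uses_previous else ()
        result = conn.execute(sql, params).fetchone()[0]
    return result


def summarize(result) -> str:
    """Stage 3, response summarization (hypothetical stand-in for the LLM)."""
    return f"Answer: {result}"


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE prices (item TEXT PRIMARY KEY, price REAL);
    CREATE TABLE stock (item TEXT, qty INTEGER);
    INSERT INTO prices VALUES ('apple', 1.5);
    INSERT INTO stock VALUES ('apple', 4), ('apple', 6);
""")

question = "What is our apple stock worth at the current price?"
answer = summarize(chain_of_memory(conn, process_input(question)))
print(answer)  # Answer: 15.0
```

Each step is a single, checkable query, so an error in one step is visible in its intermediate result instead of being hidden inside one long generation.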

Experimental Evaluation

Experiments were conducted on a synthetic dataset simulating the management of a fruit shop. Its records cover basic operations such as purchasing, selling, and returning goods, and the evaluation questions about these records require multi-hop reasoning to answer.
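A question such as "how many apples are in stock?" is multi-hop because no single record holds the answer. The sketch below assumes a hypothetical three-table schema with invented records (not the paper's dataset) and treats returns as goods returned by customers; answering requires combining purchases, sales, and returns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical schema and invented records, for illustration only.
    CREATE TABLE purchases        (item TEXT, qty INTEGER);  -- bought from suppliers
    CREATE TABLE sales            (item TEXT, qty INTEGER);  -- sold to customers
    CREATE TABLE customer_returns (item TEXT, qty INTEGER);  -- returned by customers
    INSERT INTO purchases        VALUES ('apple', 50), ('apple', 20), ('pear', 30);
    INSERT INTO sales            VALUES ('apple', 35), ('pear', 10);
    INSERT INTO customer_returns VALUES ('apple', 5);
""")

# Current stock = purchased - sold + returned; COALESCE turns "no rows" into 0.
STOCK_SQL = """
SELECT
    (SELECT COALESCE(SUM(qty), 0) FROM purchases        WHERE item = :item)
  - (SELECT COALESCE(SUM(qty), 0) FROM sales            WHERE item = :item)
  + (SELECT COALESCE(SUM(qty), 0) FROM customer_returns WHERE item = :item)
"""


def stock_of(item: str) -> int:
    # Named parameter :item is bound once and reused in all three subqueries.
    return conn.execute(STOCK_SQL, {"item": item}).fetchone()[0]


print(stock_of("apple"))  # 40
print(stock_of("pear"))   # 20
```

The database performs the arithmetic exactly, so the result does not depend on the model correctly recalling and summing every earlier transaction.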

Compared with ChatGPT, ChatDB was markedly more accurate, especially on questions requiring complex reasoning: it answered 82% of the questions correctly, versus 22% for ChatGPT. This gap points to the advantage of symbolic memory, which limits error accumulation across reasoning steps and keeps intermediate results precise.

Implications

Integrating symbolic memory into LLMs, as ChatDB does, has several implications:

- Enhanced Reasoning: By using SQL for memory operations, ChatDB supports precise, step-by-step problem-solving, which is crucial for tasks that demand high accuracy and long-term data management.

- Extended Context: Symbolic memory allows LLMs to maintain a structured and scalable repository of historical data, potentially overcoming limitations of traditional neural memory architectures.

- Practical Applications: Industries relying on data accuracy and complex reasoning, such as finance, healthcare, and logistics, may benefit substantially from the methodologies outlined in ChatDB.

Future Directions

The success of ChatDB suggests several future avenues for AI research:

- Integration with Other Modalities: Extending the symbolic memory framework to incorporate other data modalities, such as images or time-series data, could enhance the versatility of LLMs in various domains.

- Scalability and Efficiency: Further exploration into optimizing the computational efficiency of SQL operations within symbolic memory could improve the scalability of ChatDB in real-world applications.

- Robustness and Generalization: Expanding the chain-of-memory approach to support more diverse types of reasoning tasks could provide insights into developing more robust and generalizable AI systems.

In conclusion, ChatDB provides a compelling case for augmenting LLMs with symbolic memory via databases, paving the way for more advanced AI systems capable of intricate reasoning and data management.