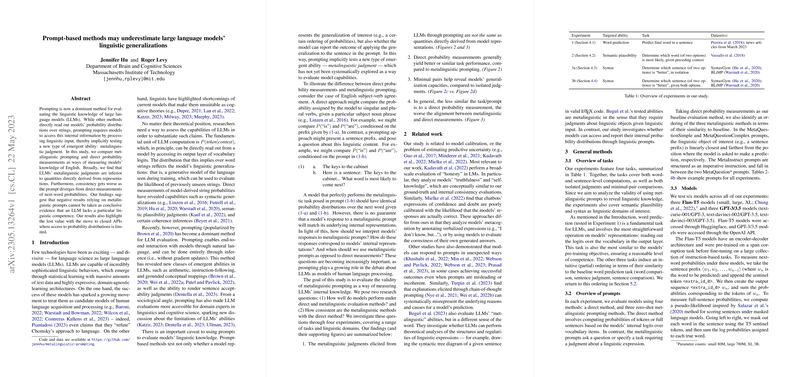

Prompting is Not a Substitute for Probability Measurements in LLMs

The paper "Prompting is not a substitute for probability measurements in large language models" by Jennifer Hu and Roger Levy rigorously compares two prevalent methods for assessing the linguistic knowledge of large language models (LLMs): metalinguistic prompting and direct probability measurement. It offers significant insights into the reliability and validity of each method, particularly for interpreting what LLMs know internally.

Core Findings

The research compares metalinguistic judgments, elicited by prompting the model, against direct probability measurements derived from the model's representations. The authors identify several critical findings:

- Disparity Between Judgment Methods: Metalinguistic judgments elicited via prompting do not consistently match direct probability measurements, and the consistency gets worse as the prompt query diverges from a direct measurement of next-word probabilities. This divergence indicates that metalinguistic judgments may not reliably reflect the linguistic generalizations the model has internally learned.

- Superiority of Direct Methods: Generally, direct probability measurements outperform metalinguistic prompts in assessing model capabilities across various linguistic tasks. This result underscores the limitations of metalinguistic approaches in accurately capturing models' linguistic competencies.

- Utility of Minimal Pairs: Comparing the two sentences of a minimal pair reveals models' linguistic generalizations better than judging sentences in isolation, giving a more nuanced picture of model behavior.

- Implications of Methodological Choice: The choice of evaluation method matters, particularly when interpreting negative results obtained with metalinguistic prompts. Such results cannot be taken as conclusive evidence that a model lacks a particular linguistic generalization.
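To make "direct probability measurement" on minimal pairs concrete, here is a minimal sketch. It assumes a hypothetical toy bigram model (`TOY_BIGRAMS`, with invented probabilities) standing in for an LLM; with a real model one would instead sum the log-probabilities the model assigns to each token of the sentence. The names `sentence_logprob`, `prefers_grammatical`, and `FLOOR` are illustrative, not taken from the paper.

```python
import math
from typing import Dict, List, Tuple

# Probability assigned to any bigram missing from the toy table (an assumption).
FLOOR = 1e-6

# Hypothetical toy bigram model; all probabilities are invented for illustration.
TOY_BIGRAMS: Dict[Tuple[str, str], float] = {
    ("<s>", "the"): 0.6,
    ("the", "dog"): 0.05,
    ("the", "dogs"): 0.04,
    ("dog", "barks"): 0.2,
    ("dog", "bark"): 0.01,
    ("dogs", "bark"): 0.2,
    ("dogs", "barks"): 0.01,
    ("barks", "</s>"): 0.5,
    ("bark", "</s>"): 0.5,
}


def sentence_logprob(words: List[str]) -> float:
    """Sum of log P(w_i | w_{i-1}) over the sentence, including the end token."""
    tokens = ["<s>"] + words + ["</s>"]
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        total += math.log(TOY_BIGRAMS.get((prev, cur), FLOOR))
    return total


def prefers_grammatical(good: str, bad: str) -> bool:
    """Minimal-pair test: is the grammatical sentence assigned higher probability?"""
    return sentence_logprob(good.split()) > sentence_logprob(bad.split())


# (grammatical, ungrammatical) minimal pairs differing only in verb agreement.
pairs = [
    ("the dog barks", "the dog bark"),
    ("the dogs bark", "the dogs barks"),
]
accuracy = sum(prefers_grammatical(g, b) for g, b in pairs) / len(pairs)
print(accuracy)  # 1.0
```

Comparing raw total log-probabilities is most straightforward when the two sentences differ minimally and have the same length, as here; the paper's exact scoring procedure may differ from this sketch.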

Implications

The paper bears on both theoretical questions about LLM capabilities and the practical evaluation of LLMs. Its distinction between competence (the model's probability distributions) and performance (its behavioral responses to prompts) offers a useful framework for interpreting model behavior. This aligns with broader discussions in cognitive science about separating knowledge from task performance.
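One way to make the competence/performance gap measurable is to check how often a model's prompted judgment agrees with its own probability-based preference on the same minimal pairs. The sketch below assumes you already have, for each pair, the log-probability margin and the model's answer to a metalinguistic prompt such as "Which sentence is more grammatical, 1 or 2?"; the prompt wording, the function name `agreement_rate`, and the example values are all hypothetical, and the paper's own consistency analysis may be computed differently.

```python
from typing import List


def agreement_rate(direct_margins: List[float], prompted_choices: List[str]) -> float:
    """Fraction of items where the prompted answer matches the direct preference.

    direct_margins[i] = log P(sentence 1) - log P(sentence 2) for item i.
    prompted_choices[i] is the model's answer ("1" or "2") to a metalinguistic prompt.
    Ties (margin == 0) are counted as preferring sentence 2.
    """
    if len(direct_margins) != len(prompted_choices):
        raise ValueError("inputs must have the same length")
    if not direct_margins:
        raise ValueError("inputs must be non-empty")
    matches = 0
    for margin, choice in zip(direct_margins, prompted_choices):
        direct_choice = "1" if margin > 0 else "2"
        matches += direct_choice == choice
    return matches / len(direct_margins)


# Made-up values for four minimal pairs.
margins = [2.1, 0.4, -1.3, 3.0]
answers = ["1", "2", "2", "1"]
print(agreement_rate(margins, answers))  # 0.75
```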

Practical Considerations

In practice, the research underscores the value of direct access to LLMs' probability distributions and, conversely, what is lost with closed APIs that restrict such access. Without it, research that depends on model-assigned probabilities, for example using them for Bayesian inference or for scoring multiple-choice options, becomes difficult or impossible.
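As a sketch of why those probabilities matter downstream, the function below renormalizes per-option log-probability scores over a closed set of answers. With a uniform prior this is simply a softmax over option scores (a common way to do multiple-choice evaluation); with a non-uniform prior, and scores interpreted as likelihoods, it is Bayes' rule over a finite hypothesis set. The function name `posterior_over_options` and the scores are hypothetical; with an API that returns only generated text, these inputs would not be available.

```python
import math
from typing import Dict, Optional


def posterior_over_options(
    log_scores: Dict[str, float],
    prior: Optional[Dict[str, float]] = None,
) -> Dict[str, float]:
    """Posterior proportional to exp(log_score) * prior, normalized over the options.

    `log_scores` maps each option to a log-probability read from a model;
    `prior` defaults to uniform. Uses the log-sum-exp trick for stability.
    """
    if prior is None:
        prior = {o: 1.0 / len(log_scores) for o in log_scores}
    log_joint = {o: lp + math.log(prior[o]) for o, lp in log_scores.items()}
    m = max(log_joint.values())
    z = sum(math.exp(v - m) for v in log_joint.values())
    return {o: math.exp(v - m) / z for o, v in log_joint.items()}


# Hypothetical log P(option | question) scores; the numbers are made up.
scores = {"A": -2.3, "B": -0.7, "C": -4.1}
posterior = posterior_over_options(scores)
best = max(posterior, key=posterior.get)
print(best)  # B
```

Passing a strong prior, such as `{"A": 0.9, "B": 0.05, "C": 0.05}`, shifts the most probable option to "A" for these scores, which is the kind of probabilistic combination that text-only outputs cannot support.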

Future Directions

The paper's findings motivate continued development of open models that give full access to token probabilities. Extending the comparison to more diverse language settings and to additional prompting strategies could further clarify how metalinguistic judgments and direct measurements relate when evaluating LLMs.

In conclusion, Hu and Levy critically examine the roles of prompting and direct probability measurement in LLM assessment and offer concrete guidance on which methods best capture a model's linguistic abilities. The work invites a reevaluation of prevalent evaluation practices, calling for careful attention to methodological choices and to model transparency.