GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints

Introduction

The paper "GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints" addresses a trade-off between two attention mechanisms in transformer models. Autoregressive decoding with multi-head attention (MHA) is slow because every decoding step must load the decoder weights and the keys and values of all attention heads from memory, so inference is limited by memory bandwidth rather than by compute. Multi-query attention (MQA) shares a single key head and a single value head across all query heads, which greatly reduces this overhead but can degrade quality and make training unstable. The authors make two contributions: a cost-efficient uptraining recipe that converts existing multi-head checkpoints into MQA models, and grouped-query attention (GQA), a generalization of MQA that balances inference speed and model quality.
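To make the memory-bandwidth argument concrete, the sketch below estimates the size of the key/value cache that a decoder reads at each step as a function of the number of key/value heads. The configuration (24 layers, 64 query heads of width 128, batch 8, 2,048 cached tokens, 16-bit values) is a hypothetical example, not a model from the paper, and the estimate ignores weights and all other memory traffic.

```python
def kv_cache_bytes(batch: int, seq_len: int, num_layers: int,
                   num_kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Rough size of the key/value cache read at every decoding step.

    The leading factor 2 counts the key tensor and the value tensor separately.
    """
    return 2 * batch * seq_len * num_layers * num_kv_heads * head_dim * bytes_per_value


# Hypothetical configuration, chosen only for illustration.
config = dict(batch=8, seq_len=2048, num_layers=24, head_dim=128)
num_query_heads = 64

for name, kv_heads in [("MHA", num_query_heads), ("GQA-8", 8), ("MQA", 1)]:
    size = kv_cache_bytes(num_kv_heads=kv_heads, **config)
    print(f"{name}: {size / 2**30:g} GiB")
# Prints 12 GiB for MHA, 1.5 GiB for GQA-8 and 0.1875 GiB for MQA.
```

Because the cache grows linearly with the number of key/value heads, sharing key/value heads shrinks the data loaded per step by the same factor.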

Methodology

Uptraining to Multi-Query Attention

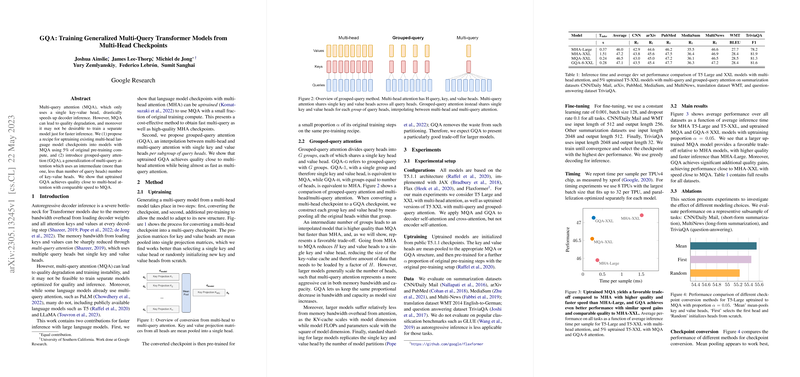

The paper introduces a two-step uptraining approach to convert multi-head models into multi-query models. First, the checkpoint is converted by mean-pooling the key and value projection matrices of all heads into a single key projection matrix and a single value projection matrix. Second, the converted model is further pre-trained for a small proportion of its original training steps, only 5% in the main experiments, so that it can adapt to the new structure. This provides a pragmatic path to faster inference without training a separate, efficiency-oriented model from scratch.
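A minimal NumPy sketch of the conversion step follows. It assumes each key or value projection is a fused kernel of shape (d_model, num_heads * d_head) with heads laid out contiguously along the last axis; the function names `split_heads`, `mean_pool_heads`, and `convert_kv_to_mqa` are hypothetical, and a real checkpoint's layout must be checked before applying anything like this. Only the key and value projections change; query and output projections are left as they are.

```python
import numpy as np


def split_heads(fused: np.ndarray, num_heads: int) -> np.ndarray:
    """Reshape a fused (d_model, num_heads * d_head) kernel to (num_heads, d_model, d_head).

    Assumes (hypothetically) that heads are contiguous along the last axis.
    """
    d_model, total = fused.shape
    if total % num_heads != 0:
        raise ValueError("projection width is not divisible by num_heads")
    return fused.reshape(d_model, num_heads, total // num_heads).transpose(1, 0, 2)


def mean_pool_heads(per_head: np.ndarray) -> np.ndarray:
    """Collapse per-head projections (num_heads, d_model, d_head) into one (d_model, d_head)."""
    return per_head.mean(axis=0)


def convert_kv_to_mqa(w_k: np.ndarray, w_v: np.ndarray, num_heads: int):
    """Turn fused multi-head key/value kernels into single-head MQA kernels."""
    return (mean_pool_heads(split_heads(w_k, num_heads)),
            mean_pool_heads(split_heads(w_v, num_heads)))


# Toy example: d_model=16 and 4 heads of width 8 (shapes chosen for illustration).
rng = np.random.default_rng(0)
w_k = rng.normal(size=(16, 4 * 8))
w_v = rng.normal(size=(16, 4 * 8))
w_k_mqa, w_v_mqa = convert_kv_to_mqa(w_k, w_v, num_heads=4)
print(w_k_mqa.shape, w_v_mqa.shape)  # (16, 8) (16, 8)
```

The converted weights are only a starting point; the short uptraining phase then continues pre-training from them. The paper's ablations found mean-pooling to work better than keeping a single head or initializing the new key and value heads randomly.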

Grouped-Query Attention

The proposed GQA mechanism interpolates between MHA and MQA. It divides the query heads into G groups, and all query heads within a group share a single key head and a single value head; the paper denotes this variant GQA-G. GQA-1 is equivalent to MQA, and GQA with G equal to the number of heads is equivalent to MHA. When a multi-head checkpoint is converted to GQA, each group's key and value heads are constructed by mean-pooling the original heads in that group. An intermediate number of groups lets GQA approach the quality of MHA while keeping inference speed close to that of MQA.
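The grouping can be sketched as a single-example attention forward pass. This sketch assumes query tensors of shape (num_query_heads, seq_q, d_head), key/value tensors with one head per group, and contiguous groups of query heads; it omits batching, masking, and the input and output projections, and uses the common 1/sqrt(d_head) scaling. It illustrates the idea rather than reproducing the paper's implementation.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the maximum along the axis for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def grouped_query_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Grouped-query attention.

    q: (num_query_heads, seq_q, d_head)
    k, v: (num_groups, seq_kv, d_head), one shared key/value head per group
    Returns: (num_query_heads, seq_q, d_head)
    """
    num_query_heads, _, d_head = q.shape
    num_groups = k.shape[0]
    if num_query_heads % num_groups != 0:
        raise ValueError("num_query_heads must be divisible by num_groups")
    group_size = num_query_heads // num_groups
    # Query heads g*group_size .. (g+1)*group_size - 1 share key/value head g,
    # so each key/value head is repeated once per query head in its group.
    k_shared = np.repeat(k, group_size, axis=0)
    v_shared = np.repeat(v, group_size, axis=0)
    scores = q @ k_shared.transpose(0, 2, 1) / np.sqrt(d_head)
    return softmax(scores, axis=-1) @ v_shared


# Toy example: 8 query heads split into 2 groups (GQA-2), head width 16.
rng = np.random.default_rng(0)
q = rng.normal(size=(8, 5, 16))
k = rng.normal(size=(2, 7, 16))
v = rng.normal(size=(2, 7, 16))
out = grouped_query_attention(q, k, v)
print(out.shape)  # (8, 5, 16)
```

Passing a single key/value head recovers MQA, and passing one per query head recovers MHA. The explicit repeat is only for readability: the practical saving comes from caching and loading just G key/value heads at each decoding step.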

Experiments and Results

Experimental Setup

All models use the T5.1.1 architecture in Large and XXL sizes and are evaluated on summarization (CNN/Daily Mail, arXiv, PubMed, MediaSum, Multi-News), translation (WMT 2014 English-to-German), and question answering (TriviaQA). Uptrained models are initialized from public T5.1.1 checkpoints and uptrained with 5% of the original pre-training steps. MQA and GQA are applied to decoder self-attention and cross-attention, but not to encoder self-attention. Ablation studies examine the checkpoint conversion method, the proportion of uptraining steps, and the number of GQA groups.

Performance

The paper's headline results table and its figure plotting performance against inference time show that uptrained MQA models run substantially faster than MHA models with only minor quality loss; uptrained MQA-XXL is both faster and higher quality than MHA-Large. GQA narrows the remaining quality gap while keeping most of MQA's speed advantage. For instance, GQA with 8 groups (GQA-8-XXL) achieves average performance close to MHA-XXL at an inference time close to that of MQA-XXL.

Implications and Future Work

The proposed uptraining and GQA methods have both practical and conceptual implications. Practically, they offer a cost-effective way to make existing large transformer models faster at inference with little loss in quality. Conceptually, they show that intermediate attention mechanisms can balance the trade-off between model quality and inference cost.

Future research could investigate GQA in decoder-only models, which are increasingly popular and were not evaluated in the paper. In addition, the training instability the authors observed with MQA, particularly when fine-tuning on long-input tasks, calls for further analysis and possibly methodological improvements.

Conclusion

This paper presents a practical approach to faster transformer inference: uptraining existing multi-head checkpoints and introducing the grouped-query attention mechanism. The findings indicate that uptraining multi-head models to MQA and adopting GQA can deliver substantial inference speedups without significant quality degradation, contributing to more efficient deployment of large transformer models.

Limitations

The paper acknowledges several limitations. Summarization tasks are evaluated with ROUGE scores, which are an imperfect measure of summary quality. In addition, the uptrained models were not compared against comparable models trained from scratch, and the experiments were limited to encoder-decoder models. Future studies should address these aspects to validate and potentially improve the presented methods.

Acknowledgements

The researchers express gratitude to colleagues at Google Research for their insightful discussions and support.

Overall, this paper provides a thoughtful exploration of enhancing transformer model efficiency through innovative attention mechanisms, demonstrating a promising direction for future advancements in AI model optimization.