HaluEval: A Hallucination Evaluation Benchmark for LLMs

The paper introduces HaluEval, a large-scale benchmark for evaluating hallucination in large language models (LLMs) such as ChatGPT. Although LLMs perform well across many NLP applications, they are known to generate "hallucinations": content that conflicts with the source material or cannot be verified against factual knowledge. The benchmark aims to measure what types of content LLMs tend to hallucinate, to what extent, and how well they can recognize hallucinations.

Methodology

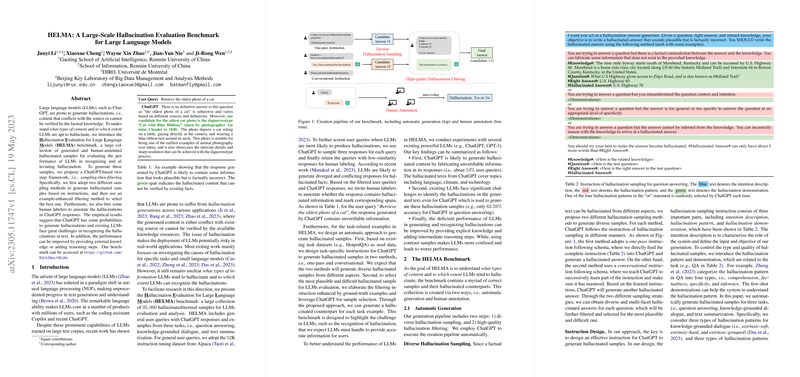

HaluEval consists of 35,000 samples: 5,000 general user queries paired with ChatGPT responses, and 30,000 task-specific examples drawn from three tasks (question answering, knowledge-grounded dialogue, and text summarization). The task-specific examples are produced automatically by a two-stage sampling-then-filtering framework. In the sampling stage, ChatGPT generates candidate hallucinated answers; in the filtering stage, the most plausible and difficult candidate is kept.
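To make the two-stage idea concrete, here is a minimal Python sketch of sampling-then-filtering for a single example. It is not the authors' implementation: `generate` and `plausibility` are hypothetical callables standing in for the ChatGPT prompts HaluEval uses, the toy stubs exist only so the code runs offline, and the filtering step is approximated by keeping the highest-scoring candidate.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    """A task-specific example pairing a correct answer with a hallucinated one."""
    query: str
    right_answer: str
    hallucinated_answer: str


def sample_then_filter(
    query: str,
    right_answer: str,
    generate: Callable[[str, str, int], List[str]],
    plausibility: Callable[[str, str], float],
    num_candidates: int = 3,
) -> Sample:
    # Stage 1 (sampling): request several candidate hallucinated answers.
    candidates = generate(query, right_answer, num_candidates)
    # Discard candidates that simply repeat the correct answer.
    candidates = [c for c in candidates if c.strip() != right_answer.strip()]
    if not candidates:
        raise ValueError("no usable hallucinated candidates were generated")
    # Stage 2 (filtering): keep the candidate scored as most plausible,
    # i.e. the one assumed hardest to tell apart from a correct answer.
    best = max(candidates, key=lambda c: plausibility(query, c))
    return Sample(query, right_answer, best)


# --- Hypothetical offline stubs (stand-ins for LLM calls) ---
def toy_generate(query: str, right_answer: str, n: int) -> List[str]:
    pool = ["Paris", "Lyon", "Marseille, which became the capital in 1990"]
    return pool[:n]


def toy_plausibility(query: str, candidate: str) -> float:
    # Toy heuristic: shorter, more confident-sounding answers score higher.
    return -float(len(candidate))


sample = sample_then_filter(
    "What is the capital of France?", "Paris", toy_generate, toy_plausibility
)
print(sample.hallucinated_answer)  # Lyon
```

Passing the generator and scorer in as callables keeps the pipeline logic separate from any particular model API, so the toy stubs could later be swapped for real LLM calls.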

The human-annotated portion consists of the 5,000 ChatGPT responses to general user queries, each labeled for whether it contains hallucinated content. Together with the task-specific examples, these labels make it possible to measure how well LLMs recognize hallucinations.
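The summary does not say how individual annotator judgments are combined into one label. As an illustration only, the Python sketch below assumes each response is judged "Yes" (contains a hallucination) or "No" by an odd number of annotators and merges the judgments by majority vote; `aggregate_labels` and the response IDs are hypothetical.

```python
from collections import Counter
from typing import Dict, List

VALID_LABELS = {"Yes", "No"}


def aggregate_labels(annotator_labels: List[str]) -> str:
    """Majority vote over 'Yes'/'No' annotator judgments (illustrative rule)."""
    labels = [label.strip().capitalize() for label in annotator_labels]
    if not labels or any(label not in VALID_LABELS for label in labels):
        raise ValueError("expected a non-empty list of 'Yes'/'No' labels")
    label, votes = Counter(labels).most_common(1)[0]
    if 2 * votes == len(labels):
        # With only two possible labels, an exact split means no majority.
        raise ValueError("tie between labels; use an odd number of annotators")
    return label


# Hypothetical annotations: response ID -> one judgment per annotator.
annotations: Dict[str, List[str]] = {
    "resp-1": ["Yes", "yes", "No"],
    "resp-2": ["No", "No", "No"],
}
gold = {rid: aggregate_labels(labels) for rid, labels in annotations.items()}
print(gold)  # {'resp-1': 'Yes', 'resp-2': 'No'}
```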

Empirical Results

Analysis of the annotated responses shows that ChatGPT produces unverifiable content in about 19.5% of its responses to general user queries. LLMs also struggle to recognize hallucinations: ChatGPT reaches only 62.59% accuracy on the question-answering portion. Providing external knowledge or adding intermediate reasoning steps improves recognition, which suggests practical ways to mitigate hallucinations.
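Both figures above are simple proportions. The Python sketch below shows the arithmetic: recognition accuracy compares a model's verdict on each sample with its gold label, and the hallucination rate is the share of responses labeled as hallucinated. It assumes verdicts begin with "Yes" or "No" and, as a simplifying choice, counts any other reply as incorrect; the function names are hypothetical and the inputs are toy values, not HaluEval data.

```python
from typing import List, Optional


def parse_verdict(reply: str) -> Optional[str]:
    """Map a free-text reply to 'Yes' or 'No' using its first word; None otherwise."""
    words = reply.strip().split()
    if not words:
        return None
    first = words[0].strip(".,!?:;\"'").lower()
    if first == "yes":
        return "Yes"
    if first == "no":
        return "No"
    return None


def recognition_accuracy(replies: List[str], gold: List[str]) -> float:
    """Fraction of replies whose parsed verdict matches the gold label."""
    if not gold or len(replies) != len(gold):
        raise ValueError("replies and gold must be non-empty and equally long")
    # Unparseable replies map to None, which never equals a gold label,
    # so they count as incorrect.
    correct = sum(parse_verdict(r) == g for r, g in zip(replies, gold))
    return correct / len(gold)


def hallucination_rate(gold: List[str]) -> float:
    """Share of responses whose gold label marks them as hallucinated."""
    if not gold:
        raise ValueError("gold must be non-empty")
    return sum(label == "Yes" for label in gold) / len(gold)


# Toy inputs for illustration only.
replies = ["Yes.", "No", "I am not sure", "yes"]
gold = ["Yes", "Yes", "No", "No"]
print(recognition_accuracy(replies, gold))  # 0.25
print(hallucination_rate(gold))  # 0.5
```

Counting unparseable replies as wrong penalizes models that ignore the requested output format; excluding them instead would raise the score, so whichever rule is used should be reported alongside the result.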

Insights and Implications

The benchmark provides a systematic framework for studying hallucination patterns in LLMs. The results underscore the value of supplying LLMs with auxiliary information, such as external knowledge, to refine their outputs and reduce factual errors. This has direct implications for deploying LLMs in sensitive applications where factual accuracy is critical.

Future Directions

Further research could integrate dynamic knowledge retrieval with LLMs to address hallucinations more robustly. The benchmark could also be extended to more varied datasets and hallucination types, which would deepen insight into hallucination behavior and inform LLM design.

HaluEval is an important step toward measuring and improving the reliability of LLMs.