Benchmarking Hallucinations in Chinese LLMs with UHGEval

The paper examines the tendency of large language models (LLMs) to produce hallucinated text, a tendency that limits their reliability in professional applications. To address a gap in how hallucinations are assessed, the authors introduce UHGEval, an Unconstrained Hallucination Generation Evaluation benchmark that targets the outputs of Chinese LLMs in the news domain.

Overview and Methodological Contributions

The paper critiques existing benchmarks that rely on constrained generation to save cost and time, arguing that such methods do not reflect real-world applications, where generation is typically unrestricted. These benchmarks often induce hallucinations deliberately or alter legitimate texts to fabricate them, and the resulting errors may not match those LLMs actually make during free generation.

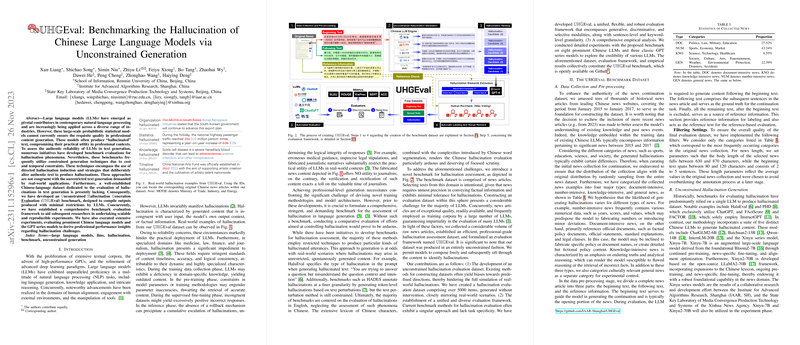

A key contribution of this work is a Chinese-language dataset that captures hallucinations produced under unconstrained generation. The dataset comprises over 5,000 annotated items derived from historical news articles and is divided into four categories: document-intensive, number-intensive, knowledge-intensive, and general news. This split reflects the fact that hallucinations may manifest differently across content types.
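To make the four-way split concrete, here is a minimal Python sketch of how one annotated item might be represented. The class and field names (`NewsCategory`, `HallucinationItem`, `beginning_text`, and so on) are hypothetical and not taken from the released dataset; they only illustrate the kind of information an item could carry.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class NewsCategory(Enum):
    """The four news types described for the dataset."""
    DOCUMENT_INTENSIVE = "document-intensive"
    NUMBER_INTENSIVE = "number-intensive"
    KNOWLEDGE_INTENSIVE = "knowledge-intensive"
    GENERAL = "general"


@dataclass
class HallucinationItem:
    # Hypothetical schema for illustration; the real dataset fields may differ.
    item_id: str
    category: NewsCategory
    beginning_text: str   # opening of the original news article
    continuation: str     # LLM-generated continuation under test
    reference: str        # the article's real continuation
    hallucinated_spans: list[str] = field(default_factory=list)  # annotated fragments


def category_counts(items: list[HallucinationItem]) -> dict[NewsCategory, int]:
    """Count items per category, reporting zero for categories with no items."""
    counts = Counter(item.category for item in items)
    return {cat: counts[cat] for cat in NewsCategory}


items = [
    HallucinationItem("1", NewsCategory.NUMBER_INTENSIVE, "...", "...", "...", ["6.1%"]),
    HallucinationItem("2", NewsCategory.GENERAL, "...", "...", "..."),
]
print(category_counts(items))
```

Using an `Enum` for the category keeps the four labels fixed, so a typo in a category name fails loudly instead of silently creating a fifth bucket.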

Dataset Construction

Dataset construction involves two stages: hallucination ranking, followed by automatic labeling with human rechecking. The ranking algorithm weighs fluency against the likelihood of hallucination, selecting candidate texts that are coherent yet likely to contain hallucinations. The authors also propose keyword precision (kwPrec) as an alternative to the n-gram overlap metrics BLEU and ROUGE, arguing that it better identifies the fact-related inaccuracies that matter most.
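The sketch below illustrates these two ideas under simplifying assumptions. `kw_prec` computes keyword precision as the share of a candidate's keywords that also appear in the reference continuation; keyword extraction itself is assumed to happen upstream. `rank_candidates` is a hypothetical stand-in for the paper's ranking algorithm, not a reproduction of it: it discards candidates below an assumed fluency threshold and orders the rest by ascending kwPrec, so the candidate most likely to contain fact-related hallucinations comes first. The fluency scores, the threshold, and all names are illustrative assumptions.

```python
from dataclasses import dataclass


def kw_prec(candidate_keywords: list[str], reference: str) -> float:
    """Keyword precision: fraction of the candidate's keywords found in the reference.

    Substring matching keeps this usable for unsegmented Chinese text.
    """
    if not candidate_keywords:
        # No keywords means nothing fact-bearing to check; treat as fully precise.
        return 1.0
    hits = sum(1 for kw in candidate_keywords if kw in reference)
    return hits / len(candidate_keywords)


@dataclass
class Candidate:
    model_name: str      # which generating LLM produced the continuation
    text: str
    keywords: list[str]  # assumed to be extracted upstream
    fluency: float       # assumed score in [0, 1] from some fluency scorer


def rank_candidates(candidates: list[Candidate], reference: str,
                    min_fluency: float = 0.5) -> list[Candidate]:
    """Hypothetical ranking: keep fluent candidates, lowest kwPrec first."""
    fluent = [c for c in candidates if c.fluency >= min_fluency]
    return sorted(fluent, key=lambda c: kw_prec(c.keywords, reference))


reference = "Beijing's GDP grew 5.2% in 2023."
print(kw_prec(["Beijing", "GDP", "6.1%"], reference))  # 2 of 3 keywords match
```

Unlike BLEU or ROUGE, which reward any overlapping n-grams, this score ignores filler words and drops only when a fact-bearing keyword such as a figure or name is missing from the reference.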

To diversify the dataset, the authors used five Chinese LLMs to generate hallucination candidates, which reduces the risk of model-specific bias.

Evaluation Framework

The proposed evaluation framework includes discriminative, selective, and generative evaluation. Discriminative evaluation asks an LLM to determine whether a text contains hallucinations; selective evaluation asks it to choose between text options with and without hallucinations; and generative evaluation analyzes LLM-generated continuations for hallucinated content using reference-based techniques.
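The three modes differ mainly in what is compared against what. The following sketch shows one plausible way to score each: discriminative and selective evaluation reduce to accuracy against gold answers (a yes/no hallucination verdict, or the index of the hallucination-free option), while generative evaluation compares a continuation with the reference text. For the generative case it substitutes a simple BLEU-1-style clipped unigram precision; this is an illustrative stand-in rather than the paper's exact metric suite, and whitespace tokenization is an assumption that would need a word segmenter for Chinese.

```python
from collections import Counter
from typing import Sequence, TypeVar

T = TypeVar("T")


def accuracy(predicted: Sequence[T], expected: Sequence[T]) -> float:
    """Fraction of positions where the prediction equals the gold answer.

    Discriminative: answers are booleans ("contains a hallucination?").
    Selective: answers are indices of the option judged hallucination-free.
    """
    if len(predicted) != len(expected) or not expected:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == e for p, e in zip(predicted, expected)) / len(expected)


def clipped_unigram_precision(continuation: str, reference: str) -> float:
    """BLEU-1-style precision: each token is credited at most as often as it
    appears in the reference. Whitespace tokenization is a simplification."""
    tokens = continuation.split()
    if not tokens:
        return 0.0
    ref_counts = Counter(reference.split())
    matched = sum(min(n, ref_counts[tok]) for tok, n in Counter(tokens).items())
    return matched / len(tokens)


print(accuracy([True, False, True], [True, True, True]))  # discriminative
print(accuracy([0, 1], [0, 0]))                            # selective
print(clipped_unigram_precision("the the cat", "the cat sat"))  # generative
```

Clipping matters: without it, a continuation that repeats a single reference word many times would score perfectly.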

In the empirical analysis, the authors tested eight prominent Chinese LLMs and three GPT-series models. Notably, most models performed better on number-intensive and general news, which suggests that document-intensive and knowledge-intensive content is harder for LLMs to handle reliably.
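Category-level findings like this come from breaking scores down by model and news type. A minimal sketch of that aggregation follows, assuming per-item results are available as `(model, category, is_correct)` tuples; the model name and data in the example are placeholders, not results from the paper.

```python
from collections import defaultdict


def per_category_accuracy(results):
    """Map each model to {category: accuracy} from (model, category, is_correct) tuples."""
    # tallies[model][category] holds [number correct, number seen]
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for model, category, is_correct in results:
        cell = tallies[model][category]
        cell[0] += int(is_correct)
        cell[1] += 1
    return {
        model: {cat: correct / seen for cat, (correct, seen) in cats.items()}
        for model, cats in tallies.items()
    }


def best_category(scores_by_category):
    """Return the category with the highest accuracy for one model."""
    return max(scores_by_category, key=scores_by_category.get)


# Placeholder data for illustration only.
results = [
    ("model-x", "number-intensive", True),
    ("model-x", "number-intensive", True),
    ("model-x", "knowledge-intensive", False),
    ("model-x", "knowledge-intensive", True),
]
scores = per_category_accuracy(results)
print(scores["model-x"])
print(best_category(scores["model-x"]))  # number-intensive
```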

Discussion and Implications

The findings suggest that Chinese LLMs, especially domain-specific ones such as Xinyu2-70B, perform well in selective evaluation, which may indicate robustness in narrower contexts such as the news domain. Generative evaluation proved more challenging, highlighting how difficult it is for LLMs to produce continuations that are both coherent and free of hallucinations.

By aligning its evaluation tasks with real-world scenarios, this research underscores the need for better LLM training methods and may guide improvements in knowledge integration and retrieval. It also suggests that leveraging domain-specific knowledge can substantially improve the factual accuracy of LLM outputs.

Conclusion and Future Directions

By constructing UHGEval and evaluating Chinese LLMs with it, the paper establishes a rigorous standard for assessing hallucinations in unconstrained generation, paving the way for more reliable LLM applications in professional fields such as journalism and academia. Future research will likely extend the benchmark to other languages and domains and work toward LLMs that generate reliable, contextually appropriate content across a range of applications.