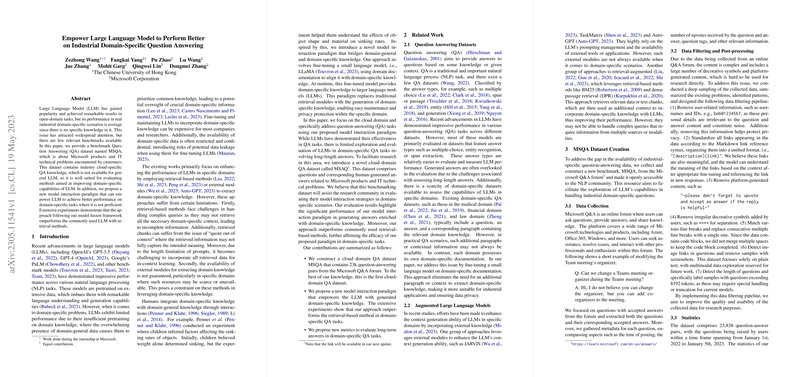

Introduction

A recent paper in AI and natural language processing addresses the difficulty large language models (LLMs) have with domain-specific problems. Despite their broad knowledge and strong performance on open-domain tasks, these models often fall short on domain-specific question answering (QA) because specialized knowledge is underrepresented in their pretraining. This performance gap has driven a surge of interest in methods that fine-tune or otherwise improve LLMs for such contexts.

MSQA Dataset Creation

The researchers introduce MSQA, a benchmark dataset focused on Microsoft products and IT technical issues. It contains 32,000 QA pairs and is designed to both test and improve LLMs' domain-specific abilities. MSQA targets an area that general LLMs do not cover extensively, which makes it suited to evaluating question answering in industrial-domain scenarios. The paper also notes that fine-tuning LLMs on domain data is costly and carries a risk of data leakage, and that access to such data is often limited because it is confidential.

Methodology

The proposed approach first pre-trains a smaller LLM on domain documentation to instill domain-specific knowledge. That model is then instruction-tuned with an emphasis on QA tasks, so that it can apply the knowledge it has acquired. At runtime, the fine-tuned domain-specific model assists the general LLM by supplying relevant domain-specific information. This interaction paradigm removes the need for traditional data retrieval methods, which makes it easier to maintain privacy while staying up to date with domain knowledge.
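The runtime interaction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `TextModel`, `build_prompt`, `answer`, and the two toy models are hypothetical, the prompt wording is invented for the example, and each model is reduced to a plain text-in, text-out function.

```python
from typing import Callable

# Any text-in, text-out model. This alias is a hypothetical stand-in for
# real model clients; it is not part of the paper.
TextModel = Callable[[str], str]


def build_prompt(question: str, domain_knowledge: str) -> str:
    """Combine the question with knowledge produced by the domain model.

    The prompt wording here is an assumption made for illustration.
    """
    return (
        "Use the domain knowledge below to answer the question.\n\n"
        f"Domain knowledge:\n{domain_knowledge}\n\n"
        f"Question:\n{question}\n\n"
        "Answer:"
    )


def answer(question: str, domain_model: TextModel, general_llm: TextModel) -> str:
    # Step 1: the small domain-specific model generates background knowledge,
    # taking the place of a retriever querying a document store.
    domain_knowledge = domain_model(question)
    # Step 2: the general LLM answers with that knowledge in its prompt.
    return general_llm(build_prompt(question, domain_knowledge))


# Toy stand-ins so the sketch runs without any model or network access.
def toy_domain_model(question: str) -> str:
    return "Placeholder domain note related to: " + question


def toy_general_llm(prompt: str) -> str:
    return "[general LLM output for a prompt of %d characters]" % len(prompt)


if __name__ == "__main__":
    print(answer("How do I reset the service?", toy_domain_model, toy_general_llm))
```

In this sketch only the text generated by the domain model reaches the general LLM, which reflects the privacy argument above: the underlying domain documents never need to be exposed to it.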

Experiment and Results

In the reported experiments, the proposed model interaction paradigm outperformed traditional retrieval-based methods on both standard evaluation metrics and newly introduced ones. The new metrics are tailored to long-form QA tasks and, according to the authors, align better with human evaluations. Importantly, the method showed significant improvements in generating contextually accurate domain-specific answers. The researchers have made the source code and sample data publicly available to foster further research on empowering LLMs within specific industrial domains.