Evaluating Object Hallucination in Large Vision-Language Models

The paper "Evaluating Object Hallucination in Large Vision-Language Models" addresses object hallucination in large vision-language models (LVLMs). Fusing the language and vision modalities lets LVLMs take on complex multimodal tasks. However, these models often describe objects that are not present in the accompanying image. Such output departs from the ground truth and can distort how the models are interpreted and applied in real-world scenarios.

Problem Statement and Motivation

Hallucination in LVLMs warrants close scrutiny because of its potential consequences. LVLMs inherit the ability of LLMs to process and interpret language, and with it some of their shortcomings, hallucination among them. This is a particular obstacle in real-time applications such as autonomous vehicle navigation, where a single piece of visual misinformation could lead to catastrophic outcomes. Hallucination therefore compromises both the fidelity and the utility of these models.

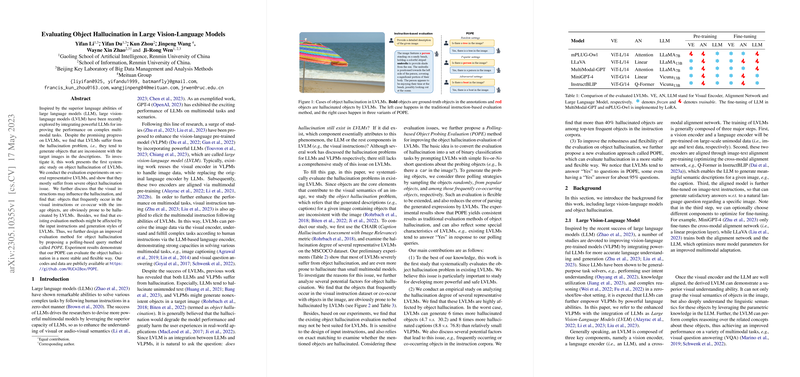

Evaluation of Existing LVLMs

The core of the paper is a systematic probe of hallucination in representative LVLMs, including LLaVA, MiniGPT-4, Multimodal-GPT, and mPLUG-Owl, on datasets built mainly on MSCOCO, which is widely used for its large collection of labeled images and captions. The empirical results show a consistent trend: hallucination is pervasive in these models, and they hallucinate more than smaller, more specialized vision-language pre-trained models (VLPMs).

The analysis uses the CHAIR metrics, which measure hallucination at both the object-instance level and the sentence level, and it reports high hallucination rates. These metrics have limitations, however, particularly their sensitivity to instruction wording and output length, a gap the authors aim to close with an alternative evaluation method.
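The two CHAIR levels can be illustrated with a minimal sketch. It assumes that the objects mentioned in each caption have already been extracted and mapped to the dataset's object vocabulary; the function name `chair_scores` and the input layout are hypothetical and are not taken from the paper's code.

```python
from typing import List, Set, Tuple


def chair_scores(
    mentioned: List[Set[str]], ground_truth: List[Set[str]]
) -> Tuple[float, float]:
    """Compute instance-level and sentence-level CHAIR.

    mentioned[i]    : objects named in the caption generated for image i
    ground_truth[i] : objects actually annotated in image i
    (Both layouts are assumptions made for this sketch.)
    """
    total_mentions = 0          # all object mentions across captions
    hallucinated_mentions = 0   # mentions of objects absent from the image
    hallucinated_captions = 0   # captions with at least one such mention

    for caption_objects, true_objects in zip(mentioned, ground_truth):
        hallucinated = caption_objects - true_objects
        total_mentions += len(caption_objects)
        hallucinated_mentions += len(hallucinated)
        if hallucinated:
            hallucinated_captions += 1

    # CHAIR_I: share of mentioned objects that are hallucinated.
    chair_i = hallucinated_mentions / total_mentions if total_mentions else 0.0
    # CHAIR_S: share of captions containing a hallucinated object.
    chair_s = hallucinated_captions / len(mentioned) if mentioned else 0.0
    return chair_i, chair_s


if __name__ == "__main__":
    captions = [{"dog", "frisbee"}, {"cat", "table", "chair"}]
    truth = [{"dog", "frisbee", "person"}, {"cat", "table"}]
    print(chair_scores(captions, truth))
```

Because both scores are computed over whatever objects a caption happens to mention, a longer caption or a differently worded instruction changes the denominator, which is one way to see the sensitivity noted above.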

Introduction to POPE

To address these limitations, the authors propose an evaluation strategy called Polling-based Object Probing Evaluation (POPE). Unlike previous approaches that rely heavily on captions, POPE detects hallucination through a series of binary classification queries, that is, yes-or-no questions about whether a given object appears in the image. By recasting evaluation as a structured decision task, POPE reduces the biases induced by instruction variation and output verbosity.
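A polling-style evaluation of this kind could be sketched as follows. The question template, the helper names `build_questions` and `binary_metrics`, and the choice of reported statistics are assumptions made for illustration; the model's answers are supplied as plain strings rather than obtained from any real LVLM API.

```python
from typing import Dict, Iterable, List, Tuple


def build_questions(
    present: Iterable[str], absent: Iterable[str]
) -> List[Tuple[str, str]]:
    """Pair each yes-or-no question with its expected answer.

    The template below is an illustrative assumption.
    """
    template = "Is there a {} in the image?"
    questions = [(template.format(obj), "yes") for obj in present]
    questions += [(template.format(obj), "no") for obj in absent]
    return questions


def binary_metrics(labels: List[str], answers: List[str]) -> Dict[str, float]:
    """Standard binary classification statistics, treating "yes" as positive."""
    pairs = list(zip(labels, answers))
    tp = sum(1 for l, a in pairs if l == "yes" and a == "yes")
    fp = sum(1 for l, a in pairs if l == "no" and a == "yes")
    tn = sum(1 for l, a in pairs if l == "no" and a == "no")
    fn = sum(1 for l, a in pairs if l == "yes" and a == "no")

    total = len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # Share of "yes" answers; a high value suggests a bias toward agreeing.
        "yes_ratio": (tp + fp) / total if total else 0.0,
    }


if __name__ == "__main__":
    qs = build_questions(present=["dog", "frisbee"], absent=["car", "bench"])
    labels = [expected for _, expected in qs]
    answers = ["yes", "yes", "yes", "no"]  # hypothetical model outputs
    print(binary_metrics(labels, answers))
```

Since every response is reduced to a single yes or no, the score does not depend on how long or how elaborate the model's free-form output would otherwise be.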

Findings and Discussions

A key finding is the influence of visual instruction data on hallucination, examined by probing both frequently occurring objects and frequently co-occurring objects. LVLMs show a pronounced tendency to hallucinate objects that appear often in the training data, or that often co-occur there with objects present in the image, suggesting that dataset composition is a crucial factor in hallucination.
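One way to probe these two effects is to choose the absent objects for yes-or-no questions by overall frequency or by co-occurrence with the objects that are present. The sketch below assumes per-image object annotations given as sets; the function names and the ranking scheme are hypothetical illustrations, not the authors' implementation.

```python
from collections import Counter
from itertools import permutations
from typing import List, Set, Tuple


def count_statistics(annotations: List[Set[str]]) -> Tuple[Counter, Counter]:
    """Count object frequency and ordered pairwise co-occurrence over images."""
    freq: Counter = Counter()
    cooc: Counter = Counter()
    for objects in annotations:
        freq.update(objects)
        # Each ordered pair (a, b) with a != b that shares an image.
        cooc.update(permutations(sorted(objects), 2))
    return freq, cooc


def frequent_negatives(freq: Counter, present: Set[str], k: int) -> List[str]:
    """Top-k most frequent objects in the dataset that are absent from the image."""
    ranked = [obj for obj, _ in freq.most_common() if obj not in present]
    return ranked[:k]


def cooccurring_negatives(cooc: Counter, present: Set[str], k: int) -> List[str]:
    """Top-k absent objects ranked by co-occurrence with the present objects."""
    scores: Counter = Counter()
    for (a, b), n in cooc.items():
        if a in present and b not in present:
            scores[b] += n
    return [obj for obj, _ in scores.most_common(k)]


if __name__ == "__main__":
    data = [
        {"person", "car"},
        {"person", "dog"},
        {"person", "car", "truck"},
        {"dog", "frisbee"},
    ]
    freq, cooc = count_statistics(data)
    print(frequent_negatives(freq, present={"dog"}, k=2))
    print(cooccurring_negatives(cooc, present={"car"}, k=2))
```

If a model answers "yes" more often for negatives chosen this way than for randomly chosen ones, that is consistent with the frequency and co-occurrence effects described above.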

The research also discusses how hallucination differs between models trained on extensive instruction datasets and models trained on data with concise instructions.

Conclusion and Future Directions

This work offers a framework for examining and refining the understanding of hallucination in LVLMs. Shifting evaluation from an output-centric view to query-based verification is a promising path toward detecting and mitigating hallucination errors in multimodal systems.

As future directions, increasing the diversity and granularity of instruction data and strengthening context-based reasoning will likely make LVLMs more robust to hallucination. In addition, combining automated segmentation with human-in-the-loop methods could yield a hybrid evaluation mechanism that further improves model precision and reliability.

In sum, by directing attention toward reducing hallucination, this paper sets the stage for more effective and safer deployment of LVLMs in diverse multimodal contexts.