Evaluation of Zero-Shot Ranking by LLMs for Recommender Systems

The paper investigates how well LLMs such as GPT-4 can function as zero-shot rankers within recommender systems. The approach relies on the general task-solving ability of LLMs, with no additional training, and recasts recommendation as a conditional ranking problem. The paper examines both the capabilities and the limitations of LLMs on ranking tasks in recommender systems.

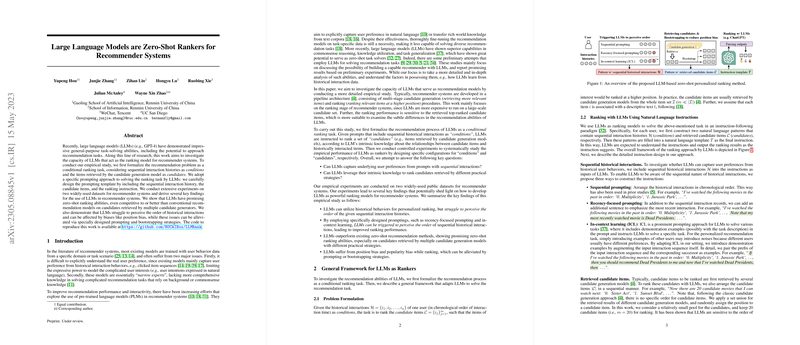

The authors formalize the recommendation problem by treating a user's sequential interaction history as the condition and the items retrieved by other models as the candidates. The recommendation task thus becomes a conditional ranking task, in which the LLM is expected to rank the candidates using its intrinsic knowledge. By constructing natural language prompts, the study examines whether LLMs can make use of historical user behaviors and understand user-item relationships well enough to rank effectively.
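
To make the formulation concrete, the following is a minimal sketch of how a history (the condition) and a candidate set might be rendered into one natural language prompt. The function name `build_ranking_prompt`, the letter labels, and the prompt wording are all assumptions made for illustration; they are not the templates used in the paper.

```python
from typing import Sequence


def build_ranking_prompt(history: Sequence[str], candidates: Sequence[str]) -> str:
    """Render a history (condition) and candidate items as one ranking prompt.

    Hypothetical helper: the wording below is illustrative, not the paper's template.
    """
    if len(candidates) > 26:
        raise ValueError("this sketch labels candidates A-Z, so at most 26 are supported")

    # Number the history so its chronological order is explicit in the text.
    history_lines = [f"{i}. {title}" for i, title in enumerate(history, start=1)]
    # Label candidates with letters so the model can answer with a compact ordering.
    candidate_lines = [
        f"[{chr(ord('A') + i)}] {title}" for i, title in enumerate(candidates)
    ]

    return "\n".join([
        "I have interacted with the following items, in chronological order:",
        *history_lines,
        "",
        f"Rank these {len(candidates)} candidate items by how likely I am "
        "to interact with them next:",
        *candidate_lines,
        "",
        "Answer with the candidate labels only, most likely first.",
    ])


if __name__ == "__main__":
    print(build_ranking_prompt(["Alien", "Blade Runner"], ["Dune", "Heat"]))
```

The resulting string would be sent to an LLM as-is, and the returned label sequence parsed back into an item ranking; that parsing step is omitted here.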

Extensive experiments are conducted over two popular datasets using specifically designed prompting strategies. The findings are consolidated into key observations regarding the performance of LLMs as zero-shot rankers:

- Order Perception Challenges: LLMs typically struggle to perceive the order of historical interactions. The authors therefore devised prompting strategies that cue the model to attend to interaction order, which improved ranking results over the baseline.

- Bias Issues: The order of candidate presentation significantly impacts LLM ranking performance, indicating a position bias. Furthermore, a predisposition towards recommending popular items (popularity bias) was observed. To mitigate these biases, strategies such as bootstrapping and tailored prompting were proposed, making the ranking results more robust.

- Effective Zero-Shot Ranking: LLMs demonstrated promising zero-shot ranking ability, particularly when the candidates were produced by several different candidate generation models, which indicates potential applicability to mixed candidate sets. The result suggests that LLMs can draw on intrinsic knowledge, accessed through item text features, when ranking.
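
The bootstrapping idea mentioned under Bias Issues can be sketched as follows: shuffle the candidates several times, rank each shuffled list, and aggregate the rankings so that no item benefits from a fixed position in the prompt. This is a sketch under stated assumptions. `rank_fn` is a hypothetical stand-in for the LLM call, and the Borda-style point aggregation is one reasonable choice rather than the procedure confirmed by the paper.

```python
import random
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: takes candidates in presentation order, returns them ranked.
RankFn = Callable[[Sequence[str]], List[str]]


def bootstrap_rank(
    candidates: Sequence[str],
    rank_fn: RankFn,
    rounds: int = 3,
    seed: int = 0,
) -> List[str]:
    """Rank candidates over several shuffled presentations and merge the results."""
    rng = random.Random(seed)
    scores: Dict[str, int] = {item: 0 for item in candidates}

    for _ in range(rounds):
        shuffled = list(candidates)
        rng.shuffle(shuffled)  # vary the presentation order each round
        ranking = rank_fn(shuffled)
        for position, item in enumerate(ranking):
            # Borda-style points (an assumption): earlier positions earn more.
            # Items the ranker invents are ignored; omitted items earn nothing.
            if item in scores:
                scores[item] += len(candidates) - position

    # sorted() is stable, so ties keep the original candidate order.
    return sorted(candidates, key=lambda item: -scores[item])


if __name__ == "__main__":
    # Toy ranker that ignores presentation order and sorts alphabetically.
    print(bootstrap_rank(["c", "a", "b"], lambda items: sorted(items)))
```

A ranker whose output does not depend on presentation order is unaffected by the shuffling, while a position-biased ranker has its bias spread across items over the rounds. The cost is `rounds` model calls per ranking request.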

Experiments indicated that LLMs, especially those with larger parameter counts such as GPT-3.5 and GPT-4, outperformed other zero-shot recommendation methods by a substantial margin and were even competitive with conventional models trained specifically on the target datasets. This supports the paper's premise that pre-trained LLMs hold substantial, untapped potential for improving recommendation tasks.

The paper situates its findings within the broader context of transfer learning for recommender systems, illustrating that LLMs have capacity beyond narrow-domain tasks thanks to their pre-training on a vast corpus of language data. It underscores limitations of traditional recommendation models that LLMs could potentially ameliorate, particularly in cases where matching users to candidate items requires broader background knowledge.

While this work sheds light on how LLM capabilities can be applied to recommendation, challenges remain, such as computational overhead and the biases inherited from LLM training corpora. Future directions could include mechanisms that let LLMs incorporate user feedback to refine recommendations, and hybrid models that integrate LLMs with traditional recommender architectures for improved, scalable performance.

In conclusion, the paper provides foundational insights that could help recommender systems evolve into adaptable, context-aware engines that draw on large volumes of semantic data without extensive re-training. It opens avenues for recommender system designs that build on LLM capabilities.