Analyzing the Efficacy of LLMs in Inferring Psychological Dispositions from Social Media Data

This paper investigates whether large language models (LLMs), specifically GPT-3.5 and GPT-4, can infer psychological dispositions from users' social media activity, with dispositions operationalized as the Big Five personality traits. To assess the accuracy and reliability of these inferences, the paper compares the models' inferred personality scores with self-reported scores obtained through the International Personality Item Pool (IPIP).
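For readers unfamiliar with IPIP self-reports, the sketch below illustrates how a single Big Five scale is commonly scored. Each item is answered on a 1 to 5 Likert scale, negatively keyed items are reversed, and the scale score is the item average. The item ids and keying are hypothetical placeholders, not the actual IPIP item set or the paper's scoring code.

```python
from statistics import fmean

LIKERT_MAX = 5  # assumed 1-5 response scale

def score_scale(responses: dict[str, int], keys: dict[str, int]) -> float:
    """Average the items of one scale; keys maps item id -> +1 (positive) or -1 (reversed)."""
    values = []
    for item, sign in keys.items():
        r = responses[item]
        if not 1 <= r <= LIKERT_MAX:
            raise ValueError(f"response out of range for {item}: {r}")
        # A negatively keyed item is reversed: 1 <-> 5, 2 <-> 4, 3 stays 3.
        values.append(r if sign > 0 else LIKERT_MAX + 1 - r)
    return fmean(values)

# Hypothetical four-item Extraversion scale and one respondent's answers.
extraversion_keys = {"E1": +1, "E2": -1, "E3": +1, "E4": -1}
answers = {"E1": 4, "E2": 2, "E3": 5, "E4": 1}
print(score_scale(answers, extraversion_keys))
```

Reversing negatively keyed items before averaging ensures that a higher scale score always means more of the trait.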

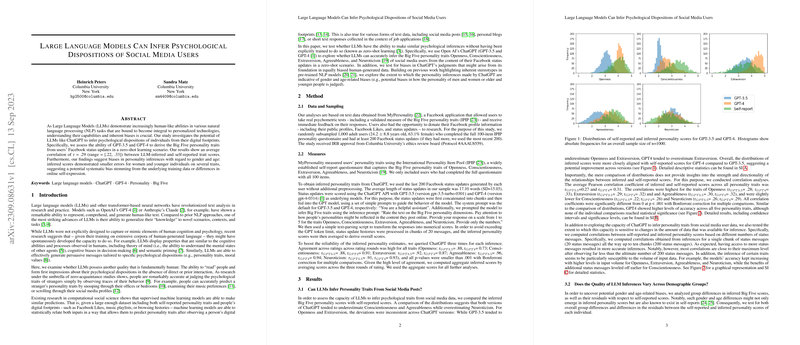

Descriptive Statistics and Comparative Outcomes

The paper reports descriptive statistics for the Big Five trait scores as inferred by GPT-3.5 and GPT-4 and as self-reported. The five traits are Openness (O), Conscientiousness (C), Extraversion (E), Agreeableness (A), and Neuroticism (N). Notably, GPT-4 assigns higher mean scores than GPT-3.5 across the traits, and the differences are most marked for Openness and Extraversion.
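The kind of comparison described above can be sketched as follows. The code computes per-trait means and sample standard deviations, then finds the per-trait gap in means between two score sources. All scores are made-up toy values on an assumed 1 to 5 scale. They are not the paper's data.

```python
from statistics import fmean, stdev

TRAITS = ("O", "C", "E", "A", "N")  # Openness ... Neuroticism

def describe(scores):
    """Per-trait (mean, sample SD); scores maps trait -> list of per-user scores."""
    return {t: (fmean(scores[t]), stdev(scores[t])) for t in TRAITS}

def mean_gap(model_a, model_b):
    """Per-trait difference in means, model_a minus model_b."""
    return {t: fmean(model_a[t]) - fmean(model_b[t]) for t in TRAITS}

# Hypothetical toy scores for three users (illustrative only).
gpt35 = {"O": [3.0, 3.2, 3.4], "C": [3.1, 3.3, 3.5], "E": [2.6, 2.8, 3.0],
         "A": [3.4, 3.6, 3.8], "N": [2.5, 2.7, 2.9]}
gpt4 = {"O": [3.6, 3.8, 4.0], "C": [3.2, 3.4, 3.6], "E": [3.1, 3.3, 3.5],
        "A": [3.5, 3.7, 3.9], "N": [2.6, 2.8, 3.0]}

for trait, gap in mean_gap(gpt4, gpt35).items():
    print(f"{trait}: GPT-4 minus GPT-3.5 = {gap:+.2f}")
```

The same `describe` call can be applied to the self-reported scores so that all three sources appear side by side.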

Correlational Analyses

The correlation between model-inferred and self-reported scores serves as a quantitative measure of the models' predictive validity. For both GPT-3.5 and GPT-4, the coefficients indicate moderate positive correlations, and GPT-4 correlates more strongly with self-reports than GPT-3.5 on every trait. For Openness, for example, GPT-4 reaches a correlation of 0.327, compared with 0.282 for GPT-3.5.
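The summary does not state which correlation coefficient the paper uses. The sketch below assumes Pearson's r, the usual choice for this kind of agreement check, and computes it per trait between inferred and self-reported scores. The four-user data set is hypothetical.

```python
from math import sqrt
from statistics import fmean

def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def trait_correlations(inferred, self_report, traits=("O", "C", "E", "A", "N")):
    """Per-trait r between model-inferred and self-reported scores."""
    return {t: pearson_r(inferred[t], self_report[t]) for t in traits}

# Hypothetical Openness scores for four users (illustrative only).
self_report = {"O": [2.0, 3.0, 4.0, 5.0]}
model_inferred = {"O": [3.0, 3.5, 3.0, 4.5]}
print(trait_correlations(model_inferred, self_report, traits=("O",)))
```

In Python 3.10 and later, `statistics.correlation` computes the same coefficient. The explicit version is shown here to make the formula visible.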

Implications of Input Volume on Correlations

One significant aspect the paper explores is how the volume of input messages affects the correlation between inferred and self-reported scores. For both model versions, supplying more messages generally strengthens the correlation. This is consistent with the idea that a larger body of text gives the model more evidence about a user's personality.

Subgroup Analyses

The paper conducts subgroup analyses by gender and age to identify demographic biases or strengths in model performance. Gender-based comparisons show that the inferred scores diverge from self-reports on some traits, and female users generally have higher mean Agreeableness and Extraversion. The age analysis reveals age-related differences, most notably higher mean Conscientiousness among older users for both GPT-3.5 and GPT-4.
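A subgroup comparison like this can be sketched by grouping users and averaging one trait within each group. The records, field names, and age cut points below are hypothetical, because the summary does not give the paper's bands. Running the same function on self-reported scores and on each model's inferred scores shows where the two diverge.

```python
from collections import defaultdict
from statistics import fmean

def group_means(records, group_key, trait):
    """Mean of one trait score within each subgroup (e.g. gender or age band)."""
    buckets = defaultdict(list)
    for rec in records:
        buckets[rec[group_key]].append(rec[trait])
    return {group: fmean(vals) for group, vals in buckets.items()}

def age_band(age, edges=(25, 35, 50)):
    """Hypothetical age bands; the paper's actual cut points are not stated here."""
    for edge in edges:
        if age < edge:
            return f"<{edge}"
    return f">={edges[-1]}"

# Hypothetical inferred scores for four users (illustrative only).
records = [
    {"gender": "female", "age": 22, "A": 4.0, "C": 3.0},
    {"gender": "female", "age": 41, "A": 3.6, "C": 3.8},
    {"gender": "male", "age": 30, "A": 3.2, "C": 3.4},
    {"gender": "male", "age": 58, "A": 3.4, "C": 4.0},
]
for rec in records:
    rec["age_band"] = age_band(rec["age"])

print(group_means(records, "gender", "A"))
print(group_means(records, "age_band", "C"))
```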

Implications and Future Directions

This research underscores the potential of LLMs for psychological prediction, especially in fields such as digital marketing, personalized content delivery, and mental health assessment. The moderate positive correlations suggest that LLM-based psychological profiling is promising. They also highlight the need for careful attention to data privacy, ethics, and algorithmic fairness, particularly given the demographic variability observed in the subgroup analyses.

Future work could focus on improving the robustness and interpretability of model outputs, possibly by incorporating multimodal inputs to improve accuracy. Expanding the demographic diversity of training data might also help mitigate the observed biases and make inferences more reliable across broader populations.

In conclusion, this paper provides a valuable proof of concept for using LLMs to infer psychological traits. It also opens avenues for further research and technical refinements that could advance psychometrics in AI-driven environments.