Introduction

Recent discussion of the capabilities of LLMs has focused mainly on their merits as few-shot learners. In information extraction (IE), however, the efficacy of LLMs, particularly in few-shot settings, remains an open question. This paper examines what comparative advantage, if any, LLMs have over small language models (SLMs) across a range of popular IE tasks.

Performance Analysis of LLMs vs. SLMs

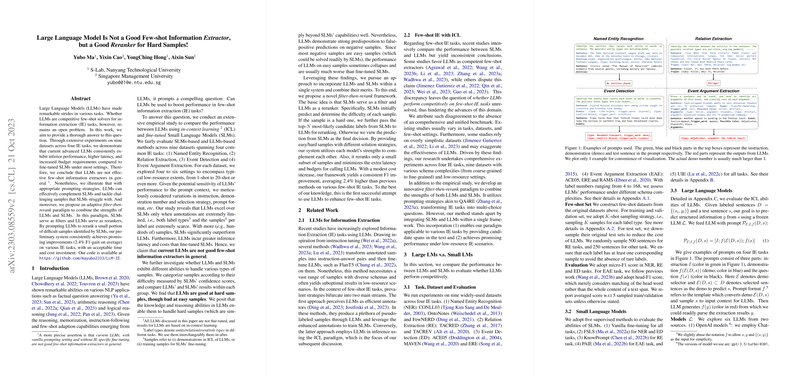

The comprehensive empirical evaluation conducted in this paper spans nine datasets across four canonical IE tasks: Named Entity Recognition (NER), Relation Extraction (RE), Event Detection (ED), and Event Argument Extraction (EAE). The researchers systematically compared the performance of in-context learning via LLMs against fine-tuned SLMs, adopting multiple configurations simulating typical real-world low-resource settings. Surprisingly, the overarching finding is that, except for extremely low-resource situations, LLMs fall short against their SLM counterparts. SLMs not only demonstrated superior results but also exhibited lower latency and reduced operational costs.

Probing the Efficacy of LLMs in Sample Difficulty Stratification

A core part of the research was examining how LLMs and SLMs each handle samples of varying difficulty. Fine-grained analysis showed that LLMs handle complex or 'hard' samples well, where SLMs typically falter. This split in competency suggests that while SLMs generally dominate, LLMs have a niche capability that can be exploited strategically on the difficult cases where SLMs struggle.

Adaptive Filter-then-rerank Paradigm

Building on these insights, the authors propose an adaptive filter-then-rerank paradigm. In this framework, SLMs first filter samples by confidence, splitting them into 'easy' and 'hard' categories. LLMs are then prompted to rerank only the focused subset of hard samples. This hybrid system consistently achieved an average F1 improvement of 2.4% while keeping time and resource costs acceptable, which supports combining the strengths of LLMs with the efficiency of SLMs.
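The control flow of such a filter-then-rerank pipeline can be sketched as below. This is only an illustration under stated assumptions, not the authors' implementation: the function name `filter_then_rerank`, the callables `slm_predict` and `llm_rerank`, the `threshold` and `top_k` parameters and their values, and the toy models are all hypothetical. The sketch also assumes that 'hard' means the SLM's top confidence falls below a fixed threshold, and that the LLM picks from the SLM's top-k candidate labels.

```python
from typing import Callable, List, Sequence, Tuple

# (label, SLM confidence) pairs; the format is an assumption for this sketch.
Candidates = List[Tuple[str, float]]


def filter_then_rerank(
    samples: Sequence[str],
    slm_predict: Callable[[str], Candidates],
    llm_rerank: Callable[[str, List[str]], str],
    threshold: float = 0.9,  # hypothetical confidence cut-off
    top_k: int = 3,          # hypothetical shortlist size
) -> List[Tuple[str, str]]:
    """Return (predicted label, which model decided) for each sample."""
    results = []
    for sample in samples:
        # Sort the SLM's candidates from most to least confident.
        ranked = sorted(slm_predict(sample), key=lambda c: c[1], reverse=True)
        best_label, best_score = ranked[0]
        if best_score >= threshold:
            # 'Easy' sample: keep the SLM's prediction, no LLM call needed.
            results.append((best_label, "slm"))
        else:
            # 'Hard' sample: let the LLM choose among the SLM's top-k labels.
            shortlist = [label for label, _ in ranked[:top_k]]
            choice = llm_rerank(sample, shortlist)
            # Fall back to the SLM if the LLM answers outside the shortlist.
            if choice not in shortlist:
                choice = best_label
            results.append((choice, "llm"))
    return results


# Toy stand-ins for a fine-tuned SLM and a prompted LLM (purely illustrative).
def toy_slm(sample: str) -> Candidates:
    scores = {
        "Paris is in France": [("LOC", 0.97), ("ORG", 0.02), ("PER", 0.01)],
        "Jordan signed the deal": [("LOC", 0.45), ("PER", 0.40), ("ORG", 0.15)],
    }
    return scores[sample]


def toy_llm(sample: str, shortlist: List[str]) -> str:
    return "PER" if "PER" in shortlist else shortlist[0]


predictions = filter_then_rerank(
    ["Paris is in France", "Jordan signed the deal"],
    toy_slm,
    toy_llm,
    threshold=0.9,
    top_k=2,
)
print(predictions)
```

In this toy run the first sample is resolved by the SLM alone, while the second falls below the threshold and is passed to the LLM stand-in. Because the LLM is called only on low-confidence samples, its cost scales with the size of the hard subset and not with the full dataset.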