Introduction to Generative IE

Generative Information Extraction (IE) is the process of obtaining structured knowledge, like entities, relations, and events, from unstructured textual data. It involves converting text into a structured form that is useful for various downstream applications such as knowledge graph construction, question answering, and knowledge reasoning. LLMs, with their advanced capabilities in text understanding and generation, have paved the way for new methodologies in IE, focusing on generating structured information rather than just extracting it.

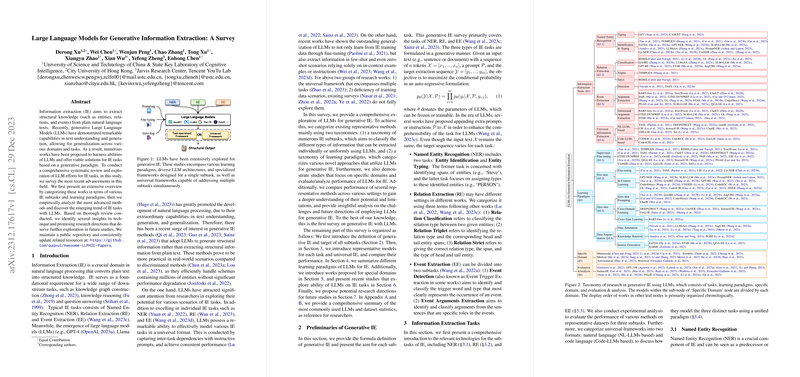

Recent Developments in LLMs for IE

LLMs have been integrated into IE tasks with impressive outcomes, and a variety of learning paradigms have been explored to improve their effectiveness. These include supervised fine-tuning, few-shot learning, and zero-shot learning. Each of these paradigms has its own set of strategies tailored to enhance LLMs' performance in IE. For instance, supervised fine-tuning extends a model's capabilities by leveraging existing datasets, while few-shot learning makes use of a small amount of labeled data to train the models effectively. Zero-shot learning employs models that can generalize to new tasks without any labeled examples. Another interesting development is the use of prompts, transforming the extraction process into a query-answering format that guides LLMs in producing the desired output.

The Future of LLMs in IE

This survey has identified several promising directions for future research, emphasizing the potential for the creation of universal frameworks that can handle various IE tasks and domains. Despite advancements, current methods sometimes struggle with long context inputs and structuring outputs in alignment with their training data. Improving the robustness and versatility of these methods is key to unlocking broader applications. Additionally, future research might explore more innovative prompt design strategies that enable LLMs to comprehend tasks more effectively and produce reliable output. Lastly, OpenIE poses unique challenges, suggesting that further investigation is needed to better leverage the knowledge and reasoning abilities of LLMs.

Conclusion

This survey contributes to the understanding of how LLMs are shaping the field of generative IE. By categorizing recent studies according to their learning paradigms and IE tasks, it offers insights into how these models are being fine-tuned, how few-shot or zero-shot learning is applied, and how data augmentation impacts performance. The research suggests that LLMs have much potential in IE, but also underscores the need for ongoing exploration to address existing constraints and unlock the full capabilities of these powerful models.